DRLLog: Deep Reinforcement Learning for Online Log Anomaly Detection

Impact Factor: 5.4

Zone 2: Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

IEEE Transactions on Network and Service Management

Pub Date: 2025-02-17

DOI:10.1109/TNSM.2025.3542595

Citations: 0

Abstract


DRLLog: Deep Reinforcement Learning for Online Log Anomaly Detection

System logs record the system’s status and application behavior, providing support for various system management and diagnostic tasks. However, existing methods for log anomaly detection face several challenges, including limitations in recognizing current types of anomalous logs and difficulties in performing online incremental updates to the anomaly detection models. To address these challenges, this paper introduces DRLLog, which applies Deep Reinforcement Learning (DRL) networks to detect anomalous events. DRLLog uses Deep Q Network (DQN) as the agent, with log entries serving as reward signals. By interacting with the environment generated from log data and adopting various action behaviors, it aims to maximize the reward value obtained as feedback. Through this approach, DRLLog achieves learning from historical log data and perception of the current environment, enabling continuous learning and adaptation to different log sequence patterns. Additionally, DRLLog introduces low-rank adaptation by using two low-rank parameter matrices in the fully connected layer of the DQN to represent changes in its weight matrix. During online model learning, only low-rank parameter matrices of the model are updated, effectively reducing the model’s overhead. Furthermore, DRLLog introduces focal loss to focus more on learning the features of anomalous logs, effectively addressing the issue of imbalanced quantities between normal and anomalous logs. We evaluated the performance on widely used log datasets, including HDFS, BGL and ThunderBird, showing an average improvement of 3% in F1-Score compared to baseline methods. During online model learning, DRLLog achieves an average reduction of 90% in parameter count and a significant decrease in training and testing time as well.
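The abstract names two standard building blocks, low-rank adaptation of a fully connected layer and focal loss, without giving their formulas. The following is a minimal sketch of both in plain Python, not the authors' implementation. It assumes the usual low-rank form W' = W + BA with W frozen, and the standard binary focal loss FL(p_t) = -α_t (1 - p_t)^γ log(p_t). The function names, layer sizes, rank, and the values of `gamma` and `alpha` are all hypothetical, and how DRLLog combines the loss with its Q-learning objective is not stated in the abstract.

```python
import math


def focal_loss(p_anomaly, label, gamma=2.0, alpha=0.75):
    """Standard binary focal loss for a single sample.

    p_anomaly: predicted probability that the log sequence is anomalous (0 < p < 1)
    label:     1 for anomalous, 0 for normal
    gamma, alpha: illustrative values, not taken from the paper
    """
    p_t = p_anomaly if label == 1 else 1.0 - p_anomaly
    alpha_t = alpha if label == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of confidently correct samples,
    # so the rare, hard (often anomalous) samples dominate the gradient.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x):
    """Output of a linear layer with a low-rank update: W x + B (A x).

    W: frozen weight matrix, shape d_out x d_in
    A: trainable matrix,     shape rank  x d_in
    B: trainable matrix,     shape d_out x rank
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]


def lora_param_counts(d_in, d_out, rank):
    """Return (full weight count, trainable low-rank count) for one layer."""
    return d_in * d_out, rank * (d_in + d_out)
```

With a hypothetical 512 x 512 layer and rank 8, `lora_param_counts(512, 512, 8)` gives 262,144 full weights against 8,192 trainable low-rank values. These sizes are chosen only to show the arithmetic; they are not the configuration behind the paper's reported 90% reduction.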

求助全文

通过发布文献求助,成功后即可免费获取论文全文。

去求助

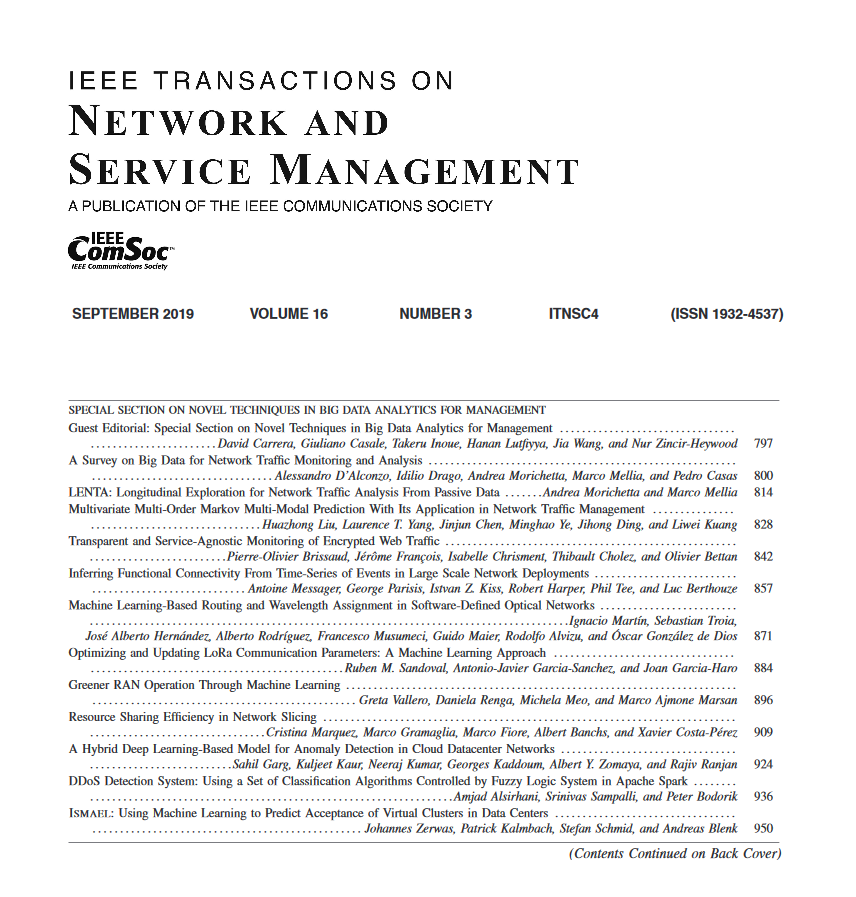

Source journal

IEEE Transactions on Network and Service Management

Computer Science-Computer Networks and Communications

CiteScore

9.30

Self-citation rate

15.10%

Articles published

325

Journal description:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed archival quality papers that advance the state-of-the-art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.
