Optimization of Data and Model Transfer for Federated Learning to Manage Large-Scale Network

IF 5.4

Zone 2 (Computer Science)

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

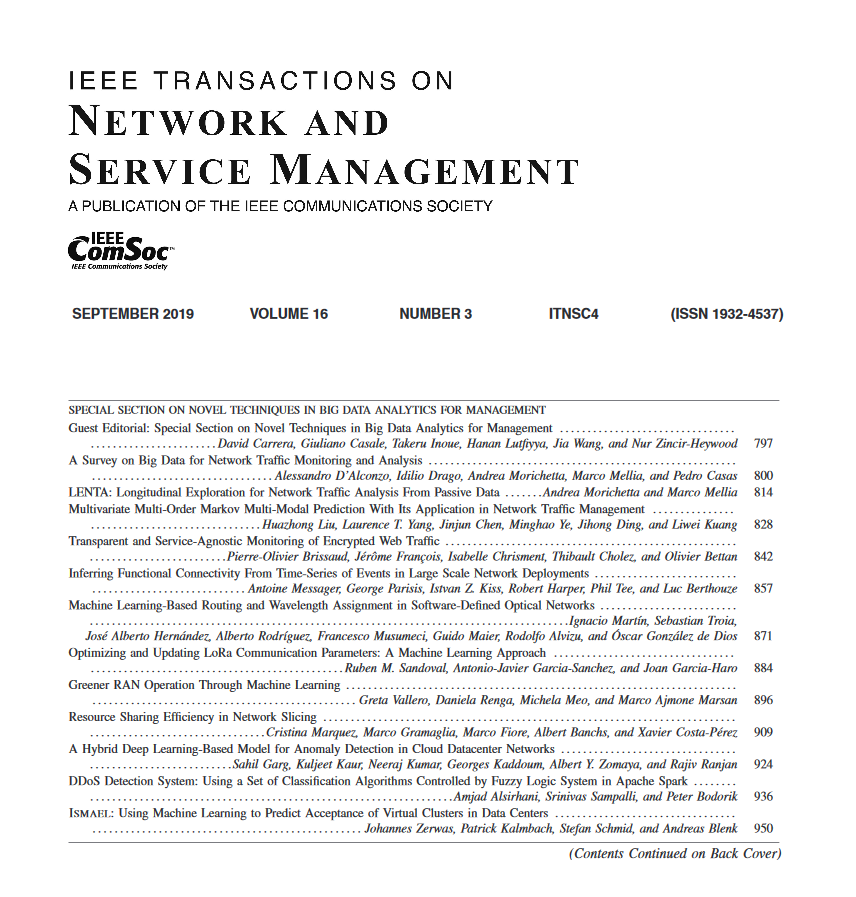

IEEE Transactions on Network and Service Management

Pub Date : 2025-02-03

DOI:10.1109/TNSM.2025.3538156

Citations: 0

Abstract

Optimization of Data and Model Transfer for Federated Learning to Manage Large-Scale Network

Recently, deep learning has been introduced to automate network management and reduce human costs. However, the amount of log data obtained from a large-scale network is huge, and conventional centralized deep learning incurs high communication and computation costs. This paper aims to reduce these costs by training deep learning models with federated learning on data generated in the network, and to deploy the models as soon as possible. In this scheme, data generated at each point in the network are transferred to servers in the network, and deep learning models are trained by federated learning among the servers. We first show that the training time depends on the transfer routes and destinations of the data and model parameters. We then introduce a method that simultaneously optimizes (1) which servers each point transfers its data to, and through which routes, and (2) through which routes the servers transfer parameters to one another. We compared the proposed method with naive methods, both numerically and experimentally, in complicated wired network environments. The proposed method reduced the total training time by 34% to 79% compared with the naive methods.
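
To make the dependence of training time on data destinations concrete, here is a minimal toy sketch in Python. It is not the paper's formulation or algorithm: the cost model, the brute-force search, and every name and number in it (`DATA_MB`, `RATE_MB_S`, `COMPUTE_MB_S`, `MODEL_MB`, `SERVER_LINK_MB_S`, `ROUNDS`) are assumptions chosen for illustration. It assumes uploads run in parallel without link contention, each point has one fixed route per server, and every federated round waits for the slowest server and then exchanges parameters once in each direction.

```python
from itertools import product

# Hypothetical toy inputs; none of these values come from the paper.
DATA_MB = {"p1": 400, "p2": 100, "p3": 300}   # data generated at each point
SERVERS = ["s1", "s2"]
# Assumed end-to-end throughput (MB/s) from each point to each server.
RATE_MB_S = {
    ("p1", "s1"): 100, ("p1", "s2"): 20,
    ("p2", "s1"): 50,  ("p2", "s2"): 50,
    ("p3", "s1"): 10,  ("p3", "s2"): 100,
}
COMPUTE_MB_S = 20        # assumed training throughput of one server
MODEL_MB = 50            # assumed size of the model parameters
SERVER_LINK_MB_S = 25    # assumed throughput between the two servers
ROUNDS = 10              # assumed number of federated rounds


def total_time(assign):
    """Toy estimate of total training time (seconds) for one assignment."""
    # One-off data upload: all points send in parallel, so the slowest dominates.
    upload = max(DATA_MB[p] / RATE_MB_S[(p, s)] for p, s in assign.items())
    # Data volume each server has to train on.
    load = {s: 0 for s in SERVERS}
    for p, s in assign.items():
        load[s] += DATA_MB[p]
    # Synchronous rounds wait for the most heavily loaded server.
    compute = max(load.values()) / COMPUTE_MB_S
    # Parameters are exchanged only if more than one server holds data.
    active = sum(1 for mb in load.values() if mb > 0)
    exchange = 2 * MODEL_MB / SERVER_LINK_MB_S if active > 1 else 0.0
    return upload + ROUNDS * (compute + exchange)


def best_assignment():
    """Exhaustively try every point-to-server assignment (toy sizes only)."""
    points = list(DATA_MB)
    candidates = (
        dict(zip(points, choice))
        for choice in product(SERVERS, repeat=len(points))
    )
    return min(candidates, key=total_time)


naive = {p: "s1" for p in DATA_MB}   # send everything to a single server
best = best_assignment()
print("naive:", naive, total_time(naive))
print("best: ", best, total_time(best))
```

In this toy setting, sending everything to one server avoids parameter exchange but pays for a slow upload and a heavily loaded server, while splitting the points balances both. Exhaustive search only works at this scale; it stands in for, and should not be read as, the optimization method the paper proposes.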

Source journal

IEEE Transactions on Network and Service Management

Computer Science-Computer Networks and Communications

CiteScore

9.30

Self-citation rate

15.10%

Articles published

325

About the journal:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed archival quality papers that advance the state-of-the-art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.