Federated Learning Under Attack: Exposing Vulnerabilities Through Data Poisoning Attacks in Computer Networks

IF 5.4

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

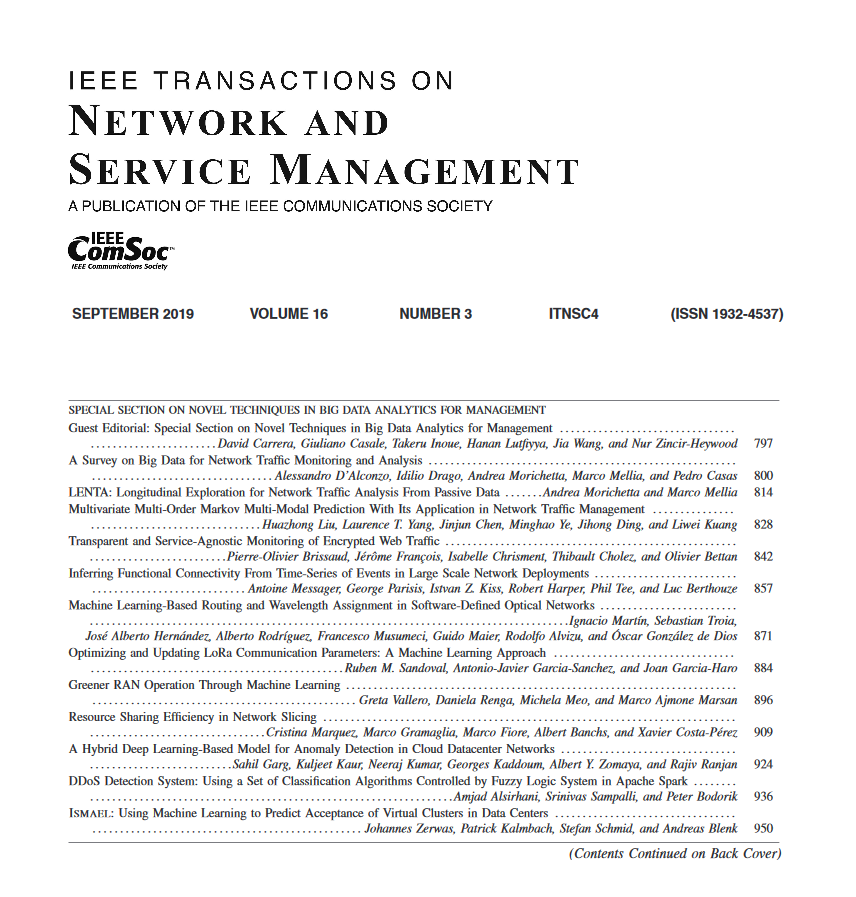

IEEE Transactions on Network and Service Management

Pub Date: 2025-01-03

DOI:10.1109/TNSM.2025.3525554

Citations: 0

Abstract


Federated Learning Under Attack: Exposing Vulnerabilities Through Data Poisoning Attacks in Computer Networks

Federated Learning is an approach that enables multiple devices to collectively train a shared model without sharing raw data, thereby preserving data privacy. However, federated learning systems are vulnerable to data-poisoning attacks during the training and updating stages. Three data-poisoning attacks—label flipping, feature poisoning, and VagueGAN—are tested on FL models across one out of ten clients using the CIC and UNSW datasets. For label flipping, we randomly modify labels of benign data; for feature poisoning, we alter highly influential features identified by the Random Forest technique; and for VagueGAN, we generate adversarial examples using Generative Adversarial Networks. Adversarial samples constitute a small portion of each dataset. In this study, we vary the percentages by which adversaries can modify datasets to observe their impact on the Client and Server sides. Experimental findings indicate that label flipping and VagueGAN attacks do not significantly affect server accuracy, as they are easily detectable by the Server. In contrast, feature poisoning attacks subtly undermine model performance while maintaining high accuracy and attack success rates, highlighting their subtlety and effectiveness. Therefore, feature poisoning attacks manipulate the server without causing a significant decrease in model accuracy, underscoring the vulnerability of federated learning systems to such sophisticated attacks. To mitigate these vulnerabilities, we explore a recent defensive approach known as Random Deep Feature Selection, which randomizes server features with varying sizes (e.g., 50 and 400) during training. This strategy has proven highly effective in minimizing the impact of such attacks, particularly on feature poisoning.
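The first two attacks in the abstract can be illustrated with a minimal, standard-library-only sketch. It is not the authors' implementation. The function names, the binary label encoding (0 = benign, 1 = attack), and the Gaussian perturbation are assumptions made for illustration, since the abstract does not say how the selected features are altered. The importance scores are assumed to be precomputed, for example by a Random Forest.

```python
import random


def flip_labels(labels, fraction, rng, benign=0, attack=1):
    """Label flipping: relabel a random fraction of benign samples as attack.

    The 0/1 encoding is an assumption for this sketch.
    """
    benign_idx = [i for i, y in enumerate(labels) if y == benign]
    n_flip = round(len(benign_idx) * fraction)
    flipped = list(labels)
    for i in rng.sample(benign_idx, n_flip):
        flipped[i] = attack
    return flipped


def poison_features(rows, importances, fraction, top_k, rng, noise=1.0):
    """Feature poisoning: perturb the top_k most important features.

    `importances` is assumed to come from a fitted Random Forest.
    Gaussian noise is a hypothetical choice of perturbation.
    """
    ranked = sorted(range(len(importances)),
                    key=lambda j: importances[j], reverse=True)
    top = ranked[:top_k]
    n_poison = round(len(rows) * fraction)
    poisoned = [list(r) for r in rows]
    for i in rng.sample(range(len(rows)), n_poison):
        for j in top:
            poisoned[i][j] += rng.gauss(0.0, noise)
    return poisoned, top


# Toy data for a single malicious client (values are made up).
rng = random.Random(0)
labels = [0] * 80 + [1] * 20
flipped = flip_labels(labels, 0.1, rng)

rows = [[float(i), 1.0, 2.0] for i in range(10)]
importances = [0.1, 0.7, 0.2]  # hypothetical importance scores
poisoned, top = poison_features(rows, importances, 0.3, 1, rng)
```

In a federated setting, only the malicious client (one of ten in the study) would apply these functions to its local data before training. Varying `fraction` corresponds to varying the percentage of data the adversary can modify.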


Source journal

IEEE Transactions on Network and Service Management

Computer Science-Computer Networks and Communications

CiteScore

9.30

Self-citation rate

15.10%

Articles published

325

About the journal:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed, archival-quality papers that advance the state of the art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.
