DPU-Enhanced Multi-Agent Actor-Critic Algorithm for Cross-Domain Resource Scheduling in Computing Power Network

Impact Factor: 4.7

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

IEEE Transactions on Network and Service Management

Pub Date: 2024-07-29

DOI:10.1109/TNSM.2024.3434997

Citations: 0

Abstract



The distribution of computing resources in the Computing Power Network (CPN) is uneven, which leads to an imbalance between resource supply and demand within individual domains and makes cross-domain resource scheduling necessary. To address this challenge, this paper presents an Improved Multi-Agent Actor-Critic (IMAAC) resource scheduling approach that leverages Data Processing Unit (DPU) offloading. First, we introduce a cross-domain resource scheduling architecture for CPN built on DPU offloading. Specifically, we delegate certain functions of the Multi-Agent Deep Reinforcement Learning (MADRL) agents to DPUs to reduce the communication costs incurred while generating cross-domain scheduling decisions. Second, we introduce a parallel experience ensemble and a multi-head attention mechanism into the Multi-Agent Actor-Critic (MAAC) framework to compress the dimensionality of the state space arising from cross-domain agent associations. Finally, we introduce a parallelized dual-policy network structure to mitigate training instability and convergence problems in the actor and critic networks. Experimental results show that, compared with the benchmark experiments, IMAAC reduces total system delay by 5.98% to 13.56%, energy consumption by 23.54% to 33.55%, and the number of discarded tasks by 41.17% to 58.88%.
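To make the attention idea in the abstract more concrete, here is a generic multi-head attention aggregation of the kind used in attention-based multi-agent critics. Each agent queries the encodings of the other agents and receives a context vector whose size is fixed by the number of heads and the head width, not by the number of agents. This is an illustrative sketch under stated assumptions, not the IMAAC implementation. The helper names (`make_heads`, `attend_to_others`), the random stand-in weights, the dimensions, and the leaky-ReLU applied to values are all hypothetical choices. The parallel experience ensemble, the dual-policy networks, and DPU offloading are not modeled.

```python
import math
import random

# Hypothetical sketch: generic multi-head attention over other agents' encodings.
# Not the IMAAC implementation; names, sizes, and initialization are assumptions.


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(scores):
    """Numerically stable softmax."""
    mx = max(scores)
    exps = [math.exp(s - mx) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def leaky_relu(v, slope=0.01):
    return [x if x > 0 else slope * x for x in v]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def make_heads(num_heads, embed_dim, head_dim, seed=0):
    """Create per-head query/key/value projections (random stand-ins for learned weights)."""
    rng = random.Random(seed)
    return [
        {name: random_matrix(head_dim, embed_dim, rng) for name in ("W_q", "W_k", "W_v")}
        for _ in range(num_heads)
    ]


def attend_to_others(i, embeddings, heads):
    """Return agent i's context vector (heads concatenated) and per-head attention weights."""
    others = [j for j in range(len(embeddings)) if j != i]
    context, weights_per_head = [], []
    for head in heads:
        q = matvec(head["W_q"], embeddings[i])
        scale = math.sqrt(len(q))
        # Scaled dot-product score between agent i's query and each other agent's key.
        scores = [
            sum(a * b for a, b in zip(q, matvec(head["W_k"], embeddings[j]))) / scale
            for j in others
        ]
        alpha = softmax(scores)
        values = [leaky_relu(matvec(head["W_v"], embeddings[j])) for j in others]
        # Weighted sum of values: one head_dim vector, whatever the number of agents.
        head_out = [sum(a * v[d] for a, v in zip(alpha, values)) for d in range(len(q))]
        context.extend(head_out)
        weights_per_head.append(alpha)
    return context, weights_per_head


if __name__ == "__main__":
    agents = [[0.2, -0.1, 0.4, 0.0], [0.5, 0.3, -0.2, 0.1], [-0.3, 0.2, 0.1, 0.6]]
    heads = make_heads(num_heads=2, embed_dim=4, head_dim=3)
    ctx, weights = attend_to_others(0, agents, heads)
    print(len(ctx))  # 6 = 2 heads x 3 dims
```

With two heads of width 3, agent 0's context has 6 entries whether it observes 2 or 20 other agents. Concatenating every other agent's state instead would grow linearly with the number of agents. This fixed output size is the sense in which attention can keep a critic's input dimensionality bounded. How IMAAC combines it with the parallel experience ensemble is described only in the full paper.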

求助全文

通过发布文献求助,成功后即可免费获取论文全文。

去求助

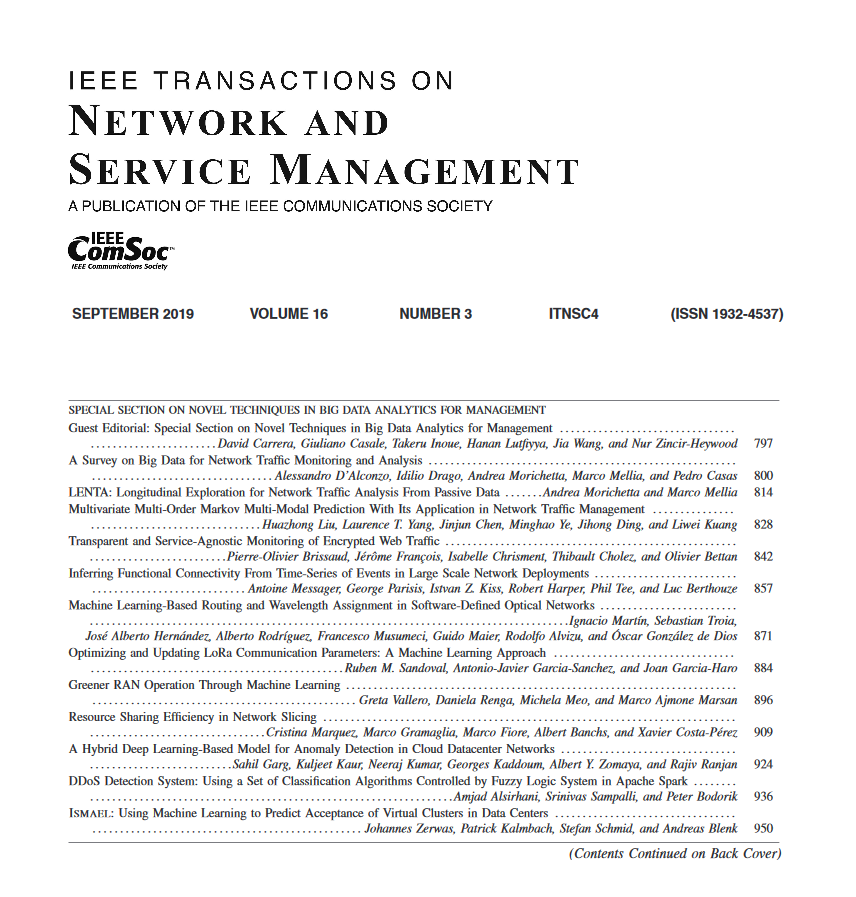

Source journal

IEEE Transactions on Network and Service Management

Computer Science-Computer Networks and Communications

CiteScore: 9.30

Self-citation rate: 15.10%

Articles published: 325

Journal description:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed archival quality papers that advance the state of the art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.
