Improving drug discovery with a hybrid deep generative model using reinforcement learning trained on a Bayesian docking approximation

Abstract

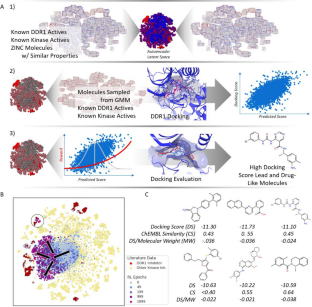

Generative approaches to molecular design have been an area of intense study in recent years as a way to produce new pharmaceuticals with desired properties. Often, though, these efforts are constrained by limited experimental activity data, resulting either in models that generate molecules with poor performance or in overfit models that produce close analogs of known molecules. In this paper, we reduce this data dependency for the generation of new chemotypes by incorporating docking scores of known and de novo molecules to expand the applicability domain of the reward function and diversify the compounds generated during reinforcement learning. Our approach employs a deep generative model initially trained using a combination of limited known drug activity and an approximate docking score provided by a second, machine-learned Bayes regression model, with final evaluation of high-scoring compounds by a full docking simulation. This strategy yields molecules with docking scores improved by 10–20% compared to molecules of similar size, while being 130× faster than a docking-only approach on a typical GPU workstation. We also show that the increased docking scores correlate with (1) docking poses whose interactions are similar to those of known inhibitors and (2) higher MM-GBSA binding energies, comparable to the energies of known DDR1 inhibitors, demonstrating that the Bayesian model contains sufficient information for the network to learn to interact efficiently with the binding pocket during reinforcement learning. This outcome shows that the combination of the learned latent molecular representation and the feature-based docking regression is sufficient for reinforcement learning to infer the relationship between the molecules and the receptor binding site, which suggests that our method can be a powerful tool for the discovery of new chemotypes with potential therapeutic applications.
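As a rough illustration of the two-stage evaluation described above (a cheap approximate score for all generated molecules, followed by full docking of only the high-scoring ones), the following minimal sketch may help. It is not the implementation used in this work: the function name `triage_then_dock`, the callables `surrogate_score` and `full_dock`, and the `top_k` cutoff are all hypothetical, and the sketch assumes the common convention that a lower (more negative) docking score indicates better predicted binding.

```python
import heapq
from typing import Callable, Iterable, List, Tuple


def triage_then_dock(
    candidates: Iterable[str],
    surrogate_score: Callable[[str], float],
    full_dock: Callable[[str], float],
    top_k: int,
) -> List[Tuple[str, float]]:
    """Rank candidates with a cheap surrogate, then dock only the best top_k.

    Assumption: lower scores are better for both scoring functions.
    """
    # Cheap pass: keep the top_k candidates with the lowest surrogate score.
    shortlist = heapq.nsmallest(top_k, candidates, key=surrogate_score)
    # Expensive pass: call the full docking function on the shortlist only.
    docked = [(smiles, full_dock(smiles)) for smiles in shortlist]
    # Return the shortlist ordered by full docking score, best first.
    return sorted(docked, key=lambda pair: pair[1])
```

In a setup like this, the expensive docking function is called `top_k` times rather than once per generated molecule, which is the kind of saving that makes an approximate score attractive in the first place.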
