MOHFL: Multi-Level One-Shot Hierarchical Federated Learning With Enhanced Model Aggregation Over Non-IID Data

Impact factor: 5.4

Zone 2 (CAS journal ranking), Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

IEEE Transactions on Network and Service Management

Publication date: 2025-04-15

DOI: 10.1109/TNSM.2025.3560629

Citations: 0

Abstract

Hierarchical federated learning (HFL) is a privacy-preserving distributed machine learning framework with a client-edge-cloud hierarchy, where multiple edge servers perform partial model aggregation to reduce costly communication with the cloud server. Nevertheless, most existing HFL methods require extensive iterative communication and public datasets, which not only increase communication overhead but also raise privacy and security concerns. Moreover, non-independent and identically distributed (non-IID) data among devices can significantly degrade the accuracy of the global model in HFL. To address these challenges, we propose a multi-level one-shot HFL framework (MOHFL), which aims to improve the performance of the global model in a single communication round. Specifically, we employ conditional variational autoencoders (CVAEs) as local models and use the aggregated decoders to generate an IID training set for the global model, thereby mitigating the negative impact of non-IID data. We improve the performance of CVAEs under different levels of data heterogeneity through a dominant class-based data selection method. Subsequently, an edge aggregation scheme based on multi-teacher knowledge distillation and contrastive learning is proposed to aggregate the knowledge from local decoders into edge decoders. Extensive experiments on four real-world datasets demonstrate that MOHFL is highly competitive against four state-of-the-art baselines under various settings.
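The abstract states that aggregated CVAE decoders are used to generate an IID training set for the global model. The sketch below illustrates only that generic sampling idea under stated assumptions; it is not the MOHFL implementation. `make_toy_decoder` is a hypothetical stand-in (a fixed random linear map over the concatenation of a latent code and a one-hot label) for a trained conditional decoder, and `generate_balanced_set` is a hypothetical helper that draws latent codes from the standard normal CVAE prior and decodes the same number of samples for every class. The result is label-balanced no matter how skewed the clients' local data were.

```python
import random
from typing import Callable, List, Tuple

# A conditional decoder maps (latent vector z, class label y) -> synthetic sample x.
Decoder = Callable[[List[float], int], List[float]]


def make_toy_decoder(latent_dim: int, num_classes: int, out_dim: int, seed: int = 0) -> Decoder:
    """Hypothetical stand-in for a trained CVAE decoder: a fixed random
    linear map applied to the concatenation [z, one_hot(y)]."""
    rng = random.Random(seed)
    in_dim = latent_dim + num_classes
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]

    def decode(z: List[float], y: int) -> List[float]:
        one_hot = [1.0 if c == y else 0.0 for c in range(num_classes)]
        inp = z + one_hot
        return [sum(w * v for w, v in zip(row, inp)) for row in weights]

    return decode


def generate_balanced_set(decoder: Decoder, latent_dim: int, num_classes: int,
                          per_class: int, seed: int = 0) -> List[Tuple[List[float], int]]:
    """Sample z ~ N(0, I) from the CVAE prior and decode an equal number of
    samples for every class, yielding a label-balanced synthetic dataset."""
    rng = random.Random(seed)
    data: List[Tuple[List[float], int]] = []
    for y in range(num_classes):
        for _ in range(per_class):
            z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
            data.append((decoder(z, y), y))
    # Shuffle so classes are interleaved when the global model is trained on the set.
    rng.shuffle(data)
    return data


decoder = make_toy_decoder(latent_dim=4, num_classes=3, out_dim=5)
train_set = generate_balanced_set(decoder, latent_dim=4, num_classes=3, per_class=10)
```

Because each class receives the same number of draws from the shared prior, the synthetic set has a uniform label distribution; in a real system the toy decoder would be replaced by the aggregated decoder, whose quality determines how closely the samples resemble real data.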

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

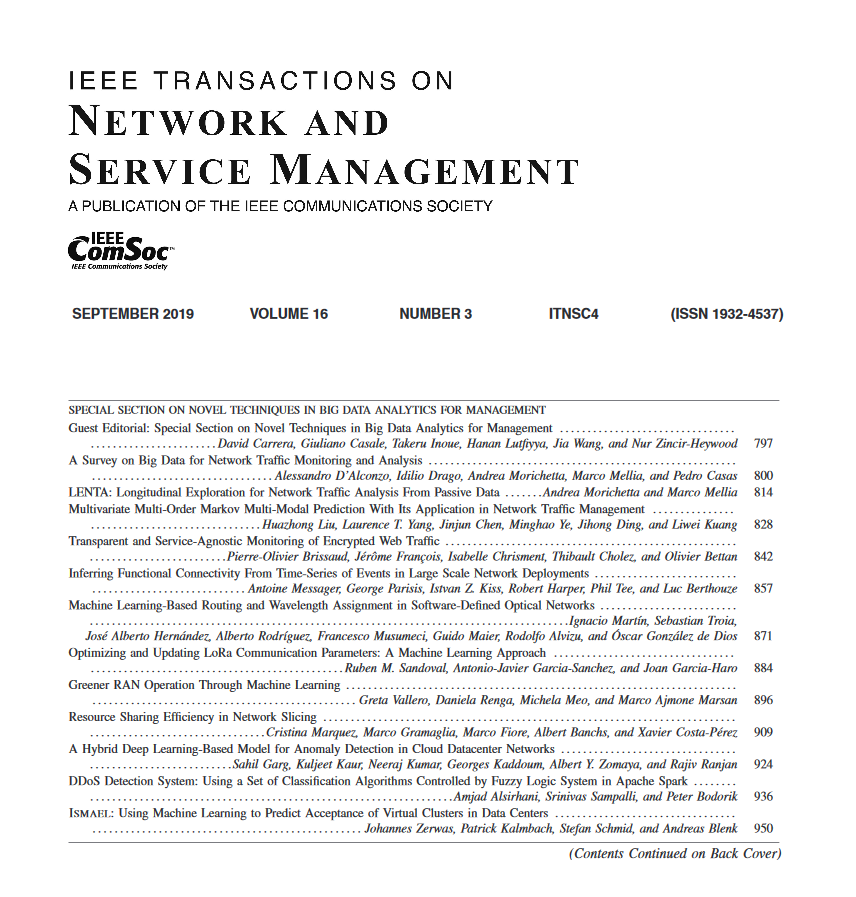

Source journal

IEEE Transactions on Network and Service Management

Computer Science - Computer Networks and Communications

CiteScore: 9.30

Self-citation rate: 15.10%

Articles published: 325

Journal description:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed, archival-quality papers that advance the state of the art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.