Optimal Placement of the Virtualized Federated Learning Aggregation Function at the Edge

IF 5.4

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

IEEE Transactions on Network and Service Management

Pub Date : 2025-03-13

DOI:10.1109/TNSM.2025.3551257

Citations: 0

Abstract

Federated Learning (FL) enables multiple devices (clients) to train a shared machine learning (ML) model on their local datasets and then send the updated models to a central server, whose task is to aggregate the locally computed updates and share the resulting global model with the clients again, in an iterative process. The population of clients may change at each round, whereas the node executing the aggregation function is typically placed in an edge domain and remains static until the end of the overall FL training process. However, the computing capabilities of the edge node hosting the aggregation function and the distance (latency) of that node from the selected clients can strongly affect the convergence rate of the FL training procedure. Moreover, the heterogeneous, time-varying capabilities of edge nodes, coupled with the dynamic client population selected at each round, call for the optimal dynamic placement of the aggregation function across the available nodes in an edge domain. In this work, we formulate an optimization problem for the placement of the FL aggregation function, which aims to select, at each round, the edge node that minimizes the overall per-round training time, encompassing the aggregation time, the local training time at the clients, and the time for exchanging the global model and the model updates. A time-efficient greedy heuristic is proposed, which is shown to closely approximate the optimal solution and to outperform the considered benchmark solutions.
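The per-round objective described in the abstract can be illustrated with a toy cost model. The following is a minimal Python sketch under assumptions that are ours, not the paper's: each selected client downloads the global model, trains locally, and uploads its update, with a fixed per-(node, client) transfer time in each direction; the round waits for the slowest client; and aggregation time is the number of updates divided by a hypothetical per-node `speed`. The names `EdgeNode`, `round_time`, and `place_aggregator` are illustrative only. Because this toy model treats each round independently, it simply evaluates every candidate node and keeps the cheapest one; it does not reproduce the authors' formulation or their greedy heuristic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeNode:
    name: str
    speed: float  # hypothetical: model updates the node can aggregate per second


def round_time(node, train_time, delay):
    """Estimated duration of one synchronous FL round if `node` aggregates.

    train_time: client -> local training time (s) in this round (assumed known)
    delay: (node name, client) -> one-way model transfer time (s) (assumed)
    """
    # Each client downloads the global model, trains, then uploads its update;
    # the round cannot finish before the slowest selected client is done.
    slowest = max(
        delay[(node.name, c)] + t + delay[(node.name, c)]
        for c, t in train_time.items()
    )
    # Assumed aggregation cost: proportional to the number of received updates.
    aggregation = len(train_time) / node.speed
    return slowest + aggregation


def place_aggregator(nodes, train_time, delay):
    """Return the candidate node with the smallest estimated round time."""
    return min(nodes, key=lambda n: round_time(n, train_time, delay))


# Example round: edge-a aggregates faster, but edge-b is closer to the clients.
nodes = [EdgeNode("edge-a", speed=10.0), EdgeNode("edge-b", speed=2.0)]
train_time = {"c1": 3.0, "c2": 5.0}
delay = {
    ("edge-a", "c1"): 0.5, ("edge-a", "c2"): 1.5,
    ("edge-b", "c1"): 0.2, ("edge-b", "c2"): 0.3,
}
best = place_aggregator(nodes, train_time, delay)
print(best.name)  # edge-b
```

Here edge-a costs 8.0 s for the slowest client plus 0.2 s of aggregation, while edge-b costs 5.6 s plus 1.0 s, so the lower-latency node wins despite its slower aggregation. Re-running `place_aggregator` each round with that round's client set and current delays mirrors the dynamic re-placement the abstract argues for, whereas a static placement fixes one node for the whole training process.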


求助全文

约1分钟内获得全文

求助全文

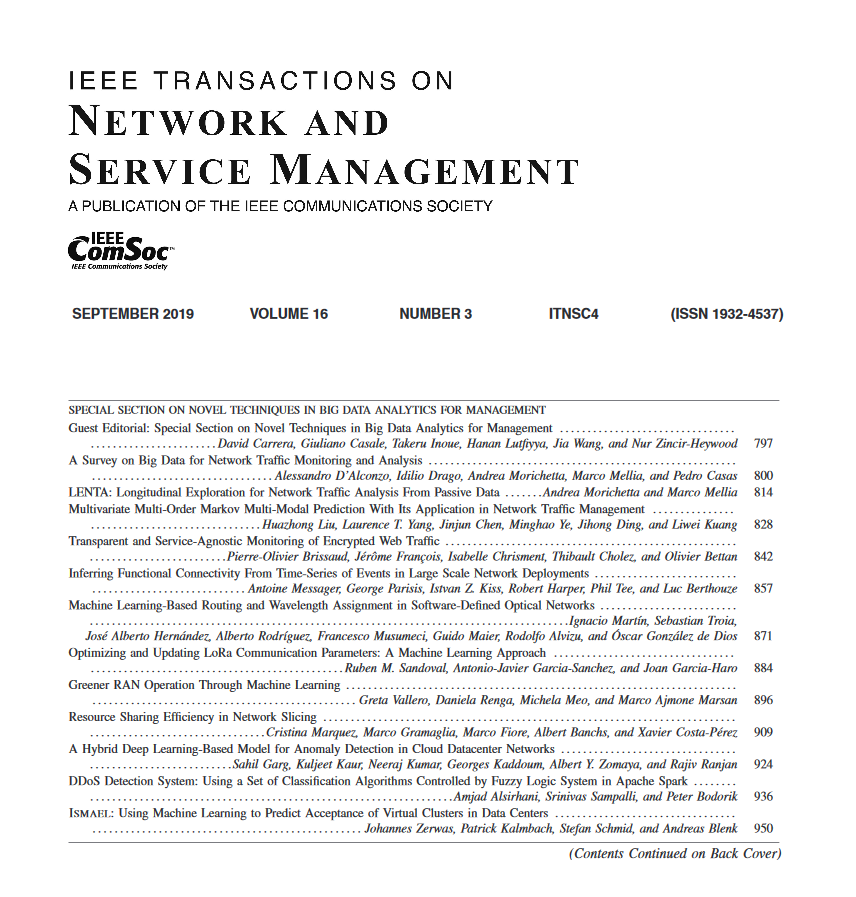

Source journal

IEEE Transactions on Network and Service Management

Computer Science-Computer Networks and Communications

CiteScore

9.30

Self-citation rate

15.10%

Articles published

325

Journal description:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed archival quality papers that advance the state-of-the-art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.
