A Reinforcement Learning Approach for D2D Spectrum Sharing in Wireless Industrial URLLC Networks

IF 4.7

CAS Zone 2, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

IEEE Transactions on Network and Service Management

Pub Date: 2024-08-16

DOI: 10.1109/TNSM.2024.3445123

Citations: 0

Abstract

Distributed Radio Resource Management (RRM) solutions have recently attracted increasing interest, especially when a large number of devices is present, as in a wireless industrial network. Self-organisation relying on distributed RRM schemes is envisioned as one of the key pillars of 5G and beyond Ultra-Reliable Low-Latency Communication (URLLC) networks, and reinforcement learning is emerging as a powerful distributed technique to facilitate it. In this paper, spectrum sharing in a Device-to-Device (D2D)-enabled wireless network is investigated, targeting URLLC applications. A distributed scheme denoted Reinforcement Learning Based Matching (RLBM), which combines reinforcement learning and matching theory, is presented with the aim of achieving autonomous device-based resource allocation. A distributed local Q-table is used to avoid global information gathering, and a stateless Q-learning approach is adopted, which reduces the need for a large state-action mapping. Simulation case studies are used to verify the performance of the presented approach in comparison with other RRM techniques. The presented RLBM approach achieves a good trade-off among throughput, complexity and signalling overhead while maintaining the target Quality of Service/Experience (QoS/QoE) requirements of the different users in the network.
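The two ingredients named in the abstract, stateless Q-learning on local Q-tables and matching theory, can be illustrated with a small Python sketch. It is not the authors' RLBM algorithm: the toy collision reward (+1 alone on a channel, -1 when another D2D pair picks the same channel), the decaying epsilon-greedy policy, the update Q(a) <- Q(a) + alpha * (r - Q(a)) with no next-state term, the hypothetical function names `learn_local_q_tables` and `deferred_acceptance`, and the rule that a channel prefers the proposer with the higher Q-value are all illustrative assumptions. There is no SINR, cellular-user, reliability or latency model here.

```python
import random


def learn_local_q_tables(num_pairs, num_channels, episodes=2000,
                         alpha=0.1, epsilon=0.2, seed=0):
    """Stateless Q-learning: each D2D pair keeps one Q-value per channel.

    Toy reward (assumption): +1 if the pair is alone on its channel,
    -1 if any other pair picked the same channel in that episode.
    """
    rng = random.Random(seed)
    # One local Q-table per pair: a single row indexed by action (channel), no state.
    q = [[0.0] * num_channels for _ in range(num_pairs)]
    for t in range(episodes):
        eps = epsilon * (1.0 - t / episodes)  # linearly decaying exploration
        picks = []
        for row in q:
            if rng.random() < eps:
                picks.append(rng.randrange(num_channels))
            else:
                best = max(row)
                # Break ties between equally valued channels at random.
                picks.append(rng.choice([k for k, v in enumerate(row) if v == best]))
        for pair, ch in enumerate(picks):
            reward = 1.0 if picks.count(ch) == 1 else -1.0
            # Stateless update (assumed form): no bootstrapped next-state term.
            q[pair][ch] += alpha * (reward - q[pair][ch])
    return q


def deferred_acceptance(q):
    """Pair-proposing Gale-Shapley matching; each channel hosts at most one pair.

    Pairs rank channels by their own Q-values; a channel keeps the proposer
    with the higher Q-value for that channel (illustrative assumption).
    """
    num_pairs, num_channels = len(q), len(q[0])
    prefs = [sorted(range(num_channels), key=lambda k: -row[k]) for row in q]
    next_choice = [0] * num_pairs
    holder = {}  # channel -> pair tentatively matched to it
    free = list(range(num_pairs))
    while free:
        pair = free.pop()
        if next_choice[pair] >= num_channels:
            continue  # pair was rejected everywhere and stays unmatched
        ch = prefs[pair][next_choice[pair]]
        next_choice[pair] += 1
        rival = holder.get(ch)
        if rival is None:
            holder[ch] = pair
        elif q[pair][ch] > q[rival][ch]:
            holder[ch] = pair
            free.append(rival)  # displaced pair proposes to its next channel
        else:
            free.append(pair)  # rejected pair proposes to its next channel
    return {pair: ch for ch, pair in holder.items()}


if __name__ == "__main__":
    tables = learn_local_q_tables(num_pairs=4, num_channels=6)
    print(deferred_acceptance(tables))  # pair index -> channel index
```

Each pair learns only from its own reward, so no global information is gathered during learning. The matching step is where conflicts are resolved: with at least as many channels as pairs, deferred acceptance always matches every pair to a distinct channel, so the final allocation is collision-free even if the Q-tables have not fully converged.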

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

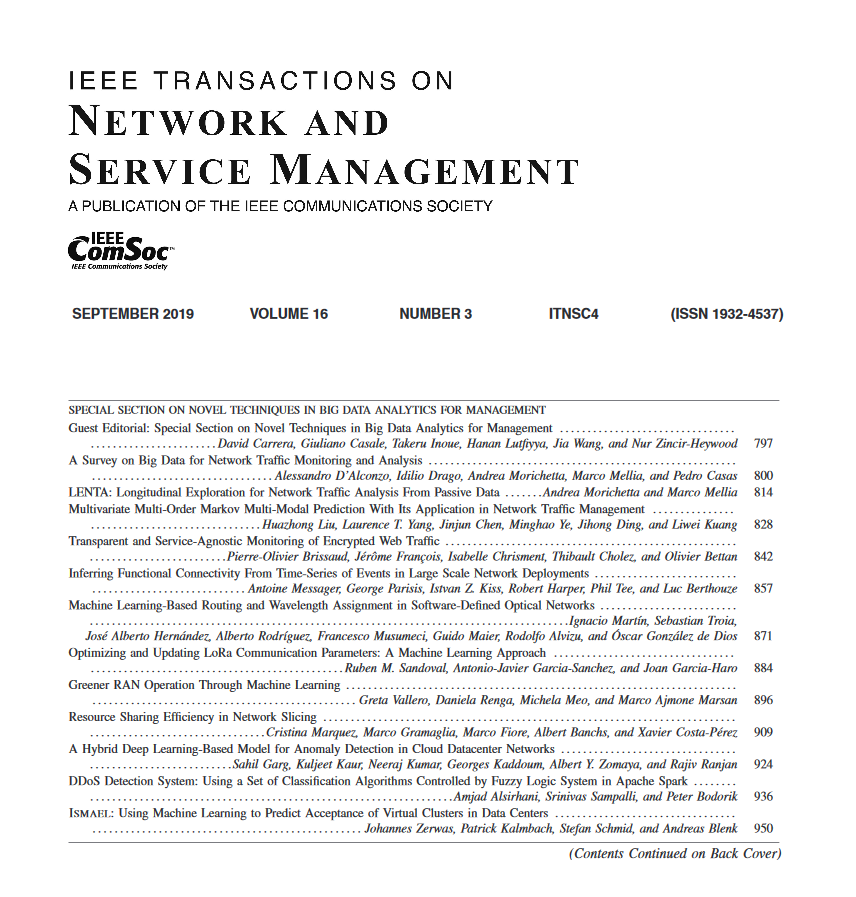

Source journal: IEEE Transactions on Network and Service Management

Subject area: Computer Science-Computer Networks and Communications

CiteScore: 9.30

Self-citation rate: 15.10%

Articles published: 325

Journal description:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed, archival-quality papers that advance the state of the art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.