A Novel Latency-Aware Resource Allocation and Offloading Strategy With Improved Prioritization and DDQN for Edge-Enabled UDNs

IF 4.7

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

IEEE Transactions on Network and Service Management

Pub Date: 2024-07-26

DOI: 10.1109/TNSM.2024.3434457

Citations: 0

Abstract

Driven by the vision of 6G, demand for diverse computation-intensive and delay-sensitive tasks continues to rise. Integrating mobile edge computing with the ultra-dense network (UDN) not only handles traffic from a large number of smart devices but also delivers substantial processing capability to users. This combined network is expected to be an effective solution for meeting latency-critical requirements and to enhance the quality of user experience. Nevertheless, when a massive number of devices offload tasks to edge servers, channel interference, network load, and the energy shortage of user devices (UDs) all increase. We therefore investigate the joint uplink and downlink resource allocation and task offloading optimization problem, with the goal of minimizing the overall task delay while sustaining UD battery life. To achieve long-term gains while making quick decisions, we propose an improved double deep Q-network (DDQN) scheme named Prioritized double deep Q-network. In this scheme, prioritized experience replay is improved by considering an experience freshness factor along with the temporal difference (TD) error, which enables fast and efficient learning. Extensive numerical results on delay and energy consumption demonstrate the efficacy of the proposed scheme. In particular, as the number of UDs varies from 30 to 180, our scheme reduces the delay by 11.86%, 26.22%, 48.56%, and 61.04% compared with the OELO scheme, the DQN scheme, LOS, and EOS, respectively.
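The abstract names the two learning mechanisms but gives no formulas. The following is a minimal Python sketch, not the authors' method: it assumes the freshness factor is an exponential decay `decay ** age` multiplied into the standard proportional prioritized-replay priority `(|δ| + ε) ** α`, and pairs that buffer with the standard Double DQN target. `Transition`, `FreshnessPrioritizedReplay`, `ddqn_target`, and all parameter values are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Transition:
    """One experience tuple (state, action, reward, next state, terminal flag)."""
    state: tuple
    action: int
    reward: float
    next_state: tuple
    done: bool


class FreshnessPrioritizedReplay:
    """Hypothetical freshness-aware prioritized replay buffer.

    Assumed priority: (|TD error| + eps) ** alpha * decay ** age,
    where age counts insertions since the transition was stored.
    """

    def __init__(self, capacity, alpha=0.6, decay=0.99, eps=1e-6):
        self.capacity = capacity
        self.alpha = alpha      # how strongly TD error shapes the priority
        self.decay = decay      # assumed per-step freshness decay in (0, 1]
        self.eps = eps          # keeps zero-error transitions sampleable
        self.buffer = []        # stored transitions, oldest first
        self.td_errors = []     # latest |TD error| for each transition
        self.birth = []         # insertion step at which each was stored
        self.step = 0           # global insertion counter

    def add(self, transition, td_error):
        if len(self.buffer) >= self.capacity:
            # Evict the oldest transition (FIFO); indices returned by
            # earlier sample() calls are invalid after this.
            self.buffer.pop(0)
            self.td_errors.pop(0)
            self.birth.pop(0)
        self.buffer.append(transition)
        self.td_errors.append(abs(td_error))
        self.birth.append(self.step)
        self.step += 1

    def priorities(self):
        # TD-error term multiplied by an exponential freshness term.
        return [
            (err + self.eps) ** self.alpha * self.decay ** (self.step - born)
            for err, born in zip(self.td_errors, self.birth)
        ]

    def sample(self, batch_size, rng):
        # Draw indices (with replacement) proportionally to priority.
        pri = self.priorities()
        total = sum(pri)
        probs = [p / total for p in pri]
        idx = rng.choices(range(len(self.buffer)), weights=probs, k=batch_size)
        return idx, [self.buffer[i] for i in idx], probs

    def is_weights(self, indices, probs, beta=0.4):
        # Importance-sampling weights offset the bias of non-uniform
        # sampling; normalized by the largest weight in the batch.
        n = len(self.buffer)
        w = [(n * probs[i]) ** (-beta) for i in indices]
        w_max = max(w)
        return [x / w_max for x in w]

    def update(self, indices, new_td_errors):
        # Refresh stored TD errors after a learning step.
        for i, err in zip(indices, new_td_errors):
            self.td_errors[i] = abs(err)


def ddqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double DQN target: the online network selects the next action,
    the target network evaluates it."""
    if done:
        return reward
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best]
```

Letting the online network choose the next action while the target network scores it is what distinguishes Double DQN from DQN; it reduces the overestimation bias of taking a single max over target-network values. Under the assumed decay, an old transition loses priority even if its TD error stays large, so sampling tilts toward recent experience, which is one plausible reading of "freshness".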


求助全文

约1分钟内获得全文

求助全文

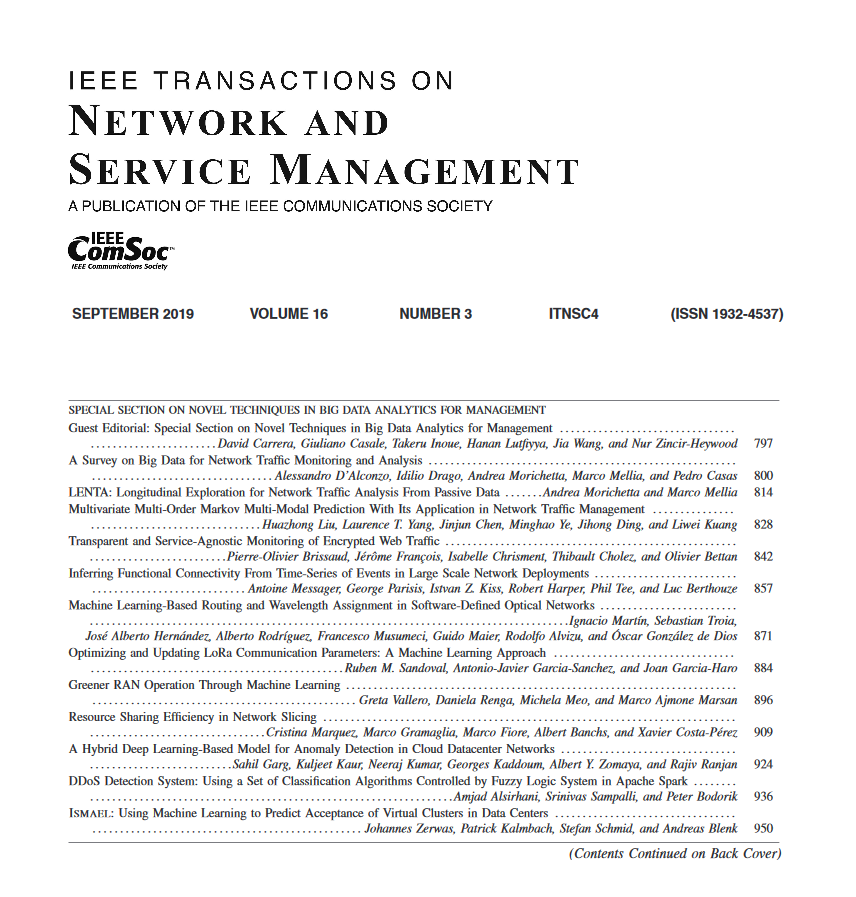

Source journal

IEEE Transactions on Network and Service Management

Computer Science-Computer Networks and Communications

CiteScore: 9.30

Self-citation rate: 15.10%

Publication volume: 325

Journal description:

IEEE Transactions on Network and Service Management will publish (online only) peer-reviewed, archival-quality papers that advance the state-of-the-art and practical applications of network and service management. Theoretical research contributions (presenting new concepts and techniques) and applied contributions (reporting on experiences and experiments with actual systems) will be encouraged. These transactions will focus on the key technical issues related to: Management Models, Architectures and Frameworks; Service Provisioning, Reliability and Quality Assurance; Management Functions; Enabling Technologies; Information and Communication Models; Policies; Applications and Case Studies; Emerging Technologies and Standards.
