RCFT: re-parameterization convolution and feature filter for object tracking

IF 5

Zone 2: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

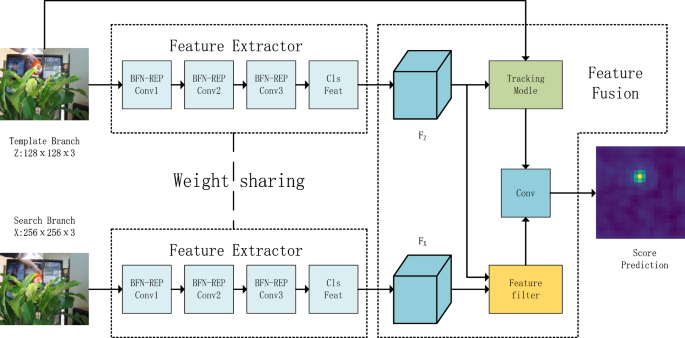

Siamese-based trackers have been widely studied for their high accuracy and speed. Feature extraction and feature fusion are the two key components of these trackers. Siamese-based trackers obtain fine local features through traditional convolution, but some important channel and global information is lost when the local features are enhanced. In the feature fusion stage, cross-correlation between the template and search-region features ignores the global spatial context and does not make full use of spatial information. To address these problems, we design a novel feature extraction sub-network based on batch-free normalization re-parameterization convolution, which scales the features in the channel dimension and enlarges the receptive field. This yields richer channel information and more powerful target features for feature fusion. Furthermore, we learn a feature fusion network (FFN) based on a feature filter. The FFN fuses the template and search-region features in a global spatial context, producing high-quality fused features by enhancing important features and filtering out redundant ones. Jointly learning the proposed feature extraction sub-network and the FFN allows both local and global information to be fully exploited. Building on these two components, we propose a novel tracking algorithm with re-parameterization convolution and feature filter, referred to as RCFT. We evaluate RCFT against recent state-of-the-art (SOTA) trackers on OTB100, VOT2018, LaSOT, GOT-10k, UAV123, and the visual-thermal dataset VOT-RGBT2019; RCFT achieves superior tracking performance at a tracking speed of 45 FPS.
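The abstract does not spell out the layer definitions, so the following is only a rough sketch of the general idea behind re-parameterization convolution, not the RCFT architecture itself. It assumes a single-channel input, a parallel 3x3 branch and 1x1 branch with no normalization layers, and shows that the two branches can be folded into one 3x3 kernel that produces the same output. All names (`conv2d_same`, `merge_branches`, `add_maps`) and all numeric values are hypothetical and chosen purely for illustration.

```python
def conv2d_same(x, k):
    """Single-channel 2D cross-correlation with zero padding ('same' size).

    x: H x W list of lists; k: odd-sized square kernel (list of lists).
    """
    h, w = len(x), len(x[0])
    r = len(k) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    # Positions outside the image count as zeros.
                    if 0 <= ii < h and 0 <= jj < w:
                        s += x[ii][jj] * k[di + r][dj + r]
            out[i][j] = s
    return out


def add_maps(a, b):
    """Element-wise sum of two equally sized feature maps."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def merge_branches(k3, k1):
    """Fold a 1x1 branch into a 3x3 kernel by adding it at the centre tap."""
    merged = [row[:] for row in k3]  # copy so the original kernel is untouched
    merged[1][1] += k1[0][0]
    return merged


# Illustrative input and kernels (assumed values, not from the paper).
x = [[1.0, 2.0, 0.0, 1.0],
     [0.0, 1.0, 3.0, 2.0],
     [2.0, 0.0, 1.0, 1.0],
     [1.0, 1.0, 0.0, 2.0]]
k3 = [[0.5, -1.0, 0.0],
      [1.0, 2.0, -0.5],
      [0.0, 0.25, 1.0]]
k1 = [[1.5]]

# Multi-branch form: two convolutions whose outputs are summed.
two_branch = add_maps(conv2d_same(x, k3), conv2d_same(x, k1))

# Re-parameterized form: one convolution with the merged kernel.
single = conv2d_same(x, merge_branches(k3, k1))

max_diff = max(abs(p - q)
               for ra, rb in zip(two_branch, single)
               for p, q in zip(ra, rb))
print("branches match after merging:", max_diff < 1e-9)
```

The equivalence follows from the linearity of convolution: a 1x1 kernel is a 3x3 kernel that is zero everywhere except the centre. In this style of design, the multi-branch form is typically used during training and the merged kernel at inference, which is one plausible reason such a tracker can keep a high frame rate.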



Source journal

Complex & Intelligent Systems

COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

CiteScore

9.60

Self-citation rate

10.30%

Articles published

297

About the journal:

Complex & Intelligent Systems aims to provide a forum for presenting and discussing novel approaches, tools and techniques meant for attaining a cross-fertilization between the broad fields of complex systems, computational simulation, and intelligent analytics and visualization. The transdisciplinary research that the journal focuses on will expand the boundaries of our understanding by investigating the principles and processes that underlie many of the most profound problems facing society today.
