Universal structural patterns in sparse recurrent neural networks

IF 5.4

Zone 1, Physics and Astrophysics

Q1 PHYSICS, MULTIDISCIPLINARY

Citations: 0

Abstract

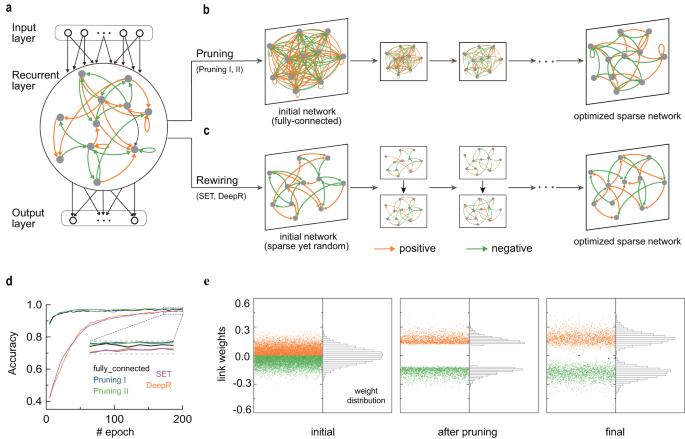

Sparse neural networks can achieve performance comparable to fully connected networks while needing less energy and memory, which makes them very promising for deploying artificial intelligence on resource-limited devices. While significant progress has been made in recent years in developing approaches to sparsify neural networks, artificial neural networks are notorious for being black boxes, and it remains an open question whether well-performing neural networks share common structural features. Here, we analyze the evolution of recurrent neural networks (RNNs) trained with different sparsification strategies and for different tasks, and explore the topological regularities of these sparsified networks. We find that the optimized sparse topologies share a universal pattern of signed motifs, that RNNs evolve towards structurally balanced configurations during sparsification, and that structural balance can improve the performance of sparse RNNs in a variety of tasks. Such structural balance patterns also emerge in other state-of-the-art models, including neural ordinary differential equation networks and continuous-time RNNs. Taken together, our findings not only reveal universal structural features accompanying optimized network sparsification but also offer an avenue for optimal architecture search.

Deep neural networks have shown remarkable success in application areas across the physical sciences and engineering. Finding networks that work efficiently with fewer connections (weight parameters) without sacrificing performance is therefore of great interest. In this work the authors show that a large number of such efficient recurrent neural networks display certain connectivity patterns in their structure.
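The abstract's central quantity, structural balance of signed motifs, can be made concrete with a small sketch. It assumes a common convention from signed-network theory: a triad is balanced when the product of its three edge signs is positive. Here that rule is applied to feed-forward triads (a→b, b→c, a→c) in a recurrent weight matrix after global magnitude pruning. The helper names `magnitude_prune` and `feedforward_balance`, the pruning rule, and the choice of motif are illustrative assumptions. They are not the authors' sparsification strategies or their exact motif definitions.

```python
import random
from itertools import permutations


def magnitude_prune(weights, density):
    """Keep the largest-|w| fraction `density` of off-diagonal entries, zero the rest.

    Plain global magnitude pruning, used only as one example of a sparsification
    strategy (an assumption, not necessarily one used in the paper). Self-connections
    on the diagonal are always zeroed here as a simplifying choice.
    """
    if not 0.0 <= density <= 1.0:
        raise ValueError("density must lie in [0, 1]")
    n = len(weights)
    entries = [(abs(weights[i][j]), i, j) for i in range(n) for j in range(n) if i != j]
    keep = int(round(density * len(entries)))
    entries.sort(reverse=True)  # largest magnitudes first; ties broken by index
    kept = {(i, j) for _, i, j in entries[:keep]}
    return [[weights[i][j] if (i, j) in kept else 0.0 for j in range(n)] for i in range(n)]


def sign(x):
    """Return +1, -1 or 0 according to the sign of x."""
    return (x > 0) - (x < 0)


def feedforward_balance(weights):
    """Count (balanced, total) signed feed-forward triads a->b, b->c, a->c.

    Hypothetical convention: edge a->b exists when weights[a][b] != 0. Transposing
    the matrix maps feed-forward triads onto feed-forward triads with the same sign
    product, so the counts do not depend on this choice. A triad is balanced when
    the product of its three edge signs is positive.
    """
    n = len(weights)
    balanced = total = 0
    for a, b, c in permutations(range(n), 3):  # distinct source, middle, sink
        s = sign(weights[a][b]) * sign(weights[b][c]) * sign(weights[a][c])
        if s != 0:  # all three edges present
            total += 1
            balanced += s > 0
    return balanced, total


if __name__ == "__main__":
    random.seed(0)
    n = 12
    # Untrained random weights: a stand-in for an RNN's recurrent matrix.
    dense = [[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    sparse = magnitude_prune(dense, density=0.3)
    bal, tot = feedforward_balance(sparse)
    print(f"{bal}/{tot} feed-forward triads balanced")
```

Tracking the balanced fraction over training and pruning, or comparing it against a sign-shuffled copy of the same topology, is one way to probe the trend the abstract describes. The random Gaussian matrix in the demo carries no trained structure, so its output says nothing about the paper's results.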

Source journal

Communications Physics

Physics and Astronomy-General Physics and Astronomy

CiteScore

8.40

Self-citation rate

3.60%

Articles published

276

Review time

13 weeks

Journal description:

Communications Physics is an open access journal from Nature Research publishing high-quality research, reviews and commentary in all areas of the physical sciences. Research papers published by the journal represent significant advances bringing new insight to a specialized area of research in physics. We also aim to provide a community forum for issues of importance to all physicists, regardless of sub-discipline.

The scope of the journal covers all areas of experimental, applied, fundamental, and interdisciplinary physical sciences. Primary research published in Communications Physics includes novel experimental results, new techniques or computational methods that may influence the work of others in the sub-discipline. We also consider submissions from adjacent research fields where the central advance of the study is of interest to physicists, for example, materials science, physical chemistry and technologies.