Decoding reveals the contents of visual working memory in early visual areas

IF 50.5

Zone 1 (CAS journal ranking), Multidisciplinary

Q1 MULTIDISCIPLINARY SCIENCES

Citations: 1093

Abstract

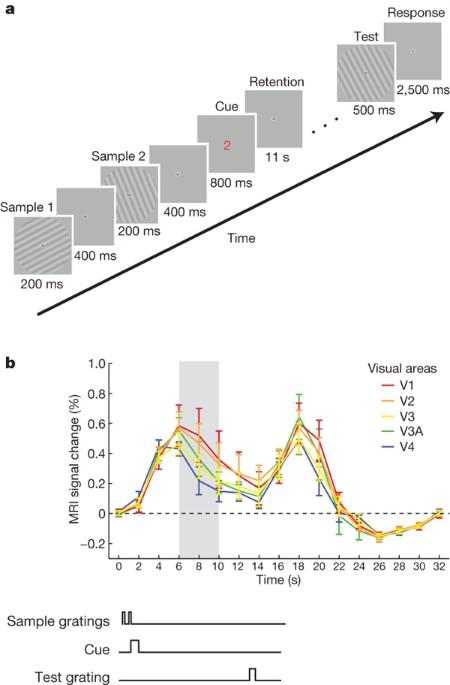



Although we can hold several different items in visual working memory, how we remember the specific details and visual features of individual objects remains a mystery. Neurons in the higher-order areas responsible for working memory seem to show no selectivity for visual detail, while the early visual areas of the cerebral cortex, although uniquely able to process incoming visual signals from the eye, were thought unable to perform higher cognitive functions such as memory. Using a new technique for decoding functional magnetic resonance imaging (fMRI) data, Stephanie Harrison and Frank Tong found that early visual areas can retain specific information about features held in working memory. Volunteers were shown two striped patterns at different orientations and asked to memorize one of them whilst being scanned by fMRI. From analysis of the scans, it was possible to predict which of the two orientations a subject was retaining in over 80% of tests. This study shows that early visual areas can retain specific information about features held in working memory even when no physical stimulus is present. With fMRI decoding methods, visual features could be predicted from early visual area activity with a high degree of accuracy.

Visual working memory provides an essential link between perception and higher cognitive functions, allowing for the active maintenance of information about stimuli no longer in view [1,2]. Research suggests that sustained activity in higher-order prefrontal, parietal, inferotemporal and lateral occipital areas supports visual maintenance [3–11], and may account for the limited capacity of working memory to hold up to 3–4 items [9–11].
Because higher-order areas lack the visual selectivity of early sensory areas, it has remained unclear how observers can remember specific visual features, such as the precise orientation of a grating, with minimal decay in performance over delays of many seconds [12]. One proposal is that sensory areas serve to maintain fine-tuned feature information [13], but early visual areas show little to no sustained activity over prolonged delays [14–16]. Here we show that orientations held in working memory can be decoded from activity patterns in the human visual cortex, even when overall levels of activity are low. Using functional magnetic resonance imaging and pattern classification methods, we found that activity patterns in visual areas V1–V4 could predict which of two oriented gratings was held in memory with mean accuracy levels upwards of 80%, even in participants whose activity fell to baseline levels after a prolonged delay. These orientation-selective activity patterns were sustained throughout the delay period, were evident in individual visual areas, and were similar to the responses evoked by unattended, task-irrelevant gratings. Our results demonstrate that early visual areas can retain specific information about visual features held in working memory over periods of many seconds when no physical stimulus is present.
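
The decoding logic summarized above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not the authors' analysis: the abstract names only "pattern classification methods", so the correlation-based nearest-centroid classifier, the simulated voxel data, every parameter (50 voxels, gains of 0.4 and 0.8, unit Gaussian noise), and all helper names are hypothetical choices made for illustration. Each simulated voxel carries a weak orientation bias that sums to zero across voxels, so mean activity is the same for both orientations and sits near baseline, yet the spatial pattern still distinguishes them. The final step fits centroids on simulated "unattended grating" trials and tests them on "memory" trials, mirroring the kind of generalization the abstract describes; because both conditions share the same bias by construction here, it demonstrates the procedure rather than any empirical result.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def centroid(patterns):
    """Voxel-wise mean of a list of equal-length patterns."""
    return [sum(col) / len(patterns) for col in zip(*patterns)]


def fit_centroids(trials):
    """Class centroids for labels 'A' and 'B' from (label, pattern) trials."""
    return {
        lab: centroid([p for trial_lab, p in trials if trial_lab == lab])
        for lab in ("A", "B")
    }


def classify(pattern, centroids):
    """Return the label whose centroid correlates best with the pattern."""
    return max(centroids, key=lambda lab: pearson(pattern, centroids[lab]))


def simulate_trials(bias, gain, n_trials, noise_sd, rng):
    """Hypothetical trials: 'A' adds +gain*bias, 'B' adds -gain*bias, plus Gaussian noise."""
    trials = []
    for _ in range(n_trials):
        for lab, sign in (("A", 1.0), ("B", -1.0)):
            pattern = [sign * gain * b + rng.gauss(0.0, noise_sd) for b in bias]
            trials.append((lab, pattern))
    return trials


def leave_one_out_accuracy(trials):
    """Hold out each trial, fit centroids on the rest, and score the held-out trial."""
    correct = 0
    for i, (lab, pattern) in enumerate(trials):
        centroids = fit_centroids(trials[:i] + trials[i + 1:])
        correct += classify(pattern, centroids) == lab
    return correct / len(trials)


def cross_decode_accuracy(train_trials, test_trials):
    """Fit centroids on one condition and score every trial of another condition."""
    centroids = fit_centroids(train_trials)
    hits = sum(classify(p, centroids) == lab for lab, p in test_trials)
    return hits / len(test_trials)


rng = random.Random(0)
n_voxels, n_trials = 50, 20
# Hypothetical orientation bias: even voxels prefer A, odd voxels prefer B.
# The bias sums to zero, so mean activity is the same for both orientations.
bias = [1.0 if v % 2 == 0 else -1.0 for v in range(n_voxels)]

memory = simulate_trials(bias, gain=0.4, n_trials=n_trials, noise_sd=1.0, rng=rng)
unattended = simulate_trials(bias, gain=0.8, n_trials=n_trials, noise_sd=1.0, rng=rng)

mean_a = sum(sum(p) for lab, p in memory if lab == "A") / (n_trials * n_voxels)
mean_b = sum(sum(p) for lab, p in memory if lab == "B") / (n_trials * n_voxels)
loo_acc = leave_one_out_accuracy(memory)
cross_acc = cross_decode_accuracy(unattended, memory)

print(f"mean activity: A={mean_a:.3f}, B={mean_b:.3f}")
print(f"leave-one-out accuracy: {loo_acc:.2f}")
print(f"train on unattended, test on memory: {cross_acc:.2f}")
```

Because Pearson correlation subtracts each pattern's mean and normalizes its scale, this classifier ignores the overall activity level and responds only to the spatial pattern across voxels, which is why it can succeed even when the mean response for both orientations is at baseline.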


Source journal

Nature

Multidisciplinary

CiteScore

90.00

Self-citation rate

1.20%

Articles published

3652

Review time

3 months

Journal description:

Nature is a prestigious international journal that publishes peer-reviewed research across a wide range of scientific and technological fields. Articles are selected on criteria such as originality, importance, interdisciplinary relevance, timeliness, accessibility, elegance, and surprising conclusions. In addition to showcasing significant scientific advances, Nature delivers rapid, authoritative, insightful news and interpretation of current and upcoming trends affecting science, scientists, and the broader public. The journal serves a dual purpose: first, to promptly share noteworthy scientific advances and foster discussion among scientists, and second, to ensure the swift global dissemination of scientific results, emphasizing their significance for knowledge, culture, and daily life.
