An improved algorithm for full-mouth lesion detection based on YOLOv8

IF 2.2

Zone 4, Computer Science

Q2 COMPUTER SCIENCE, SOFTWARE ENGINEERING

Citations: 0

Abstract

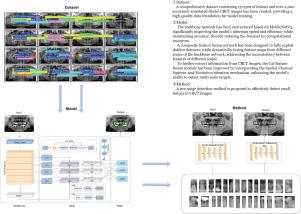

In oral Cone Beam Computed Tomography (CBCT) imaging, tiny lesions are difficult to detect and detection accuracy is low, while existing detection models are relatively complex. To address this, this paper presents a dual-stage YOLO detection method that improves on YOLOv8. Specifically, we first reconstruct the backbone network based on MobileNetV3 to improve computational speed and efficiency. Second, we improve detection accuracy from three aspects: we design a composite feature fusion network to strengthen the model's feature extraction capability, addressing the drop in small-lesion detection accuracy caused by the loss of shallow information during fusion; we further combine spatial and channel information to design the C2f-SCSA module, which extracts lesion information in greater depth. To tackle the limited lesion types and insufficient samples in existing CBCT image collections, our team collaborated with a professional dental hospital to establish a high-quality dataset containing 15 types of lesions and over 2000 accurately labeled oral CBCT images, providing solid data support for model training. Experimental results indicate that, compared with the original algorithm, the improved method raises accuracy by 3.5 percentage points, recall by 4.7 percentage points, and mean Average Precision (mAP) by 3.3 percentage points, with a computational load of only 7.6 GFLOPs. This demonstrates a significant advantage for intelligent diagnosis of full-mouth lesions: accuracy improves while computational load is reduced.
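To make the reported detection metrics concrete, the following is a minimal sketch of how precision and recall are commonly computed for bounding-box detectors by matching predictions to ground-truth boxes on Intersection over Union (IoU). It is not the authors' evaluation code: the function names, the example boxes, and the IoU threshold of 0.5 are all assumptions chosen for illustration, and the abstract does not state which threshold or matching rule was used.

```python
from typing import List, Tuple

# A box is (x1, y1, x2, y2) in pixel coordinates (hypothetical convention).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    preds: List[Box], truths: List[Box], iou_thr: float = 0.5
) -> Tuple[float, float]:
    """Greedy one-to-one matching; preds are assumed sorted by confidence."""
    matched = set()  # indices of ground-truth boxes already claimed
    tp = 0
    for p in preds:
        best_j, best_iou = -1, 0.0
        for j, t in enumerate(truths):
            if j in matched:
                continue
            v = iou(p, t)
            if v > best_iou:
                best_j, best_iou = j, v
        if best_j >= 0 and best_iou >= iou_thr:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp   # predictions with no matching lesion
    fn = len(truths) - tp  # lesions that were missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical data: two labeled lesions, two predictions.
    truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(1, 1, 10, 10), (50, 50, 60, 60)]
    print(precision_recall(preds, truths))
```

A gain stated in percentage points is an absolute difference between two such ratios (for example, a recall of 0.800 rising to 0.847 is 4.7 percentage points), not a relative change.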


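Source journal

Graphical Models

Engineering & Technology - Computer Science: Software Engineering

CiteScore

3.60

Self-citation rate

5.90%

Publication volume

15

Review time

47 days

Journal description: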

Graphical Models is recognized internationally as a highly rated, top tier journal and is focused on the creation, geometric processing, animation, and visualization of graphical models and on their applications in engineering, science, culture, and entertainment. GMOD provides its readers with thoroughly reviewed and carefully selected papers that disseminate exciting innovations, that teach rigorous theoretical foundations, that propose robust and efficient solutions, or that describe ambitious systems or applications in a variety of topics.

We invite papers in five categories: research (contributions of novel theoretical or practical approaches or solutions), survey (opinionated views of the state of the art and challenges in a specific topic), system (the architecture and implementation details of an innovative architecture for a complete system that supports model/animation design, acquisition, analysis, visualization…), application (description of a novel application of known techniques and evaluation of its impact), or lecture (an elegant and inspiring perspective on previously published results that clarifies them and teaches them in a new way).

GMOD offers its authors an accelerated review, feedback from experts in the field, immediate online publication of accepted papers, no restriction on color and length (when justified by the content) in the online version, and a broad promotion of published papers. A prestigious group of editors selected from among the premier international researchers in their fields oversees the review process.
