Bilateral global semantic enhancement for multimedia recommendation

IF 15.5

Zone 1 (Computer Science)

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

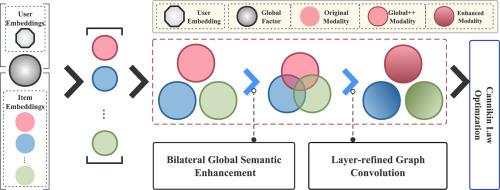


LOBSTER: Bilateral global semantic enhancement for multimedia recommendation

Multimedia information floods the Internet, subtly influencing human society. In rapidly developing recommender systems, incorporating multimedia information is a popular way to alleviate the data sparsity problem. However, many studies show that multimodal information can introduce cross-modality noise in some cases. A feasible way to reduce this noise is to enhance the information that the modalities have in common. Recent work enhances modality-common information among users (via user-user graphs) or items (via item-item graphs) using extra homogeneous graphs. However, these additional homogeneous graph structures inevitably bring huge computational costs. To better extract common information among modalities while reducing computational costs, we propose biLateral glOBal SemanTic Enhancement for multimedia Recommendation, called LOBSTER. Specifically, LOBSTER constructs two global semantic spaces for user and item representations and enhances global/common semantic features on both the user and item sides through additional learnable representations shared across multiple modalities. LOBSTER further incorporates a layer-refined Graph Convolutional Network (GCN) and a dynamic optimization to alleviate the over-smoothing problem and to adjust attention levels for different modalities. Extensive experiments on three real-world datasets demonstrate that LOBSTER achieves competitive or superior performance compared to models incorporating homogeneous graphs, while providing an average 2.45× speedup and a 60.26% reduction in memory usage. Our code is available at https://github.com/Jinfeng-Xu/LOBSTER.
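
The abstract does not spell out how the shared learnable representations are combined with the modality-specific ones. As a rough illustration only, the sketch below assumes the simplest possible reading: one shared table per side (users, items) is added element-wise to every modality-specific table. The function names, the modality names `visual` and `textual`, the toy sizes, and the additive combination are all assumptions made for this example and are not taken from the LOBSTER code.

```python
import random


def init_table(rows, dim, rng):
    """Small random table standing in for a learnable embedding matrix."""
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(rows)]


def add_shared(modality_tables, shared_table):
    """Add the same shared table to every modality-specific table.

    Assumption: element-wise addition. The paper may combine them differently.
    """
    return {
        name: [
            [x + s for x, s in zip(row, shared_row)]
            for row, shared_row in zip(table, shared_table)
        ]
        for name, table in modality_tables.items()
    }


rng = random.Random(0)
num_users, num_items, dim = 4, 6, 8  # toy sizes, not from the paper

# Hypothetical modality-specific representations for each side.
modalities = ("visual", "textual")
user_tables = {m: init_table(num_users, dim, rng) for m in modalities}
item_tables = {m: init_table(num_items, dim, rng) for m in modalities}

# One shared table per side: the "bilateral" part of this sketch.
user_shared = init_table(num_users, dim, rng)
item_shared = init_table(num_items, dim, rng)

users_enhanced = add_shared(user_tables, user_shared)
items_enhanced = add_shared(item_tables, item_shared)

# Extra values stored by the shared tables in this sketch.
extra_values = (num_users + num_items) * dim
```

In this sketch the only additional storage is `(num_users + num_items) * dim` values, with no user-user or item-item graph to build or propagate over. Whether that matches the source of the reported speedup and memory savings is not something the abstract confirms.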


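Source journal

Information Fusion

Engineering & Technology - Computer Science: Theory & Methods

CiteScore

33.20

Self-citation rate

4.30%

Articles published

161

Review time

7.9 months

Journal description: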

Information Fusion serves as a central platform for showcasing advancements in multi-sensor, multi-source, multi-process information fusion, fostering collaboration among diverse disciplines driving its progress. It is the leading outlet for sharing research and development in this field, focusing on architectures, algorithms, and applications. Papers dealing with fundamental theoretical analyses as well as those demonstrating their application to real-world problems will be welcome.
