Examining the attribution of gender and the perception of emotions in virtual humans

IF 2.8

Zone 4: Computer Science

Q2 COMPUTER SCIENCE, SOFTWARE ENGINEERING

Citations: 0

Abstract

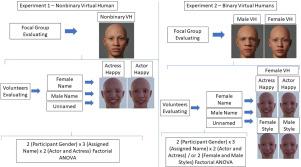

Virtual Humans (VHs) are becoming increasingly realistic, raising questions about how users perceive their gender and emotions. In this study, we investigate how textually assigned gender and visual facial features influence both gender attribution and emotion recognition in VHs. Two experiments were conducted. In the first, participants evaluated a nonbinary VH animated with expressions performed by both male and female actors. In the second part, participants assessed binary male and female VHs animated by either real actors or data-driven facial styles. Results show that users often rely on textual gender cues and facial features to assign gender to VHs. Emotion recognition was more accurate when expressions were performed by actresses or derived from facial styles, particularly in nonbinary models. Notably, participants more consistently attributed gender according to textual cues when the VH was visually androgynous, suggesting that the absence of strong gendered facial markers increases the reliance on textual information. These findings offer insights for designing more inclusive and perceptually coherent virtual agents.

Source journal

Computers & Graphics-Uk

Engineering & Technology - Computer Science: Software Engineering

CiteScore

5.30

Self-citation rate

12.00%

Articles published

173

Review time

38 days

Journal description:

Computers & Graphics is dedicated to disseminating information on research and applications of computer graphics (CG) techniques. The journal encourages articles on:

1. Research and applications of interactive computer graphics. We are particularly interested in novel interaction techniques and applications of CG to problem domains.

2. State-of-the-art papers on late-breaking, cutting-edge research on CG.

3. Information on innovative uses of graphics principles and technologies.

4. Tutorial papers on both teaching CG principles and innovative uses of CG in education.
