SwinEIF: Explicit and implicit Swin Transformer fusion for infrared and visible images

IF 3.4

Zone 3: Physics and Astrophysics

Q2 INSTRUMENTS & INSTRUMENTATION

Citations: 0

Abstract

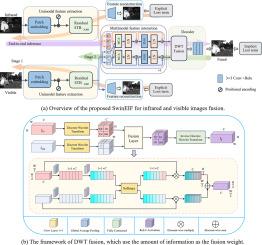

SwinEIF: Explicit and implicit Swin Transformer fusion for infrared and visible images

Infrared and visible image fusion aims to integrate thermal target information from infrared images with fine texture details from visible images, enabling comprehensive scene perception in complex environments. Existing explicit methods rely on hand-crafted rules that struggle with cross-modal discrepancies, while implicit end-to-end models require large paired datasets that are often unavailable. To address these challenges, we propose SwinEIF, a Swin Transformer-based fusion framework that combines the Explicit and Implicit paradigms for infrared-visible image Fusion. The framework pairs an explicit unimodal feature extraction branch, which learns modality-specific representations through the Swin Transformer's hierarchical self-attention, with an implicit multi-modal feature interaction branch, which performs adaptive feature fusion via cross-attention between modalities. A Discrete Wavelet Transform (DWT)-based fusion decoder then fuses features from different frequency bands, using the content of the source images as fusion weights to generate the fused output end to end. Training proceeds in two stages. In the first stage, drawing on the strengths of the explicit paradigm, the unimodal feature extraction branch is trained on large-scale, unaligned infrared and visible images, so that the network captures diverse patterns and comprehensively extracts global information. In the second stage, a smaller, aligned infrared-visible image dataset is used to fine-tune the multi-modal feature interaction branch and the DWT-based fusion decoder to ensure high-quality fusion outputs. Experiments on multiple infrared-visible image datasets show that SwinEIF outperforms state-of-the-art fusion methods in both subjective visual quality and objective evaluation metrics, and that it generalizes well.
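The two fusion mechanisms named in the abstract, cross-attention between modalities and DWT-based band fusion with content-derived weights, can be illustrated with a minimal NumPy sketch. This is not the SwinEIF implementation: the function names, the single-level Haar wavelet, the magnitude-based weighting rule, and the projection-free single-head attention are all assumptions made for illustration, since the abstract does not specify these details.

```python
import numpy as np

def cross_attention(q_tokens, kv_tokens):
    """Single-head scaled dot-product attention (no learned projections).
    Queries come from one modality, keys and values from the other."""
    d = q_tokens.shape[-1]
    scores = q_tokens @ kv_tokens.T / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=-1, keepdims=True)         # row-wise softmax
    return attn @ kv_tokens, attn

def haar_dwt2(x):
    """Single-level 2-D Haar DWT; x must have even height and width.
    Returns one approximation band and three detail bands."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2.0
    lh = (a - b + c - d) / 2.0
    hl = (a + b - c - d) / 2.0
    hh = (a - b - c + d) / 2.0
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=np.float64)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

def content_weight(p, q, eps=1e-8):
    """Assumed weighting rule: the relative coefficient magnitude of p
    versus q, so the stronger source dominates at each position."""
    mp, mq = np.abs(p), np.abs(q)
    return (mp + eps) / (mp + mq + 2 * eps)

def fuse_dwt(ir, vis):
    """Fuse two same-sized images band by band in the wavelet domain."""
    bands_ir = haar_dwt2(ir.astype(np.float64))
    bands_vis = haar_dwt2(vis.astype(np.float64))
    fused = []
    for p, q in zip(bands_ir, bands_vis):
        w = content_weight(p, q)
        fused.append(w * p + (1.0 - w) * q)
    return haar_idwt2(*fused)
```

In a learned model the attention inputs would be projected feature tokens from each branch and the weights would be produced by the network, so the hand-written rule above only stands in for that step.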

Source journal

CiteScore: 5.70

Self-citation rate: 12.10%

Articles published: 400

Review time: 67 days

About the journal:

The Journal covers the entire field of infrared physics and technology: theory, experiment, application, devices and instrumentation. "Infrared" is defined as covering the near, mid and far infrared (terahertz) regions from 0.75 µm (750 nm) to 1 mm (300 GHz). Submissions in the 300 GHz to 100 GHz region may be accepted at the editors' discretion if their content is relevant to shorter wavelengths. Submissions must be primarily concerned with and directly relevant to this spectral region.

Its core topics can be summarized as the generation, propagation and detection of infrared radiation; the associated optics, materials and devices; and its use in all fields of science, industry, engineering and medicine.

Infrared techniques occur in many different fields, notably spectroscopy and interferometry; material characterization and processing; atmospheric physics, astronomy and space research. Scientific aspects include lasers, quantum optics, quantum electronics, image processing and semiconductor physics. Some important applications are medical diagnostics and treatment, industrial inspection and environmental monitoring.
