A progressive attention network with transformer for multi-label image recognition

IF 7.6

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

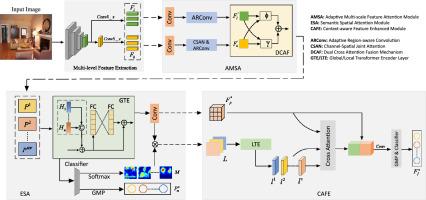



Recent research typically improves multi-label image recognition performance by constructing higher-order pairwise label correlations. However, these methods lack the ability to learn multi-scale features effectively, which makes small-scale objects difficult to distinguish. Moreover, most current attention-based methods focus on capturing locally salient features and may therefore ignore many useful non-salient features. To address these issues, we propose a Transformer-based Progressive Attention Network (TPANet) for multi-label image recognition. Specifically, we first design a new adaptive multi-scale feature attention (AMSA) module to learn cross-scale features from multi-level features. Then, to mine a variety of useful object features, we introduce a transformer encoder to construct a semantic spatial attention (ESA) module and also propose a context-aware feature enhanced (CAFE) module. The ESA module discovers complete object regions and captures discriminative features, while the CAFE module leverages object-local features to enhance pixel-level global features. The proposed TPANet model generates more accurate object labels on three popular benchmark datasets (i.e., MS-COCO 2014, Pascal VOC 2007 and Visual Genome) and is competitive with state-of-the-art models (e.g., SST and FL-Tran).
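The abstract mentions a transformer encoder and multi-label outputs without giving implementation details. As rough orientation only, the sketch below shows two generic textbook building blocks in plain Python: single-head scaled dot-product self-attention over flattened spatial feature tokens, and independent per-label sigmoid thresholding. It is not TPANet's AMSA, ESA or CAFE module; the function names, the 0.5 threshold and the toy inputs are illustrative assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Generic single-head scaled dot-product self-attention (not TPANet's ESA).

    `tokens` has one d-dimensional feature vector per spatial position, e.g. the
    H*W positions of a flattened feature map. Each output row is a softmax-weighted
    mix of the value vectors of ALL positions.
    """
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    scale = math.sqrt(len(k[0]))  # square root of the key dimension
    scores = matmul(q, transpose(k))  # (N x N) position-to-position scores
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)


def sigmoid(z: float) -> float:
    """Overflow-safe logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def multilabel_predict(logits: List[float], threshold: float = 0.5) -> List[int]:
    """Return indices of labels whose independent sigmoid score reaches `threshold`."""
    return [i for i, z in enumerate(logits) if sigmoid(z) >= threshold]


if __name__ == "__main__":
    # Hypothetical toy input: a flattened 2x2 feature map with 2 channels.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    out = self_attention(tokens, identity, identity, identity)
    print(len(out), len(out[0]))  # 4 2
    # Hypothetical logits for three labels; labels 0 and 2 pass the threshold.
    print(multilabel_predict([2.0, -1.0, 0.3]))  # [0, 2]
```

Self-attention lets every spatial position draw on every other position, and per-label sigmoids (rather than a softmax over classes) allow several labels to be active for one image. A real model would use learned projections, multiple heads and a deep-learning framework.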


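Source journal: Pattern Recognition

Category: Engineering & Technology / Engineering, Electrical & Electronic

CiteScore: 14.40

Self-citation rate: 16.20%

Articles published: 683

Review time: 5.6 months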

About the journal:

The field of Pattern Recognition is both mature and rapidly evolving, playing a crucial role in various related fields such as computer vision, image processing, text analysis, and neural networks. It closely intersects with machine learning and is being applied in emerging areas like biometrics, bioinformatics, multimedia data analysis, and data science. The journal Pattern Recognition, established half a century ago during the early days of computer science, has since grown significantly in scope and influence.
