Summary statistics of learning link changing neural representations to behavior.

Impact factor: 3

Zone 3, Medicine

Q2 Neurosciences

Frontiers in Neural Circuits

Pub Date : 2025-08-29

eCollection Date: 2025-01-01

DOI:10.3389/fncir.2025.1618351

Citations: 0

Abstract

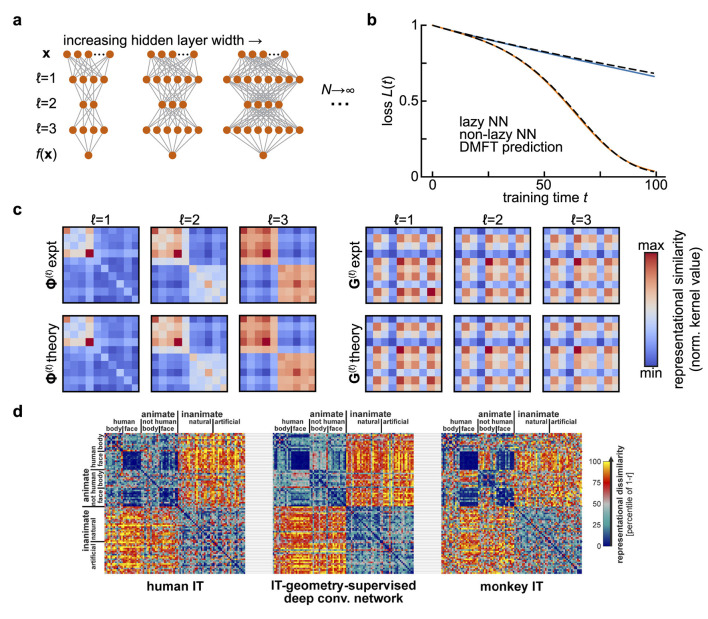

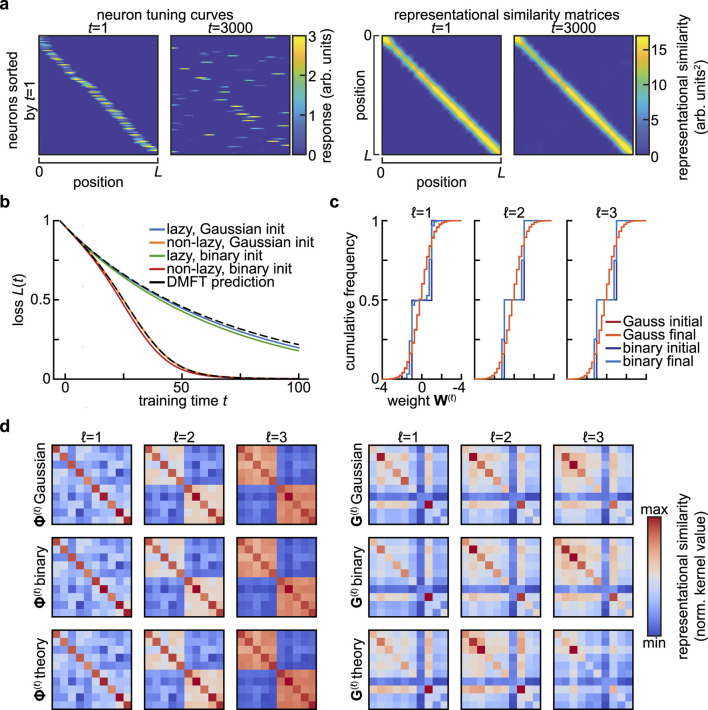


Summary statistics of learning link changing neural representations to behavior.

How can we make sense of large-scale recordings of neural activity across learning? Theories of neural network learning with their origins in statistical physics offer a potential answer: for a given task, there is often a small set of summary statistics that are sufficient to predict performance as the network learns. Here, we review recent advances in how summary statistics can be used to build theoretical understanding of neural network learning. We then argue that this perspective can inform the analysis of neural data, enabling better understanding of learning in biological and artificial neural networks.


Source journal

Frontiers in Neural Circuits

Neurosciences

CiteScore

6.00

Self-citation rate

5.70%

Articles published

135

Review time

4-8 weeks

Journal description:

Frontiers in Neural Circuits publishes rigorously peer-reviewed research on the emergent properties of neural circuits, the elementary modules of the brain. Specialty Chief Editors Takao K. Hensch and Edward Ruthazer, at Harvard University and McGill University respectively, are supported by an outstanding Editorial Board of international experts. This multidisciplinary open-access journal is at the forefront of disseminating and communicating scientific knowledge and impactful discoveries to researchers, academics and the public worldwide.

Frontiers in Neural Circuits launched in 2011 with great success and remains a "central watering hole" for research in neural circuits, serving the community worldwide to share data, ideas and inspiration. Articles revealing the anatomy, physiology, development or function of any neural circuitry in any species (from sponges to humans) are welcome. Our common thread seeks the computational strategies used by different circuits to link their structure with function (perceptual, motor, or internal), the general rules by which they operate, and how their particular designs lead to the emergence of complex properties and behaviors. Submissions focused on synaptic, cellular and connectivity principles in neural microcircuits using multidisciplinary approaches, especially newer molecular, developmental and genetic tools, are encouraged. Studies with an evolutionary perspective to better understand how circuit design and capabilities evolved to produce progressively more complex properties and behaviors are especially welcome. The journal is further interested in research revealing how plasticity shapes the structural and functional architecture of neural circuits.
