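Stereoelectroencephalography reveals neural signatures of multisensory integration in the human superior temporal sulcus during audiovisual speech perception.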

IF 4

Zone 2 (Medicine)

Q1 NEUROSCIENCES

Citations: 0

Abstract


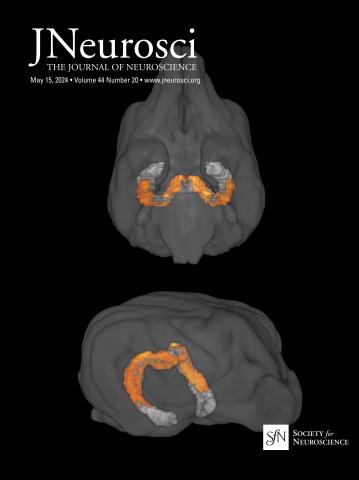


Human speech perception is multisensory, integrating auditory information from the talker's voice with visual information from the talker's face. BOLD fMRI studies have implicated the superior temporal gyrus (STG) in processing auditory speech and the superior temporal sulcus (STS) in integrating auditory and visual speech, but as an indirect hemodynamic measure, fMRI is limited in its ability to track the rapid neural computations underlying speech perception. Using stereoelectroencephalography (sEEG) electrodes, we directly recorded from the STG and STS in 42 epilepsy patients (25 F, 17 M). Participants identified single words presented in auditory, visual, and audiovisual formats, with and without added auditory noise. Seeing the talker's face provided a strong perceptual benefit, improving perception of noisy speech in every participant. Neurally, a subpopulation of electrodes concentrated in mid-posterior STG and STS responded to both auditory speech (latency 71 ms) and visual speech (latency 109 ms). Significant multisensory enhancement was observed, especially in the upper bank of the STS: compared with auditory-only speech, the response latency for audiovisual speech was 40% shorter and the response amplitude was 18% larger. In contrast, the STG showed neither faster nor larger multisensory responses. Surprisingly, STS response latencies for audiovisual speech were significantly shorter than those in the STG (50 ms vs. 64 ms), suggesting a parallel pathway model in which the STG plays the primary role in auditory-only speech perception, while the STS takes the lead in audiovisual speech perception. Together with fMRI, sEEG provides converging evidence that the STS plays a key role in multisensory integration.

Significance Statement

One of the most important functions of the human brain is communicating with others. During conversation, humans take advantage of visual information from the talker's face as well as auditory information from the talker's voice. We directly recorded activity from the brains of epilepsy patients implanted with electrodes in the superior temporal sulcus (STS), a key brain region for speech perception. These recordings showed that hearing the talker's voice while seeing the talker's face evoked larger and faster neural responses in the STS than hearing the voice alone. Multisensory enhancement in the STS may be the neural basis for our ability to better understand noisy speech when we can see the talker's face.
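The latency comparison between STS and STG can be made concrete with a relative-change calculation. The sketch below is only an illustration, assuming that enhancement is expressed as percentage change from a baseline condition (the paper's exact definition may differ); `percent_change` is a hypothetical helper, not code from the study, and the only inputs are the 50 ms and 64 ms audiovisual latencies reported in the abstract.

```python
def percent_change(baseline: float, condition: float) -> float:
    """Relative change of `condition` versus `baseline`, in percent.

    Hypothetical helper for illustration. A negative value means the
    condition value is smaller than the baseline; for latencies, that
    corresponds to a shorter (faster) response.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (condition - baseline) / baseline * 100.0


# Audiovisual-speech latencies reported in the abstract (ms).
stg_av_latency_ms = 64.0
sts_av_latency_ms = 50.0

# Use the STG as the baseline: how much shorter is the STS latency?
sts_vs_stg = percent_change(stg_av_latency_ms, sts_av_latency_ms)
print(f"STS vs. STG latency: {sts_vs_stg:.1f}%")
# prints: STS vs. STG latency: -21.9%
```

Under the same assumed definition, the "40% shorter" audiovisual latency in the STS would correspond to a value of about -40 when auditory-only speech is the baseline, and the "18% larger" amplitude to about +18.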


Source journal

Journal of Neuroscience

Medicine: Neuroscience

CiteScore

9.30

Self-citation rate

3.80%

Articles published

1164

Review time

12 months

About the journal:

JNeurosci (ISSN 0270-6474) is an official journal of the Society for Neuroscience. It is published weekly by the Society, fifty weeks a year, one volume a year. JNeurosci publishes papers on a broad range of topics of general interest to those working on the nervous system. Authors now have an Open Choice option for their published articles.
