Fast data aware neural architecture search via supernet accelerated evaluation

IF 7.6

Journal ranking: Zone 3, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

Citations: 0

Abstract


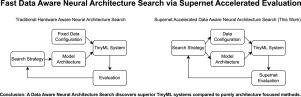


Tiny machine learning (TinyML) promises to revolutionize fields such as healthcare, environmental monitoring, and industrial maintenance by running machine learning models on low-power embedded systems. However, the complex optimizations required for successful TinyML deployment continue to impede its widespread adoption.

A promising route to simplifying TinyML is automated machine learning (AutoML), which can distill elaborate optimization workflows into accessible key decisions. Notably, hardware-aware neural architecture search, in which a computer searches for an optimal TinyML model based on both predictive performance and hardware metrics, has gained significant traction and has produced some of today's most widely used TinyML models.
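One common way to weigh predictive performance against a hardware metric is to keep only the Pareto-optimal candidates: models that no other candidate beats on accuracy and latency at the same time. The sketch below is a minimal illustration, assuming each candidate has already been scored; the candidate names and numbers are made up and are not results from this work.

```python
from typing import List, Tuple

# (name, accuracy, latency_ms): higher accuracy and lower latency are both better.
Model = Tuple[str, float, float]


def pareto_front(models: List[Model]) -> List[Model]:
    """Return the models that no other model dominates.

    A model is dominated if another one is at least as accurate AND at least
    as fast, and strictly better on at least one of the two metrics.
    """
    front = []
    for m in models:
        dominated = any(
            o[1] >= m[1] and o[2] <= m[2] and (o[1] > m[1] or o[2] < m[2])
            for o in models
        )
        if not dominated:
            front.append(m)
    return front


# Hypothetical candidates with invented scores, for illustration only.
candidates = [
    ("a", 0.80, 120.0),
    ("b", 0.78, 60.0),
    ("c", 0.75, 70.0),  # dominated by "b": less accurate and slower
    ("d", 0.70, 30.0),
    ("e", 0.82, 200.0),
]
print([name for name, _, _ in pareto_front(candidates)])  # ['a', 'b', 'd', 'e']
```

The quadratic pairwise check is fine for a handful of candidates; a practical search would typically pick from this front according to the deployment's latency budget.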

TinyML systems operate under extremely tight resource constraints, such as a few kB of memory and power consumption in the milliwatt (mW) range. In this tight design space, the choice of input data configuration offers an attractive accuracy-latency tradeoff. Achieving truly optimal TinyML systems therefore requires jointly tuning both the input data and the model architecture.
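To make the accuracy-latency point concrete, here is a minimal sketch, assuming image inputs, int8 quantization (one byte per value), and a hypothetical first layer that is a 3×3, stride-1, "same"-padded convolution with 8 output channels. It shows how two illustrative input data knobs, resolution and color channels, scale both the input buffer and the first layer's multiply-accumulate (MAC) count, used here only as a rough latency proxy.

```python
def input_bytes(height: int, width: int, channels: int) -> int:
    # int8 quantization assumed: one byte per input value.
    return height * width * channels


def first_conv_macs(height: int, width: int, in_ch: int, out_ch: int, kernel: int = 3) -> int:
    # Stride-1, 'same'-padded convolution: every output value is a dot product
    # over a kernel x kernel x in_ch window of the input.
    return height * width * out_ch * kernel * kernel * in_ch


# Hypothetical input data configurations: (square resolution, color channels).
for res, ch in [(96, 3), (96, 1), (64, 3), (64, 1), (32, 1)]:
    kb = input_bytes(res, res, ch) / 1024
    macs = first_conv_macs(res, res, ch, out_ch=8)
    print(f"{res}x{res}x{ch}: input {kb:.1f} kB, first-layer MACs {macs:,}")

# Prints:
# 96x96x3: input 27.0 kB, first-layer MACs 1,990,656
# 96x96x1: input 9.0 kB, first-layer MACs 663,552
# 64x64x3: input 12.0 kB, first-layer MACs 884,736
# 64x64x1: input 4.0 kB, first-layer MACs 294,912
# 32x32x1: input 1.0 kB, first-layer MACs 73,728
```

Halving the resolution quarters both numbers, and switching from RGB to grayscale divides them by three. Resolution also shrinks every later feature map, whereas the number of input color channels affects only the first layer, which is one reason the two knobs trade off differently against accuracy.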

Despite its importance, this “Data Aware Neural Architecture Search” remains underexplored. To address this gap, we propose a new state-of-the-art Data Aware Neural Architecture Search technique and demonstrate its effectiveness on the novel TinyML “Wake Vision” dataset. Our experiments show that across varying time and hardware constraints, Data Aware Neural Architecture Search consistently discovers superior TinyML systems compared to purely architecture-focused methods, underscoring the critical role of data-aware optimization in advancing TinyML.
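The difference between an architecture-only search and a data-aware search can be illustrated with a toy constrained search. This sketch assumes a hypothetical `Candidate` with two data knobs (resolution, color) and one architecture knob (first-layer width), a made-up peak-memory model, and a stand-in `toy_score` in place of actually training and validating each model. It is plain exhaustive search over a tiny space, not the supernet-accelerated method of this work.

```python
import itertools
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    resolution: int  # data knob: input height == width, in pixels
    channels: int    # data knob: 1 = grayscale, 3 = RGB
    width: int       # architecture knob: output channels of the first conv layer


def peak_memory_kb(c: Candidate) -> float:
    # Toy memory model (assumption): the int8 input tensor and the first conv
    # layer's output (stride 1, 'same' padding) are resident in RAM together.
    return c.resolution * c.resolution * (c.channels + c.width) / 1024


def toy_score(c: Candidate) -> float:
    # Hypothetical stand-in for training and validating a candidate: more pixels,
    # color, and width all help, with diminishing returns. Not a real accuracy model.
    color_bonus = 1.1 if c.channels == 3 else 1.0
    return (c.resolution ** 0.5) * color_bonus * (c.width ** 0.3)


def best_under_budget(space: Iterable[Candidate], budget_kb: float) -> Optional[Candidate]:
    # Exhaustive constrained search: drop infeasible candidates, keep the top scorer.
    feasible = [c for c in space if peak_memory_kb(c) <= budget_kb]
    return max(feasible, key=toy_score, default=None)


RESOLUTIONS, COLORS, WIDTHS = [32, 64, 96, 128], [1, 3], [8, 16, 32]
BUDGET_KB = 64.0

# Architecture-only search: the data configuration is fixed up front (96x96 RGB).
arch_only = [Candidate(96, 3, w) for w in WIDTHS]
# Data-aware search: data and architecture knobs are searched jointly.
joint = [Candidate(r, ch, w) for r, ch, w in itertools.product(RESOLUTIONS, COLORS, WIDTHS)]

best_arch = best_under_budget(arch_only, BUDGET_KB)
best_joint = best_under_budget(joint, BUDGET_KB)
print("architecture-only:", best_arch)
print("data-aware:", best_joint)
```

Because the fixed-data space is a subset of the joint space, an exhaustive data-aware search can never return a worse candidate. With the toy 64 kB budget, no width fits a fixed 96×96 RGB input, while the joint search still finds a feasible model by lowering the input resolution.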


Source journal: Internet of Things


CiteScore: 3.60

Self-citation rate: 5.10%

Articles published: 115

Review time: 37 days

Journal description:

Internet of Things: Engineering Cyber Physical Human Systems is a comprehensive journal encouraging cross-collaboration between researchers, engineers, and practitioners in the field of IoT and Cyber Physical Human Systems. The journal offers a unique platform for exchanging scientific information across the entire breadth of technology, science, and societal applications of the IoT.

The journal will place a high priority on timely publication and will provide a home for high-quality research.

Furthermore, IoT is interested in publishing topical special issues on any aspect of IoT.
