基于时空图神经网络的儿童动作识别数据高效方法系统分析

IF 3.5

3区 计算机科学

Q2 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

引用次数: 0

摘要

本文介绍了基于时空图神经网络(ST-GNN)的深度学习框架模型在儿童活动识别(CAR)中的实现。尽管图神经网络具有优越的性能潜力,但该领域的先前实现主要使用CNN, LSTM和其他方法。据我们所知,这项研究是第一次使用ST-GNN模型来识别儿童活动,同时使用实验室、野外和部署中的骨架数据。为了克服小型公开可用的儿童行为数据集带来的挑战,应用迁移学习方法(如特征提取和微调)来提高模型性能。作为主要贡献,我们开发了一个基于st - gnn的骨架模态模型,尽管使用了相对较小的儿童动作数据集,但与在更大(x10)的成人动作数据集(90.6%)上训练的实现相比,该模型在相似的动作子集上取得了更好的性能(94.81%)。使用基于st - gcn的特征提取和微调方法,与香草实现相比,准确率提高了10%-40%,达到了94.81%的最高准确率。此外,其他ST-GNN模型的实现表明,与ST-GCN基线相比,精度进一步提高了15%-45%。在活动数据集上的实验结果表明,类别多样性、数据集大小和仔细选择预训练数据集显著提高了准确性。在野外和部署中的实现证实了上述方法在现实世界中的适用性,ST-GNN模型在流数据上实现了11 FPS。最后,提供了图表达性和图重新布线对基于小数据集的模型精度影响的初步证据,概述了未来研究的潜在方向。代码可在https://github.com/sankamohotttala/ST_GNN_HAR_DEML上获得。本文章由计算机程序翻译,如有差异,请以英文原文为准。

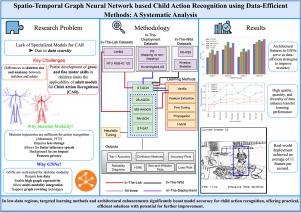

Spatio-temporal graph neural network based child action recognition using data-efficient methods: A systematic analysis

This paper presents implementations on child activity recognition (CAR) using spatial–temporal graph neural network (ST-GNN)-based deep learning models with the skeleton modality. Prior implementations in this domain have predominantly utilized CNN, LSTM, and other methods, despite the superior performance potential of graph neural networks. To the best of our knowledge, this study is the first to use an ST-GNN model for child activity recognition employing both in-the-lab, in-the-wild, and in-the-deployment skeleton data. To overcome the challenges posed by small publicly available child action datasets, transfer learning methods such as feature extraction and fine-tuning were applied to enhance model performance.

As a principal contribution, we developed an ST-GNN-based skeleton modality model that, despite using a relatively small child action dataset, achieved superior performance (94.81%) compared to implementations trained on a significantly larger (x10) adult action dataset (90.6%) for a similar subset of actions. With ST-GCN-based feature extraction and fine-tuning methods, accuracy improved by 10%–40% compared to vanilla implementations, achieving a maximum accuracy of 94.81%. Additionally, implementations with other ST-GNN models demonstrated further accuracy improvements of 15%–45% over the ST-GCN baseline.

The results on activity datasets empirically demonstrate that class diversity, dataset size, and careful selection of pre-training datasets significantly enhance accuracy. In-the-wild and in-the-deployment implementations confirm the real-world applicability of above approaches, with the ST-GNN model achieving 11 FPS on streaming data. Finally, preliminary evidence on the impact of graph expressivity and graph rewiring on accuracy of small dataset-based models is provided, outlining potential directions for future research. The codes are available at https://github.com/sankamohotttala/ST_GNN_HAR_DEML.

求助全文

通过发布文献求助,成功后即可免费获取论文全文。

去求助

来源期刊

Computer Vision and Image Understanding

工程技术-工程:电子与电气

CiteScore

7.80

自引率

4.40%

发文量

112

审稿时长

79 days

期刊介绍:

The central focus of this journal is the computer analysis of pictorial information. Computer Vision and Image Understanding publishes papers covering all aspects of image analysis from the low-level, iconic processes of early vision to the high-level, symbolic processes of recognition and interpretation. A wide range of topics in the image understanding area is covered, including papers offering insights that differ from predominant views.

Research Areas Include:

• Theory

• Early vision

• Data structures and representations

• Shape

• Range

• Motion

• Matching and recognition

• Architecture and languages

• Vision systems

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: