Mastering diverse control tasks through world models

IF 48.5

Zone 1, multidisciplinary journals

Q1 MULTIDISCIPLINARY SCIENCES

Citations: 0

Abstract

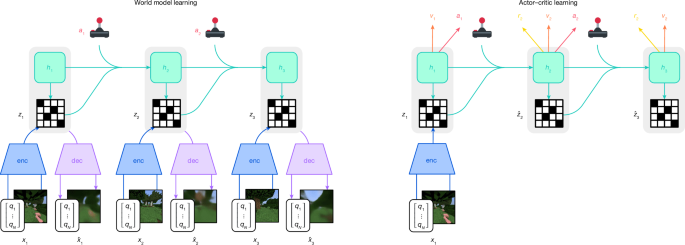

Mastering diverse control tasks through world models

Developing a general algorithm that learns to solve tasks across a wide range of applications has been a fundamental challenge in artificial intelligence. Although current reinforcement-learning algorithms can be readily applied to tasks similar to what they have been developed for, configuring them for new application domains requires substantial human expertise and experimentation [1,2]. Here we present the third generation of Dreamer, a general algorithm that outperforms specialized methods across over 150 diverse tasks, with a single configuration. Dreamer learns a model of the environment and improves its behaviour by imagining future scenarios. Robustness techniques based on normalization, balancing and transformations enable stable learning across domains. Applied out of the box, Dreamer is, to our knowledge, the first algorithm to collect diamonds in Minecraft from scratch without human data or curricula. This achievement has been posed as a substantial challenge in artificial intelligence that requires exploring farsighted strategies from pixels and sparse rewards in an open world [3]. Our work allows solving challenging control problems without extensive experimentation, making reinforcement learning broadly applicable.

A general reinforcement-learning algorithm, called Dreamer, outperforms specialized expert algorithms across diverse tasks by learning a model of the environment and improving its behaviour by imagining future scenarios.

Source journal

Nature

Category: Multidisciplinary journals

CiteScore: 90.00

Self-citation rate: 1.20%

Articles published: 3652

Review time: 3 months

About the journal:

Nature is a prestigious international journal that publishes peer-reviewed research in various scientific and technological fields. The selection of articles is based on criteria such as originality, importance, interdisciplinary relevance, timeliness, accessibility, elegance, and surprising conclusions. In addition to showcasing significant scientific advances, Nature delivers rapid, authoritative, insightful news, and interpretation of current and upcoming trends impacting science, scientists, and the broader public. The journal serves a dual purpose: firstly, to promptly share noteworthy scientific advances and foster discussions among scientists, and secondly, to ensure the swift dissemination of scientific results globally, emphasizing their significance for knowledge, culture, and daily life.
