Control-Informed Reinforcement Learning for Chemical Processes

IF 3.9

Zone 3, Engineering & Technology

Q2 ENGINEERING, CHEMICAL

Citations: 0

Abstract

Control-Informed Reinforcement Learning for Chemical Processes

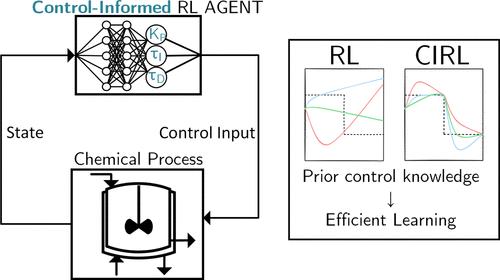

This work proposes a control-informed reinforcement learning (CIRL) framework that integrates proportional-integral-derivative (PID) control components into the architecture of deep reinforcement learning (RL) policies, incorporating prior knowledge from control theory into the learning process. CIRL improves performance and robustness by combining the best of both worlds: the disturbance-rejection and set point-tracking capabilities of PID control and the nonlinear modeling capacity of deep RL. Simulation studies conducted on a continuously stirred tank reactor system demonstrate the improved performance of CIRL compared to both conventional model-free deep RL and static PID controllers. CIRL exhibits better set point-tracking ability, particularly when generalizing to trajectories containing set points outside the training distribution, suggesting enhanced generalization capabilities. Furthermore, the embedded prior control knowledge within the CIRL policy improves its robustness to unobserved system disturbances. The CIRL framework combines the strengths of classical control and reinforcement learning to develop sample-efficient and robust deep reinforcement learning algorithms with potential applications in complex industrial systems.
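The abstract does not spell out how the PID components sit inside the policy. One plausible reading, sketched below purely as an assumption, is that a small network maps the observation to bounded PID gains and a PID layer then converts those gains and the tracking error into the control action. Every name here (`TinyGainPolicy`, `PIDLayer`, `rollout`, `GAIN_MAX`), the gain bounds, the untrained random weights, and the toy first-order plant are hypothetical illustrations; none of them comes from the paper, and the plant is not the stirred tank reactor model used in the study.

```python
import math
import random

# Assumed upper bounds for (Kp, Ki, Kd); illustrative values only.
GAIN_MAX = (5.0, 5.0, 0.2)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyGainPolicy:
    """Hypothetical stand-in for the deep RL policy: one hidden layer, untrained."""

    def __init__(self, n_in, n_hidden=8, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
                   for _ in range(3)]

    def gains(self, obs):
        """Map an observation to bounded, positive (Kp, Ki, Kd)."""
        hidden = [math.tanh(sum(w * x for w, x in zip(row, obs)))
                  for row in self.w1]
        raw = [sum(w * h for w, h in zip(row, hidden)) for row in self.w2]
        return tuple(g_max * sigmoid(r) for g_max, r in zip(GAIN_MAX, raw))


class PIDLayer:
    """Positional-form PID: turns gains and the tracking error into an action."""

    def __init__(self, dt):
        self.dt = dt
        self.integral = 0.0
        self.prev_error = None

    def action(self, error, kp, ki, kd):
        self.integral += error * self.dt
        # No derivative term on the first call, to avoid an initial kick.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return kp * error + ki * self.integral + kd * derivative


def rollout(policy, setpoint=1.0, steps=200, dt=0.05):
    """Closed loop on a toy plant dx/dt = -x + u (an assumed test system)."""
    pid = PIDLayer(dt)
    x = 0.0
    trajectory = []
    for _ in range(steps):
        error = setpoint - x
        kp, ki, kd = policy.gains([x, setpoint, error])
        u = pid.action(error, kp, ki, kd)
        x += dt * (-x + u)  # explicit Euler step of the toy plant
        trajectory.append((x, u, (kp, ki, kd)))
    return trajectory


if __name__ == "__main__":
    final_x, final_u, final_gains = rollout(TinyGainPolicy(n_in=3))[-1]
    print(final_x, final_u, final_gains)
```

In a setup like this, an RL algorithm would train the weights of the gain network against a tracking reward, while the PID layer itself stays fixed. Keeping that layer fixed is one way the control structure could act as prior knowledge in the policy.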

Source journal

Industrial & Engineering Chemistry Research

Engineering & Technology - Engineering: Chemical

CiteScore

7.40

Self-citation rate

7.10%

Articles published

1467

Review time

2.8 months

Journal description:

Industrial & Engineering Chemistry, with variations in title and format, has been published since 1909 by the American Chemical Society. Industrial & Engineering Chemistry Research is a weekly publication that reports industrial and academic research in the broad fields of applied chemistry and chemical engineering with special focus on fundamentals, processes, and products.
