Deep recurrent Q-network algorithm for carbon emission allowance trading strategy

Impact factor: 8

Zone 2: Environmental Science and Ecology

Q1 ENVIRONMENTAL SCIENCES

Citations: 0

Abstract


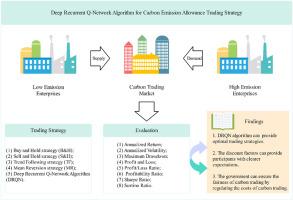


Against the backdrop of global warming, the carbon trading market is considered an effective means of emission reduction. As more and more companies and individuals participate in carbon market trading, helping them automatically identify carbon trading investment opportunities and make intelligent trading decisions is of great theoretical and practical significance. Based on the characteristics of the carbon trading market, we propose a novel deep reinforcement learning (DRL) trading strategy: the Deep Recurrent Q-Network (DRQN). Experimental results show that the carbon allowance trading model based on the DRQN algorithm can provide optimal trading strategies and adapt to market changes. Specifically, the DRQN strategy achieves annualized returns of 15.43% in the Guangdong (GD) carbon market and 34.75% in the Hubei (HB) carbon market, significantly outperforming other strategies. To better match the model's practical application scenarios, we analyze the impacts of the discount factor and trading costs. The results indicate that the discount factor can give participants clearer expectations. In both carbon markets (GD and HB), the optimal discount factor is 0.4; values that are either too small or too large adversely affect trading. In addition, the government can ensure the fairness of carbon trading by regulating trading costs to limit participants' speculative behavior.
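The roles of the discount factor and trading costs can be made concrete with a short Python sketch. It is not the authors' implementation. The reward definition is a hypothetical assumption: mark-to-market profit minus a proportional transaction cost on any change in the number of allowances held. The function names and example numbers are also hypothetical. The sketch shows two things: how a discount factor γ weights future rewards in the one-step target r + γ·max Q(s′, a′) used by DQN-family methods such as DRQN, and how a trading cost lowers a per-step reward.

```python
# Illustrative sketch only (not the paper's code). The reward definition,
# function names, and example numbers are hypothetical assumptions.


def discount_weights(gamma: float, horizon: int) -> list[float]:
    """Weight gamma**k applied to the reward received k steps ahead."""
    return [gamma ** k for k in range(horizon)]


def discounted_return(rewards: list[float], gamma: float) -> float:
    """G_0 = sum_k gamma**k * r_k, accumulated backwards from the last reward."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td_target(reward: float, next_q_values: list[float], gamma: float, done: bool) -> float:
    """One-step DQN-family target: r + gamma * max_a' Q(s', a'); just r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def step_reward(position: int, prev_position: int, price: float,
                next_price: float, cost_rate: float) -> float:
    """Hypothetical per-step reward: P&L on the units held (position) minus a
    proportional cost charged on the number of units bought or sold."""
    pnl = position * (next_price - price)
    cost = cost_rate * abs(position - prev_position) * price
    return pnl - cost


if __name__ == "__main__":
    # Hypothetical constant daily reward, used only to compare gamma values.
    rewards = [1.0] * 5
    for gamma in (0.1, 0.4, 0.9):
        weights = [round(w, 4) for w in discount_weights(gamma, 5)]
        print(gamma, weights, round(discounted_return(rewards, gamma), 4))
    # Buying one unit at 20.0 that rises to 21.0, with a hypothetical 1% cost rate.
    print(round(step_reward(1, 0, 20.0, 21.0, 0.01), 4))
```

With γ = 0.4, a reward three steps ahead carries weight 0.4³ = 0.064, so the agent's target is dominated by the next few steps. A γ near 0 makes it almost myopic, and a γ near 1 spreads weight over a long horizon. Under the assumed reward, raising `cost_rate` penalizes frequent position changes. This mirrors the abstract's point that trading costs can curb speculative trading.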


Source journal

Journal of Environmental Management

Environmental Science - Environmental Science

CiteScore: 13.70

Self-citation rate: 5.70%

Articles published: 2477

Review time: 84 days

Journal description:

The Journal of Environmental Management is a journal for the publication of peer reviewed, original research for all aspects of management and the managed use of the environment, both natural and man-made. Critical review articles are also welcome; submission of these is strongly encouraged.
