Generalization of neural network models for complex network dynamics

IF 5.4

Zone 1, Physics and Astrophysics

Q1 PHYSICS, MULTIDISCIPLINARY

Citations: 0

Abstract

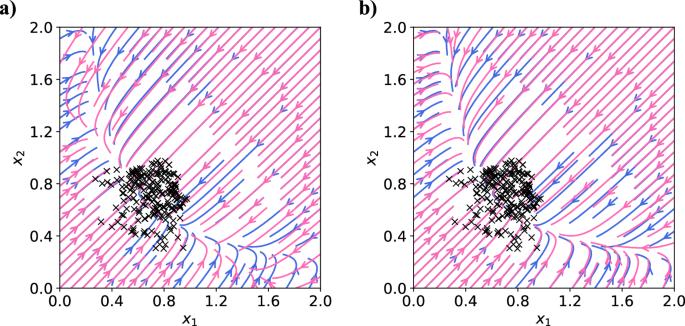



Differential equations are a ubiquitous tool for studying dynamics, from physical systems to complex systems in which a large number of agents interact through a graph. Data-driven approximations of differential equations are a promising alternative to traditional methods for uncovering models of dynamical systems, especially complex systems that lack explicit first principles. Neural networks are a recently adopted machine learning tool for studying dynamics; they can be used to solve differential equations or to discover them. However, deploying deep learning models in unfamiliar settings, such as predicting dynamics in unobserved regions of state space or on novel graphs, can lead to spurious results. We focus on complex systems whose dynamics are described by a system of first-order differential equations coupled through a graph. For these systems, we study how neural network predictions generalize when the statistical properties of the test data differ from those of the training data. We find that neural networks can accurately predict dynamics beyond the immediate training setting, as long as the predictions stay within the domain of the training data. To identify when a model is unable to generalize to novel settings, we propose a statistical significance test.

Deep learning is a promising alternative to traditional methods for discovering governing equations. These alternatives include variational and perturbation methods as well as data-driven approaches such as symbolic regression. This paper explores how neural approximations of dynamics on complex networks generalize to novel, unobserved settings. It also proposes a statistical testing framework to quantify confidence in the inferred predictions.
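The abstract does not give the model class or the proposed test in closed form. The Python below is a minimal sketch under stated assumptions. It uses the common pairwise form dx_i/dt = F(x_i) + Σ_j A_ij G(x_i, x_j) for "first-order differential equations coupled through a graph." The hypothetical choices are F(x) = -x and G(x_i, x_j) = x_j / (1 + x_j), on a hypothetical 3-node path graph. The sketch generates trajectories of the kind a neural approximation would be trained on; no network is trained here. It then applies a generic two-sample permutation test on the mean state to flag a test set whose statistics differ from the training set. The permutation test is a stand-in chosen for illustration. It is not the statistical significance test proposed in the paper.

```python
import math
import random
from typing import List, Sequence


# Hypothetical illustrative choices (not taken from the paper):
# self-dynamics F(x) = -x and a saturating pairwise coupling G(x_i, x_j) = x_j / (1 + x_j).
def self_term(x: float) -> float:
    return -x


def coupling(x_i: float, x_j: float) -> float:
    # x_i is unused here but kept so other G(x_i, x_j) forms can be swapped in.
    return x_j / (1.0 + x_j)


def simulate(adj: Sequence[Sequence[float]], x0: Sequence[float],
             dt: float, steps: int) -> List[List[float]]:
    """Forward-Euler integration of dx_i/dt = F(x_i) + sum_j A_ij G(x_i, x_j).

    Returns the trajectory as a list of steps + 1 network states.
    """
    n = len(x0)
    x = list(x0)
    traj = [list(x)]
    for _ in range(steps):
        dxdt = [
            self_term(x[i]) + sum(adj[i][j] * coupling(x[i], x[j]) for j in range(n))
            for i in range(n)
        ]
        x = [x[i] + dt * dxdt[i] for i in range(n)]
        traj.append(list(x))
    return traj


def _mean(xs: Sequence[float]) -> float:
    return math.fsum(xs) / len(xs)


def permutation_pvalue(train: Sequence[float], test: Sequence[float],
                       n_perm: int = 999, seed: int = 0) -> float:
    """Two-sample permutation test on the absolute difference of means.

    A small p-value indicates that the mean of the test states differs
    from the mean of the training states.
    """
    rng = random.Random(seed)
    observed = abs(_mean(train) - _mean(test))
    pooled = list(train) + list(test)
    k = len(train)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if abs(_mean(pooled[:k]) - _mean(pooled[k:])) >= observed:
            hits += 1
    # The +1 terms count the observed split itself, so p is never exactly 0.
    return (hits + 1) / (n_perm + 1)


if __name__ == "__main__":
    # Hypothetical 3-node path graph 0 - 1 - 2 (symmetric adjacency matrix).
    A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
    # "Training" states from small initial conditions, "test" states from large ones.
    train_states = [v for s in simulate(A, [0.1, 0.2, 0.3], dt=0.01, steps=200) for v in s]
    test_states = [v for s in simulate(A, [5.0, 6.0, 7.0], dt=0.01, steps=200) for v in s]
    print("p-value:", permutation_pvalue(train_states, test_states))
```

In this example the test trajectory starts far outside the range of states visited during training, so the sketch reports a small p-value. Comparing the training states with themselves gives p = 1. A test on the mean only detects a shift in location. Comparing full distributions would be a natural refinement.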


Source journal

Communications Physics

Physics and Astronomy-General Physics and Astronomy

CiteScore

8.40

Self-citation rate

3.60%

Articles published

276

Review time

13 weeks

Journal description:

Communications Physics is an open access journal from Nature Research publishing high-quality research, reviews and commentary in all areas of the physical sciences. Research papers published by the journal represent significant advances bringing new insight to a specialized area of research in physics. We also aim to provide a community forum for issues of importance to all physicists, regardless of sub-discipline.

The scope of the journal covers all areas of experimental, applied, fundamental, and interdisciplinary physical sciences. Primary research published in Communications Physics includes novel experimental results, new techniques or computational methods that may influence the work of others in the sub-discipline. We also consider submissions from adjacent research fields where the central advance of the study is of interest to physicists, for example, materials science, physical chemistry and technology.
