Dispatch of decentralized energy systems using artificial neural networks: A comparative analysis with emphasis on training methods

IF 7.1

Q1 ENERGY & FUELS

Citations: 0

Abstract

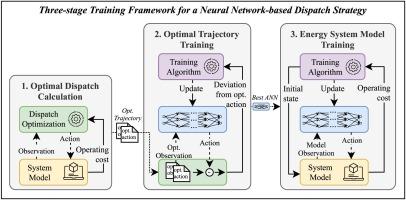

Dispatch of decentralized energy systems using artificial neural networks: A comparative analysis with emphasis on training methods

Owing to their flexibility, Decentralized Energy Systems (DES) play a central role in integrating renewable energies. To utilize renewable energy efficiently, dispatchable components must be operated to bridge the time gap between inflexible supply and energy demand. Given the large number of energy converters, energy storage systems, and flexible consumers, there are many ways to achieve this. Conventional rule-based dispatch strategies often reach their limits here, and optimized dispatch strategies (e.g., model predictive control or optimal dispatch) usually depend on very good forecasts. Reinforcement learning, particularly the application of Artificial Neural Networks (ANN), offers the possibility to learn complex decision-making processes. Since this requires long training times, an efficient training framework is needed. The present paper proposes different training methods to learn an ANN-based dispatch strategy. In Method I, the ANN attempts to learn the solution to the corresponding optimal dispatch problem. In Method II, the energy system model is simulated during training to compute the observation state and operating costs resulting from the ANN-based dispatch. Method III uses the fast-executable Method I solution as a warm-up solution for the computationally expensive training with Method II. A model-based analysis compares the different ANN-based dispatch strategies with rule-based dispatch strategies, model predictive dispatch (MPC), and optimal dispatch regarding their computational efficiency and the resulting operating costs. The dispatch strategies are compared in three case studies with different system topologies, using both training and test data.

Training Method I proved to be non-competitive. However, training Methods II and III significantly outperformed rule-based dispatch strategies across all case studies for both training and test data sets. Notably, Methods II and III also surpassed MPC-based dispatch strategies under high- and medium-uncertainty forecasts for the training data in the first two case studies. In contrast, MPC-based dispatch was superior in the third case study, likely due to the system's higher complexity, and on the test data set, owing to its methodological advantage of being optimized for each specific data set. The effectiveness of training Method III depends on the performance of the warm-up training with Method I: warm-up is beneficial only if it already yields a promising dispatch (as seen in case study two). Otherwise, training Method II proves more effective, as observed in case studies one and three.
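The relationship between the three training methods can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the price series, the single-battery system, a two-parameter linear policy standing in for the ANN, a threshold rule standing in for the optimal-dispatch solution, and a simple random search standing in for the paper's training algorithm.

```python
import random

# Hypothetical toy data: hourly prices, a constant load, and one battery.
PRICES = [30.0, 25.0, 20.0, 22.0, 35.0, 50.0, 60.0, 55.0, 40.0, 45.0, 65.0, 38.0]
DEMAND = 1.0      # load per time step
CAPACITY = 3.0    # battery energy capacity
P_MAX = 1.0       # maximum charge/discharge power per step
MEAN_PRICE = sum(PRICES) / len(PRICES)


def policy(w, price):
    """Linear stand-in for the ANN: battery power (+ charge, - discharge)."""
    u = w[0] + w[1] * (price - MEAN_PRICE)
    return max(-P_MAX, min(P_MAX, u))


def simulate_cost(w):
    """Roll the policy through the system model and return operating cost."""
    soc, cost = 0.0, 0.0
    for price in PRICES:
        u = policy(w, price)
        u = max(-soc, min(CAPACITY - soc, u))  # keep state of charge feasible
        soc += u
        cost += price * (DEMAND + u)           # grid import covers load + charging
    return cost


def train_method_1(reference):
    """Method I idea: fit the policy to a reference dispatch (least squares)."""
    xs = [p - MEAN_PRICE for p in PRICES]      # centered, so intercept = mean label
    w0 = sum(reference) / len(reference)
    w1 = sum(x * y for x, y in zip(xs, reference)) / sum(x * x for x in xs)
    return [w0, w1]


def train_method_2(w_init, steps=300, seed=0):
    """Method II idea: improve the policy by simulating its operating cost.

    Random search is used here only as a simple stand-in optimizer.
    """
    rng = random.Random(seed)
    best_w, best_cost = list(w_init), simulate_cost(w_init)
    for _ in range(steps):
        cand = [wi + rng.gauss(0.0, 0.05) for wi in best_w]
        cand_cost = simulate_cost(cand)
        if cand_cost < best_cost:              # accept only improvements
            best_w, best_cost = cand, cand_cost
    return best_w, best_cost


# Stand-in for the optimal dispatch (NOT a true optimum): charge when cheap.
reference = [P_MAX if p < MEAN_PRICE else -P_MAX for p in PRICES]

w_i = train_method_1(reference)                # Method I
w_ii, cost_ii = train_method_2([0.0, 0.0])     # Method II from scratch
w_iii, cost_iii = train_method_2(w_i)          # Method III: warm start from Method I
```

In this sketch, Method III is simply Method II started from the Method I weights, so whether the warm start helps depends on how good the Method I fit already is, which mirrors the dependence described in the abstract.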

Source journal

Energy Conversion and Management: X

Multiple-

CiteScore

8.80

Self-citation rate

3.20%

Articles published

180

Review time

58 days

About the journal:

Energy Conversion and Management: X is the open access extension of the reputable journal Energy Conversion and Management, serving as a platform for interdisciplinary research on a wide array of critical energy subjects. The journal is dedicated to publishing original contributions and in-depth technical review articles that present groundbreaking research on topics spanning energy generation, utilization, conversion, storage, transmission, conservation, management, and sustainability.

The scope of Energy Conversion and Management: X encompasses various forms of energy, including mechanical, thermal, nuclear, chemical, electromagnetic, magnetic, and electric energy. It addresses all known energy resources, covering conventional sources such as fossil fuels and nuclear power as well as renewable resources such as solar, biomass, hydro, wind, geothermal, and ocean energy.
