A LiDAR-depth camera information fusion method for human robot collaboration environment

Impact factor: 14.7

Zone 1: Computer Science

Q1: Computer Science, Artificial Intelligence

Citations: 0

Abstract

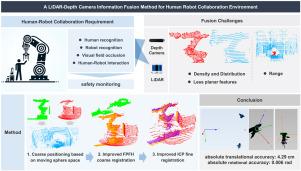

随着先进制造业中人机协作的发展,多传感器集成日益成为确保人机互动安全的关键组成部分。鉴于深度摄像头和激光雷达等多传感器数据在范围尺度、密度和排列模式上的差异,准确融合多种来源的信息已成为保障人机安全的迫切需要。本文以激光雷达和深度摄像头为重点,探讨数据采集范围、点密度和分布模式的差异给信息融合带来的挑战。我们为人机协作环境提出了一种异构传感器信息融合方法。为了解决点云范围尺度差异巨大的问题,我们引入了移动球空间粗定位算法,根据相似特征缩小感兴趣的尺度范围。此外,为了解决点云之间密度差异大、重叠率低的难题,我们提出了基于重叠率的改进型 FPFH 粗配准算法和基于生成对应点的增强型 ICP 精配准算法。本文提出的方法被应用于在人机协作场景中融合来自 64 线激光雷达和深度相机的信息。实验结果表明,绝对平移精度为 4.29 厘米,绝对旋转精度为 0.006 拉德,满足了人机协作中异构传感器信息融合的要求。本文章由计算机程序翻译,如有差异,请以英文原文为准。

A LiDAR-depth camera information fusion method for human robot collaboration environment

With the evolution of human–robot collaboration in advanced manufacturing, multisensor integration has become a critical component for ensuring safety during human–robot interactions. Data from different sensors, such as depth cameras and LiDAR, differ in range scale, density, and arrangement pattern, so accurately fusing information from multiple sources has emerged as a pressing need for human–robot safety. This paper focuses on LiDAR and depth cameras, addressing the differences in data collection range, point density, and distribution pattern that complicate information fusion. We propose a heterogeneous sensor information fusion method for human–robot collaborative environments. To handle the substantial differences in point cloud range scales, a moving sphere space coarse localization algorithm is introduced, which narrows down the scale of interest based on similar features. Furthermore, to address the significant density differences and low overlap rates between point clouds, we present an improved FPFH coarse registration algorithm based on overlap ratio and an enhanced ICP fine registration algorithm based on the generation of corresponding points. The proposed method is applied to the fusion of information from a 64-line LiDAR and a depth camera within a human–robot collaboration scene. Experimental results demonstrate an absolute translational accuracy of 4.29 cm and an absolute rotational accuracy of 0.006 rad, meeting the requirements for heterogeneous sensor information fusion in the context of human–robot collaboration.
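The abstract reports registration quality as an absolute translational error (in cm) and an absolute rotational error (in rad) but does not say how these are computed. The sketch below shows one common convention, assumed here rather than taken from the paper: the translational error is the Euclidean distance between the estimated and reference translation vectors, and the rotational error is the geodesic angle between the estimated and reference rotation matrices. All function names and numeric values are hypothetical and chosen only for illustration.

```python
import math


def transpose(m):
    """Transpose a 3x3 matrix given as a list of rows."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Multiply two 3x3 matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_error_rad(r_est, r_ref):
    """Geodesic angle (radians) between two 3x3 rotation matrices."""
    r_delta = matmul(transpose(r_ref), r_est)  # relative rotation
    trace = r_delta[0][0] + r_delta[1][1] + r_delta[2][2]
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_angle)


def translation_error(t_est, t_ref):
    """Euclidean distance between two translation vectors (same unit as input)."""
    return math.dist(t_est, t_ref)


def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Hypothetical reference and estimated extrinsics (metres and radians);
# these are NOT values from the paper.
r_ref, t_ref = rot_z(0.300), (1.00, 2.00, 0.50)
r_est, t_est = rot_z(0.306), (1.03, 2.03, 0.51)

print(f"rotation error: {rotation_error_rad(r_est, r_ref):.4f} rad")
print(f"translation error: {translation_error(t_est, t_ref) * 100:.2f} cm")
```

Under this convention, a registration result would be compared against a reference transform (for example, one obtained from a calibration target), and the two scalars would be reported side by side as in the abstract.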


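Source journal

Information Fusion

Engineering & Technology - Computer Science: Theory and Methods

CiteScore

33.20

Self-citation rate

4.30%

Articles published

161

Review time

7.9 months

About the journal: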

Information Fusion serves as a central platform for showcasing advancements in multi-sensor, multi-source, multi-process information fusion, fostering collaboration among diverse disciplines driving its progress. It is the leading outlet for sharing research and development in this field, focusing on architectures, algorithms, and applications. Papers dealing with fundamental theoretical analyses as well as those demonstrating their application to real-world problems will be welcome.
