LightingFormer: Transformer-CNN hybrid network for low-light image enhancement

IF 2.5

Zone 4, Computer Science

Q2 COMPUTER SCIENCE, SOFTWARE ENGINEERING

Citations: 0

Abstract


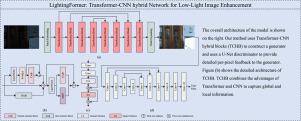

LightingFormer: Transformer-CNN hybrid network for low-light image enhancement

Recent deep-learning methods have shown promising results in low-light image enhancement. However, current methods often suffer from noise and artifacts, and most are based on convolutional neural networks (CNNs), whose limited ability to capture long-range dependencies leads to insufficient recovery of the extremely dark parts of low-light images. To tackle these issues, this paper proposes a novel Transformer-based low-light image enhancement network called LightingFormer. Specifically, we propose a novel Transformer-CNN hybrid block that captures global and local information via mixed attention. It combines the strength of the Transformer in capturing long-range dependencies with the strength of CNNs in extracting low-level features and enhancing locality, in order to recover extremely dark parts and enhance local details in low-light images. Moreover, we adopt a U-Net discriminator to enhance different regions of low-light images adaptively, avoiding overexposure and underexposure and suppressing noise and artifacts. Extensive experiments show that our method outperforms state-of-the-art methods both quantitatively and qualitatively. Furthermore, an application to object detection demonstrates the potential of our method in high-level vision tasks.
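The abstract does not describe the internals of the hybrid block, so the following is only a minimal sketch of the general idea of pairing a global attention branch with a local convolutional branch. Everything in it is an assumption made for illustration: the function names (`global_attention`, `local_conv`, `hybrid_block`), the identity query/key/value projections, the fixed 3x3 box filter standing in for a learned convolution, and the additive fusion with a residual connection. It is not the LightingFormer block itself.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def global_attention(tokens):
    """Single-head self-attention over all tokens.

    Assumption: identity Q/K/V projections, so no learned weights are needed.
    Every token attends to every other token (the long-range branch).
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    out = []
    for q in tokens:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, tokens))
                    for c in range(dim)])
    return out


def local_conv(fmap):
    """Depthwise 3x3 box filter with zero padding (the local branch).

    Assumption: a fixed averaging kernel stands in for a learned convolution.
    """
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    out = [[[0.0] * c for _ in range(w)] for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        for ch in range(c):
                            out[i][j][ch] += fmap[ii][jj][ch] / 9.0
    return out


def hybrid_block(fmap):
    """Fuse both branches: output = input + global branch + local branch.

    Assumption: plain additive fusion; the paper's mixed attention may differ.
    fmap is a nested list of shape [height][width][channels].
    """
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    tokens = [fmap[i][j] for i in range(h) for j in range(w)]
    glob = global_attention(tokens)
    loc = local_conv(fmap)
    return [[[fmap[i][j][ch] + glob[i * w + j][ch] + loc[i][j][ch]
              for ch in range(c)] for j in range(w)] for i in range(h)]
```

Calling `hybrid_block` on a nested list of shape `[height][width][channels]` returns a feature map of the same shape. In a real network both branches would carry learned weights and would typically be written with a tensor library rather than nested lists.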


Source journal

Computers & Graphics-UK

Engineering Technology - Computer Science: Software Engineering

CiteScore: 5.30

Self-citation rate: 12.00%

Articles published: 173

Review time: 38 days

Journal description:

Computers & Graphics is dedicated to disseminating information on research and applications of computer graphics (CG) techniques. The journal encourages articles on:

1. Research and applications of interactive computer graphics. We are particularly interested in novel interaction techniques and applications of CG to problem domains.

2. State-of-the-art papers on late-breaking, cutting-edge research on CG.

3. Information on innovative uses of graphics principles and technologies.

4. Tutorial papers on both teaching CG principles and innovative uses of CG in education.
