Visual interpretability of bioimaging deep learning models

IF 36.1

Zone 1: Biology

Q1 BIOCHEMICAL RESEARCH METHODS

Citations: 0

Abstract

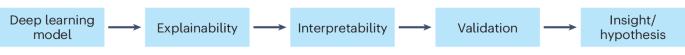

深度学习在分析生物图像方面的成功是以牺牲具有生物学意义的解释为代价的。我们回顾了可解释人工智能(XAI)在生物成像中的应用现状,并讨论了它在假设生成和数据驱动发现方面的潜力。本文章由计算机程序翻译,如有差异,请以英文原文为准。

Visual interpretability of bioimaging deep learning models

The success of deep learning in analyzing bioimages comes at the expense of biologically meaningful interpretations. We review the state of the art of explainable artificial intelligence (XAI) in bioimaging and discuss its potential in hypothesis generation and data-driven discovery.

Source journal

Nature Methods

Biology - Biochemical Research Methods

CiteScore

58.70

Self-citation rate

1.70%

Articles published

326

Review time

1 month

Journal description:

Nature Methods is a monthly journal that focuses on publishing innovative methods and substantial enhancements to fundamental life sciences research techniques. Geared towards a diverse, interdisciplinary readership of researchers in academia and industry engaged in laboratory work, the journal offers new tools for research and emphasizes the immediate practical significance of the featured work. It publishes primary research papers and reviews recent technical and methodological advancements, with a particular interest in primary methods papers relevant to the biological and biomedical sciences. This includes methods rooted in chemistry with practical applications for studying biological problems.