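Exploring generative AI assisted feedback writing for students' written responses to a physics conceptual question with prompt engineering and few-shot learning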

IF 2.6

Zone 2, Education

Q1 EDUCATION & EDUCATIONAL RESEARCH

Physical Review Physics Education Research

Pub Date: 2024-06-13

DOI: 10.1103/physrevphyseducres.20.010152

Citations: 0

Abstract

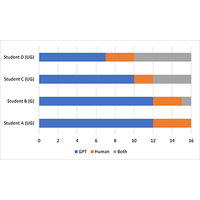



Instructor feedback plays a critical role in students' development of conceptual understanding and reasoning skills. However, grading students' written responses and providing personalized feedback can take a substantial amount of time, especially in large-enrollment courses. In this study, we explore using GPT-3.5 to write feedback on students' written responses to conceptual questions with prompt engineering and few-shot learning techniques. In stage I, we used a small portion (n = 20) of the student responses to one conceptual question to iteratively train GPT to generate feedback. Four of these responses, paired with human-written feedback, were included in the prompt as examples for GPT. We then tasked GPT with generating feedback for the other 16 responses and refined the prompt through several iterations. In stage II, we gave four student researchers (one graduate and three undergraduate researchers) the 16 responses, each with two versions of feedback: one written by the authors and the other by GPT. The students were asked to rate the correctness and usefulness of each feedback message and to indicate which one was generated by GPT. The results showed that the students tended to rate the human and GPT feedback equally on correctness, but all of them rated the GPT feedback as more useful. Additionally, the success rates of identifying GPT's feedback were low, ranging from 0.1 to 0.6. In stage III, we tasked GPT with generating feedback for the rest of the students' responses (n = 65). The feedback messages were rated by four instructors based on the extent of modification needed if they were to give the feedback to students. All four instructors rated approximately 70% (ranging from 68% to 78%) of the feedback statements as needing only minor or no modification. This study demonstrated the feasibility of using generative artificial intelligence (AI) as an assistant to generate feedback on student written responses with only a relatively small number of examples in the prompt. An AI assistant can be one of the solutions for substantially reducing the time spent grading student written responses.
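The few-shot setup described in the abstract (a handful of student responses paired with human-written feedback, placed in the prompt ahead of each new response) can be sketched as follows. This is a minimal illustration, assuming a chat-style message list with system, user, and assistant roles; the `Example` class, the `build_few_shot_messages` helper, and all placeholder texts are hypothetical, and the paper's actual prompt wording is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """Hypothetical container: one student response plus instructor-written feedback."""
    response: str
    feedback: str


def build_few_shot_messages(
    instructions: str, examples: list[Example], new_response: str
) -> list[dict[str, str]]:
    """Assemble a chat-style few-shot prompt (hypothetical helper).

    The system turn carries the task instructions. Each worked example becomes
    a user turn (the student response) followed by an assistant turn (the
    human-written feedback). The ungraded response goes last, so the model is
    asked to continue the established pattern for it.
    """
    messages = [{"role": "system", "content": instructions}]
    for ex in examples:
        messages.append({"role": "user", "content": ex.response})
        messages.append({"role": "assistant", "content": ex.feedback})
    messages.append({"role": "user", "content": new_response})
    return messages


if __name__ == "__main__":
    # Placeholder texts only; using four examples mirrors the stage I setup.
    shots = [
        Example(f"student response {i}", f"instructor feedback {i}")
        for i in range(1, 5)
    ]
    prompt = build_few_shot_messages(
        "You write feedback on students' answers to a physics conceptual question.",
        shots,
        "an ungraded student response",
    )
    print(len(prompt))  # 1 system + 4 x 2 example turns + 1 new response = 10
```

The resulting list could then be sent to a chat-completion endpoint, for example with the OpenAI Python SDK's `client.chat.completions.create(model=..., messages=...)`; that call, the model name, and any sampling settings are assumptions rather than details reported in the abstract. Encoding each example as a user/assistant turn pair, instead of one long block of text, makes it unambiguous where a student response ends and its feedback begins.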


Source journal: Physical Review Physics Education Research

Subject area: Social Sciences - Education

CiteScore: 5.70

Self-citation rate: 41.90%

Articles published: 84

Review time: 32 weeks

Journal description:

PRPER covers all educational levels, from elementary through graduate education. All topics in experimental and theoretical physics education research are accepted, including, but not limited to:

Educational policy

Instructional strategies and materials development

Research methodology

Epistemology, attitudes, and beliefs

Learning environment

Scientific reasoning and problem solving

Diversity and inclusion

Learning theory

Student participation

Faculty and teacher professional development
