Artificial neural networks with uniform norm-based loss functions

Impact factor: 1.7

CAS journal ranking: Zone 3, Mathematics

JCR quartile: Q2, Mathematics, Applied

Citations: 0

Abstract

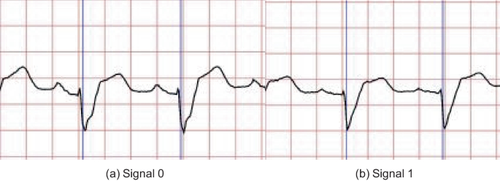

我们探讨了在无隐藏层的人工神经网络训练中使用基于最大值正态的非平滑损失函数的可能性。我们假设,在某些特殊情况下,即训练数据非常小或类的大小不成比例时,这可能会带来更优越的分类结果。我们在一个没有隐藏层的简单人工神经网络上进行的数值实验似乎证实了我们的假设。本文章由计算机程序翻译,如有差异,请以英文原文为准。

We explore the potential of using a nonsmooth loss function based on the max-norm (uniform norm) to train an artificial neural network without hidden layers. We hypothesise that this may lead to superior classification results in some special cases, namely when the training set is very small or the class sizes are highly disproportionate. Our numerical experiments, performed on a simple artificial neural network with no hidden layer, appear to confirm our hypothesis.
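The abstract does not spell out the exact loss or optimizer, so the following is only a minimal sketch under stated assumptions. It assumes that a network with no hidden layer is a single affine map f(x) = w·x + b, and that the max-norm loss is the uniform norm of the residual vector over the training set, max_i |y_i - f(x_i)|. Because this loss is nonsmooth, the sketch trains with a subgradient method. The function names (`predict`, `max_norm_loss`, `mse_loss`, `train_max_norm`), the -1/+1 label encoding, the step-size schedule and the toy data are all hypothetical illustrations, not the authors' method.

```python
def predict(w, b, x):
    """Output of a network with no hidden layer: one affine map w.x + b."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def max_norm_loss(w, b, X, y):
    """Uniform (max-)norm of the residual vector: max_i |y_i - f(x_i)|."""
    return max(abs(yi - predict(w, b, xi)) for xi, yi in zip(X, y))


def mse_loss(w, b, X, y):
    """Smooth mean-squared-error loss, shown only for comparison."""
    return sum((yi - predict(w, b, xi)) ** 2 for xi, yi in zip(X, y)) / len(y)


def train_max_norm(X, y, steps=2000, lr0=0.5):
    """Subgradient descent on the nonsmooth max-norm loss (hypothetical helper).

    Only the worst-fitted sample (the one attaining the max) contributes
    to the subgradient. The step size lr0 / sqrt(k + 1) shrinks over time,
    and the best iterate is returned because a subgradient step is not
    guaranteed to decrease the loss.
    """
    w, b = [0.0] * len(X[0]), 0.0
    best = (max_norm_loss(w, b, X, y), list(w), b)
    for k in range(steps):
        residuals = [yi - predict(w, b, xi) for xi, yi in zip(X, y)]
        i = max(range(len(y)), key=lambda j: abs(residuals[j]))
        if residuals[i] == 0.0:
            break  # every residual is zero: the loss is already minimal
        s = 1.0 if residuals[i] > 0 else -1.0
        # A subgradient of |r_i| w.r.t. (w, b) is -s * (x_i, 1); step against it.
        lr = lr0 / (k + 1) ** 0.5
        w = [wj + lr * s * xij for wj, xij in zip(w, X[i])]
        b += lr * s
        loss = max_norm_loss(w, b, X, y)
        if loss < best[0]:
            best = (loss, list(w), b)
    return best


# Toy data made up for this sketch; class labels are encoded as -1 / +1.
X = [[0.0, 1.0], [1.0, 0.0], [2.0, 2.0], [3.0, 1.0]]
y = [-1.0, -1.0, 1.0, 1.0]
loss, w, b = train_max_norm(X, y)
print("max-norm loss:", loss, "MSE at same weights:", mse_loss(w, b, X, y))
```

Unlike the mean-squared error, which averages over all samples, the max-norm loss is driven entirely by the single worst-fitted sample, which is one plausible reason it might behave differently when a class has very few examples. Since the assumed model is affine, minimizing the max-norm loss is also a linear program (discrete Chebyshev approximation), so an LP solver would return an exact minimizer; subgradient descent is used here only to keep the sketch dependency-free and close to gradient-style training.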

Source journal: Advances in Computational Mathematics

CiteScore: 3.00

Self-citation rate: 5.90%

Articles published: 68

Review time: 3 months

Journal description:

Advances in Computational Mathematics publishes high quality, accessible and original articles at the forefront of computational and applied mathematics, with a clear potential for impact across the sciences. The journal emphasizes three core areas: approximation theory and computational geometry; numerical analysis, modelling and simulation; imaging, signal processing and data analysis.

This journal welcomes papers that are accessible to a broad audience in the mathematical sciences and that show either an advance in computational methodology or a novel scientific application area, or both. Methods papers should rely on rigorous analysis and/or convincing numerical studies.