调整集群选择的强化学习参数以改进进化算法

IF 4.3

Q2 ENGINEERING, CHEMICAL

引用次数: 0

摘要

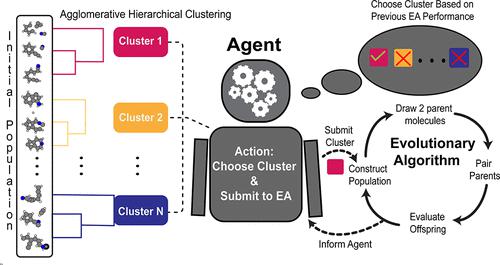

寻找具有所需特性的最佳分子结构是一项热门挑战,可应用于药物发现等领域。遗传算法是全局最小分子搜索的常用方法,因为它能够搜索能量景观的大区域,并通过并行化减少计算时间。为了减少不稳定中间结构的产生量,提高进化算法的整体效率,在多个实例中引入了聚类。然而,很少有文献详细说明区分聚类之间选择频率的效果。为了在我们的遗传算法中找到探索和利用之间的平衡,我们提出了一种对起始种群进行聚类的系统,并通过动态概率为进化算法运行选择聚类,该概率取决于每个聚类产生的分子的适合度。我们定义了四个参数:MFavOvrAll-A、MFavClus-B、NoNewFavClus-C 和 Select-D,它们分别对应于产生整体最佳结构的奖励、产生本集群最佳结构的奖励、未产生最佳结构的惩罚以及基于集群选择率的惩罚。奖励会增加群组未来被选中的概率,而惩罚则会降低这种概率。为了优化这四个参数,我们使用高斯分布来近似计算每个簇的进化算法性能,并对不同的参数组合进行网格搜索。结果显示,参数 MFavOvrAll-A(奖励产生最佳整体结构的集群)和参数 Select-D(外观惩罚)的效果明显大于参数 MFavClus-B 和 NoNewFavClus-C。为了生成最成功的模型,必须在 MFavOvrAll-A 和 Select-D 之间取得平衡,以反映强化学习算法中常见的开发与探索之间的权衡。结果表明,在喹啉类结构搜索中,我们基于强化学习的簇选择方法优于非簇进化算法。本文章由计算机程序翻译,如有差异,请以英文原文为准。

Tuning Reinforcement Learning Parameters for Cluster Selection to Enhance Evolutionary Algorithms

The ability to find optimal molecular structures with desired properties is a popular challenge, with applications in areas such as drug discovery. Genetic algorithms are a common approach to global minima molecular searches due to their ability to search large regions of the energy landscape and decrease computational time via parallelization. In order to decrease the amount of unstable intermediate structures being produced and increase the overall efficiency of an evolutionary algorithm, clustering was introduced in multiple instances. However, there is little literature detailing the effects of differentiating the selection frequencies between clusters. In order to find a balance between exploration and exploitation in our genetic algorithm, we propose a system of clustering the starting population and choosing clusters for an evolutionary algorithm run via a dynamic probability that is dependent on the fitness of molecules generated by each cluster. We define four parameters, MFavOvrAll-A, MFavClus-B, NoNewFavClus-C, and Select-D, that correspond to a reward for producing the best structure overall, a reward for producing the best structure in its own cluster, a penalty for not producing the best structure, and a penalty based on the selection ratio of the cluster, respectively. A reward increases the probability of a cluster’s future selection, while a penalty decreases it. In order to optimize these four parameters, we used a Gaussian distribution to approximate the evolutionary algorithm performance of each cluster and performed a grid search for different parameter combinations. Results show parameter MFavOvrAll-A (rewarding clusters for producing the best structure overall) and parameter Select-D (appearance penalty) have a significantly larger effect than parameters MFavClus-B and NoNewFavClus-C. In order to produce the most successful models, a balance between MFavOvrAll-A and Select-D must be made that reflects the exploitation vs exploration trade-off often seen in reinforcement learning algorithms. Results show that our reinforcement-learning-based method for selecting clusters outperforms an unclustered evolutionary algorithm for quinoline-like structure searches.

求助全文

通过发布文献求助,成功后即可免费获取论文全文。

去求助

来源期刊

ACS Engineering Au

化学工程技术-

自引率

0.00%

发文量

0

期刊介绍:

)ACS Engineering Au is an open access journal that reports significant advances in chemical engineering applied chemistry and energy covering fundamentals processes and products. The journal's broad scope includes experimental theoretical mathematical computational chemical and physical research from academic and industrial settings. Short letters comprehensive articles reviews and perspectives are welcome on topics that include:Fundamental research in such areas as thermodynamics transport phenomena (flow mixing mass & heat transfer) chemical reaction kinetics and engineering catalysis separations interfacial phenomena and materialsProcess design development and intensification (e.g. process technologies for chemicals and materials synthesis and design methods process intensification multiphase reactors scale-up systems analysis process control data correlation schemes modeling machine learning Artificial Intelligence)Product research and development involving chemical and engineering aspects (e.g. catalysts plastics elastomers fibers adhesives coatings paper membranes lubricants ceramics aerosols fluidic devices intensified process equipment)Energy and fuels (e.g. pre-treatment processing and utilization of renewable energy resources; processing and utilization of fuels; properties and structure or molecular composition of both raw fuels and refined products; fuel cells hydrogen batteries; photochemical fuel and energy production; decarbonization; electrification; microwave; cavitation)Measurement techniques computational models and data on thermo-physical thermodynamic and transport properties of materials and phase equilibrium behaviorNew methods models and tools (e.g. real-time data analytics multi-scale models physics informed machine learning models machine learning enhanced physics-based models soft sensors high-performance computing)

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: