DTC: Deep Tracking Control

IF 26.1

Zone 1, Computer Science

Q1 ROBOTICS

Citations: 0

Abstract

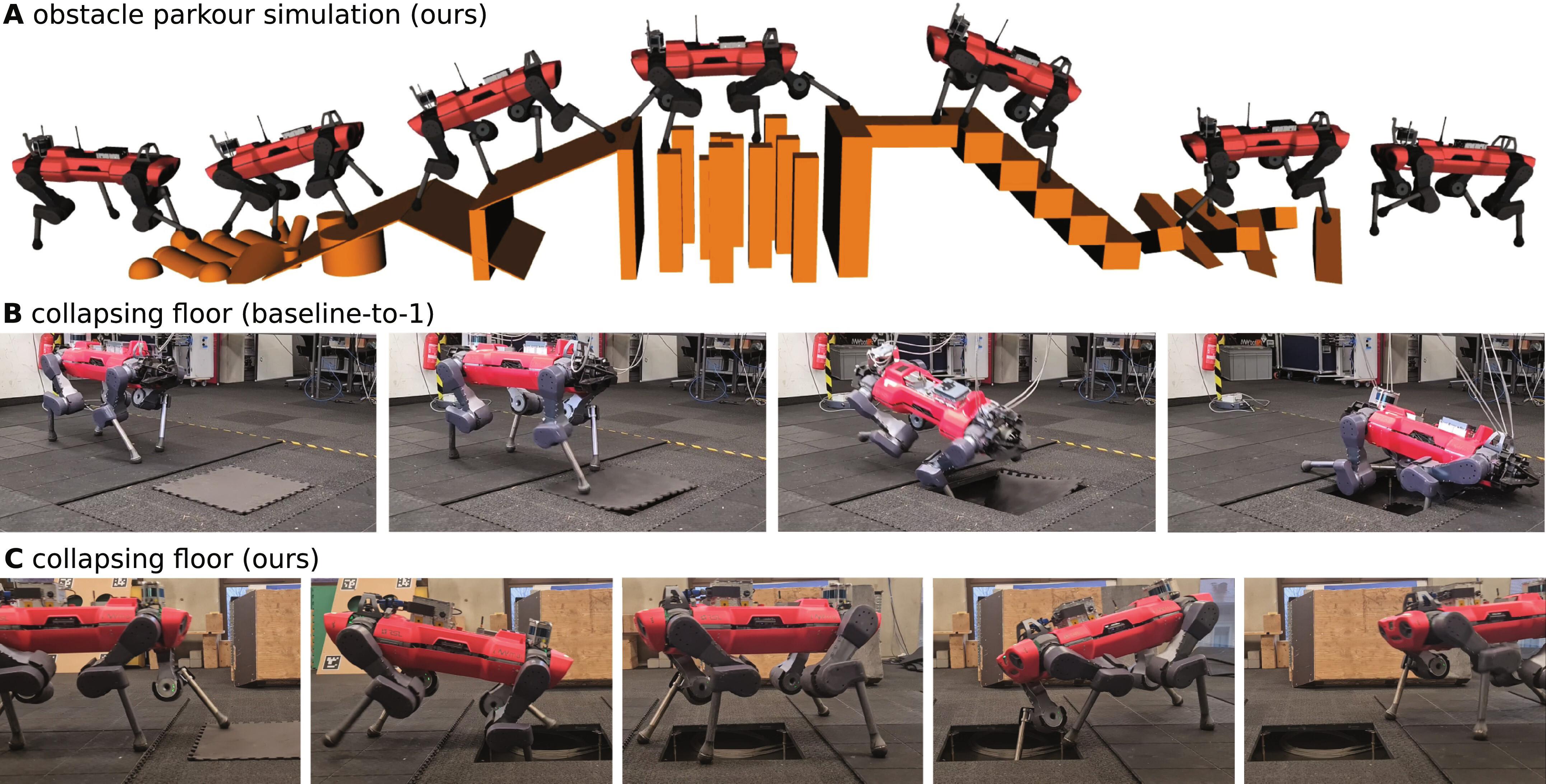

支腿运动是一个复杂的控制问题,需要同时具备准确性和鲁棒性才能应对现实世界的挑战。传统上,有脚系统是通过轨迹优化和反动力学来控制的。这种基于分层模型的方法很有吸引力,因为它具有直观的成本函数调整、精确的规划、通用性,最重要的是,它是通过十多年的广泛研究获得的深刻理解。然而,模型不匹配和违反假设是导致错误操作的常见原因。另一方面,基于仿真的强化学习可以产生具有前所未有的鲁棒性和恢复技能的运动策略。然而,所有的学习算法都很难应对有效立足点稀少的环境(如间隙或阶石)中出现的稀疏奖励。在这项工作中,我们提出了一种混合控制架构,该架构结合了两个世界的优势,可同时实现更高的鲁棒性、足部定位精度和地形泛化。我们的方法使用基于模型的规划器,在训练过程中推出参考运动。深度神经网络策略在模拟中进行训练,旨在跟踪优化后的立足点。我们评估了我们的运动管道在稀疏地形上的准确性,在这种地形上,纯数据驱动的方法很容易失败。此外,与基于模型的方法相比,我们证明了在地面湿滑或变形的情况下,我们的方法具有更强的鲁棒性。最后,我们展示了我们提出的跟踪控制器在不同轨迹优化方法中的通用性,这在训练过程中是看不到的。总之,我们的工作将在线规划的预测能力和优化保证与离线学习固有的鲁棒性结合在一起。本文章由计算机程序翻译,如有差异,请以英文原文为准。

Legged locomotion is a complex control problem that requires both accuracy and robustness to cope with real-world challenges. Legged systems have traditionally been controlled using trajectory optimization with inverse dynamics. Such hierarchical model-based methods are appealing because of intuitive cost function tuning, accurate planning, generalization, and, most importantly, the insightful understanding gained from more than one decade of extensive research. However, model mismatch and violation of assumptions are common sources of faulty operation. Simulation-based reinforcement learning, on the other hand, results in locomotion policies with unprecedented robustness and recovery skills. Yet, all learning algorithms struggle with sparse rewards emerging from environments where valid footholds are rare, such as gaps or stepping stones. In this work, we propose a hybrid control architecture that combines the advantages of both worlds to simultaneously achieve greater robustness, foot-placement accuracy, and terrain generalization. Our approach uses a model-based planner to roll out a reference motion during training. A deep neural network policy is trained in simulation, aiming to track the optimized footholds. We evaluated the accuracy of our locomotion pipeline on sparse terrains, where pure data-driven methods are prone to fail. Furthermore, we demonstrate superior robustness in the presence of slippery or deformable ground when compared with model-based counterparts. Last, we show that our proposed tracking controller generalizes across different trajectory optimization methods not seen during training. In conclusion, our work unites the predictive capabilities and optimality guarantees of online planning with the inherent robustness attributed to offline learning.
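The abstract's core idea, a learned policy rewarded for tracking footholds supplied by a planner, can be illustrated with a dense tracking reward. The following is a minimal sketch under stated assumptions, not the reward used in the paper: the Gaussian kernel, the `sigma` length scale, the averaging over stance feet, and all function names are hypothetical choices made for illustration.

```python
import math


def foothold_tracking_reward(foot_xy, target_xy, sigma=0.05):
    """Hypothetical dense reward for one foot.

    Returns 1.0 when the foot is exactly on the planned foothold and
    decays smoothly toward 0.0 as the horizontal distance grows.
    sigma (metres) is an assumed length scale, not a value from the paper.
    """
    dx = foot_xy[0] - target_xy[0]
    dy = foot_xy[1] - target_xy[1]
    dist_sq = dx * dx + dy * dy
    return math.exp(-dist_sq / (2.0 * sigma ** 2))


def total_tracking_reward(feet_xy, targets_xy, in_contact, sigma=0.05):
    """Average the per-foot reward over feet currently in contact.

    Feet in swing are ignored; returns 0.0 when no foot is in contact.
    """
    rewards = [
        foothold_tracking_reward(foot, target, sigma)
        for foot, target, contact in zip(feet_xy, targets_xy, in_contact)
        if contact
    ]
    return sum(rewards) / len(rewards) if rewards else 0.0
```

Because a reward of this shape is nonzero even for imperfect steps, it gives the learner a gradient toward the planned footholds rather than a signal only when a valid foothold is hit, which is one plausible way to ease the sparse-reward problem the abstract describes.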


Source journal

Science Robotics

Mathematics-Control and Optimization

CiteScore

30.60

Self-citation rate

2.80%

Articles published

83

About the journal:

Science Robotics publishes original, peer-reviewed, science- or engineering-based research articles that advance the field of robotics. The journal also features editor-commissioned Reviews. An international team of academic editors holds Science Robotics articles to the same high-quality standard that is the hallmark of the Science family of journals.

Sub-topics include: actuators, advanced materials, artificial intelligence, autonomous vehicles, bio-inspired design, exoskeletons, fabrication, field robotics, human-robot interaction, humanoids, industrial robotics, kinematics, machine learning, material science, medical technology, motion planning and control, micro- and nano-robotics, multi-robot control, sensors, service robotics, social and ethical issues, soft robotics, and space, planetary, and undersea exploration.
