When It Is OK to Give the Robot Less: Children's Fairness Intuitions Towards Robots

IF 3.8

Zone 2: Computer Science

Q2 ROBOTICS

Citations: 0

摘要

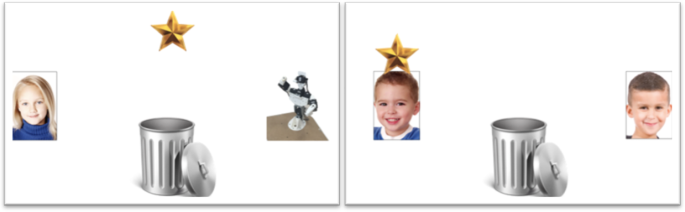

儿童在发育过程中较早形成公平的直觉。虽然我们知道孩子们相信其他人关心分配的公平,但他们是否相信其他代理人(如机器人)也会这样做,我们知之甚少。在两个实验中(N = 273),我们调查了4至9岁儿童的直觉,即机器人是否会对人类儿童的不公平待遇感到不安。孩子们被告知一个场景,在两种情况下,资源在一个人类孩子和一个目标接受者之间分配:另一个孩子或一个机器人。目标接受者(孩子或机器人)收到的比另一个孩子少。然后,他们被要求评估分配的公平程度,以及目标接收者是否会感到不安。实验1和实验2都使用了相同的设计,但实验2还包括一个视频,展示了机器人的机械“机器人”动作。我们的研究结果表明,当弱势接受者是机器人而不是儿童时,儿童认为不平等分享更公平(实验1和2)。此外,儿童认为儿童会比机器人更难过(实验2)。最后,我们发现,这种区别对待这两种情况的倾向随着年龄的增长而增强(实验2)。这些结果表明,幼儿在资源分配任务中对待机器人和儿童是相似的。但随着年龄的增长,它们之间的差别越来越大。具体来说,当目标接受者是机器人时,孩子们认为不平等的不公平程度会降低,并且认为机器人对不平等的愤怒会减少。本文章由计算机程序翻译,如有差异,请以英文原文为准。

When It Is OK to Give the Robot Less: Children’s Fairness Intuitions Towards Robots

Abstract

Children develop intuitions about fairness relatively early in development. While we know that children believe other humans care about distributional fairness, considerably less is known about whether they believe other agents, such as robots, do as well. In two experiments (N = 273) we investigated 4- to 9-year-old children’s intuitions about whether robots would be as upset about unfair treatment as human children. Children were told about a scenario in which resources were split between a human child and a target recipient. There were two conditions: the target recipient was either another child or a robot. In both conditions the target recipient received less than the other child. Children were then asked to evaluate how fair the distribution was and whether the target recipient would be upset. Experiments 1 and 2 used the same design, but Experiment 2 also included a video demonstrating the robot’s mechanistic “robotic” movements. Our results show that children thought it was fairer to share unequally when the disadvantaged recipient was a robot rather than a child (Experiments 1 and 2). Furthermore, children thought that the child would be more upset than the robot (Experiment 2). Finally, we found that this tendency to treat the two conditions differently became stronger with age (Experiment 2). These results suggest that young children treat robots and children similarly in resource allocation tasks, but increasingly differentiate them with age. Specifically, children evaluate inequality as less unfair when the target recipient is a robot, and think that robots will be less upset about inequality.

Source journal

International Journal of Social Robotics

ROBOTICS

CiteScore

9.80

Self-citation rate

8.50%

Articles published

95

About the journal:

Social Robotics is the study of robots that are able to interact and communicate among themselves, with humans, and with the environment, within the social and cultural structure attached to their roles. The journal covers a broad spectrum of topics related to the latest technologies, new research results and developments in the area of social robotics on all levels, from developments in core enabling technologies to system integration, aesthetic design, applications and social implications. It provides a platform for like-minded researchers to present their findings and latest developments in social robotics, covering relevant advances in engineering, computing, arts and social sciences.

The journal publishes original, peer reviewed articles and contributions on innovative ideas and concepts, new discoveries and improvements, as well as novel applications, by leading researchers and developers regarding the latest fundamental advances in the core technologies that form the backbone of social robotics, distinguished developmental projects in the area, as well as seminal works in aesthetic design, ethics and philosophy, studies on social impact and influence, pertaining to social robotics.
