Multimodal video retrieval with CLIP: a user study

IF 1.9

CAS Zone 3, Computer Science

Q3 COMPUTER SCIENCE, INFORMATION SYSTEMS

Citations: 0

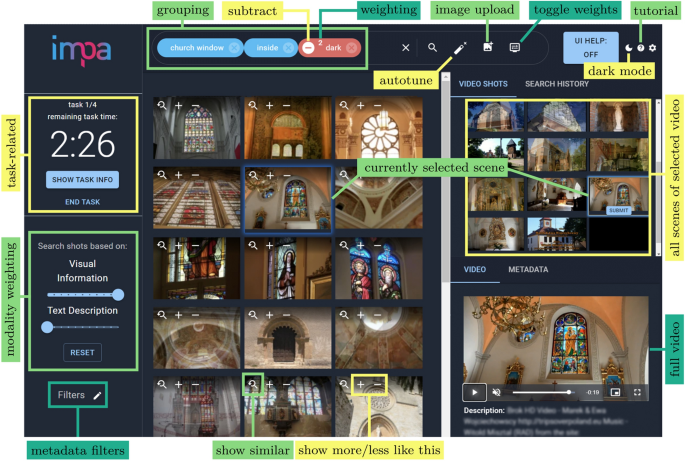

Abstract

Recent machine learning advances demonstrate the effectiveness of zero-shot models trained on large amounts of data collected from the internet. Among these, CLIP (Contrastive Language-Image Pre-training) has been introduced as a multimodal model with high accuracy on a number of different tasks and domains. However, the unconstrained nature of the model raises the question of whether it can be deployed effectively in open-domain, real-world applications used by non-technical users. In this paper, we evaluate whether CLIP can be used for multimodal video retrieval in a real-world environment. For this purpose, we implemented impa, an efficient shot-based retrieval system powered by CLIP. We additionally implemented advanced query functionality in a unified graphical user interface to make the use of CLIP for video retrieval tasks intuitive and efficient. Finally, we empirically evaluated our retrieval system by performing a user study with video editing professionals and journalists working in the TV news media industry. After the participants had solved open-domain video retrieval tasks, we collected data via questionnaires, interviews, and UI interaction logs. Our evaluation focused on the perceived intuitiveness of retrieval using natural language, retrieval accuracy, and how users interacted with the system's UI. We found that our advanced features yield higher task accuracy, higher user ratings, and more efficient queries. Overall, our results show the importance of designing intuitive and efficient user interfaces in order to deploy large models such as CLIP effectively in real-world scenarios.
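The abstract does not describe how impa ranks shots internally. As a minimal sketch of the general CLIP-style retrieval idea, assuming each shot is represented by one precomputed image embedding (for example, of a keyframe) and the query by a text embedding in the same space, shots can be ranked by cosine similarity. The names `build_index` and `search` are hypothetical, the vectors are toy stand-ins rather than real CLIP outputs, and this is not the paper's implementation.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    """Scale a vector to unit L2 norm so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def build_index(shot_embeddings: Dict[str, Vector]) -> Dict[str, List[float]]:
    """Hypothetical index: normalize every shot embedding once, at indexing time."""
    return {shot_id: normalize(emb) for shot_id, emb in shot_embeddings.items()}


def search(index: Dict[str, List[float]], query_embedding: Vector,
           top_k: int = 3) -> List[Tuple[str, float]]:
    """Return up to top_k (shot_id, cosine similarity) pairs, best match first."""
    q = normalize(query_embedding)
    # Both sides are unit vectors, so the dot product is the cosine similarity.
    scored = ((shot_id, sum(a * b for a, b in zip(q, emb)))
              for shot_id, emb in index.items())
    return heapq.nlargest(top_k, scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy 3-d vectors standing in for CLIP image embeddings of three shots.
    index = build_index({
        "shot_001": [0.9, 0.1, 0.0],
        "shot_002": [0.1, 0.9, 0.1],
        "shot_003": [0.7, 0.3, 0.2],
    })
    # Toy vector standing in for the CLIP text embedding of a query.
    results = search(index, [1.0, 0.0, 0.0], top_k=2)
    print([shot_id for shot_id, _ in results])  # ['shot_001', 'shot_003']
```

Normalizing shot embeddings once at indexing time reduces each query to one dot product per shot. A system searching a large news archive might instead use an approximate nearest-neighbor index, but that choice is not stated in the abstract.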

Source journal

Information Retrieval Journal

Engineering & Technology: Computer Science, Information Systems

CiteScore

6.20

Self-citation rate

0.00%

Publication volume

17

Review time

13.5 months

Journal description:

The journal provides an international forum for the publication of theory, algorithms, analysis and experiments across the broad area of information retrieval. Topics of interest include search, indexing, analysis, and evaluation for applications such as the web, social and streaming media, recommender systems, and text archives. This includes research on human factors in search, bridging artificial intelligence and information retrieval, and domain-specific search applications.
