CNN-LSTM Model for Recognizing Video-Recorded Actions Performed in a Traditional Chinese Exercise

IF 3.7

Zone 3, Medicine

Q2 ENGINEERING, BIOMEDICAL

IEEE Journal of Translational Engineering in Health and Medicine-Jtehm

Pub Date : 2023-06-02

DOI:10.1109/JTEHM.2023.3282245

Citations: 1

Abstract

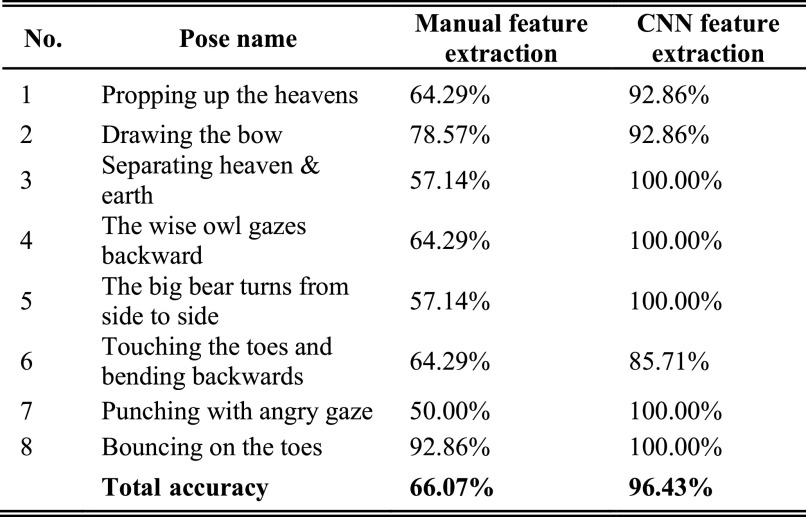

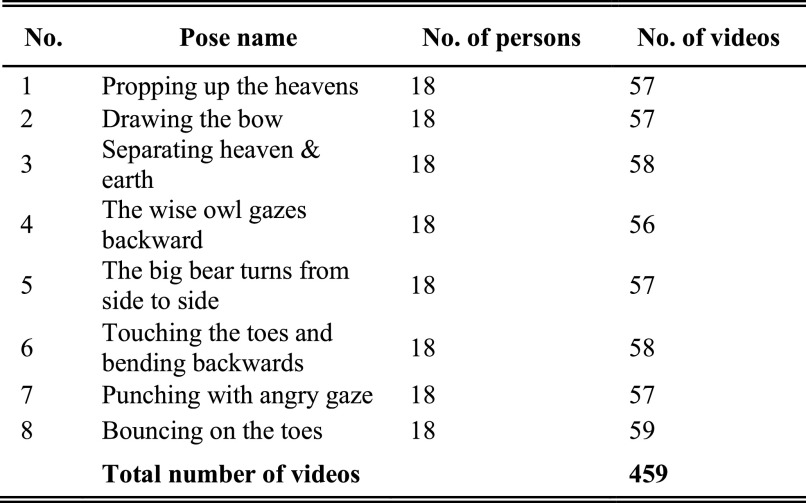

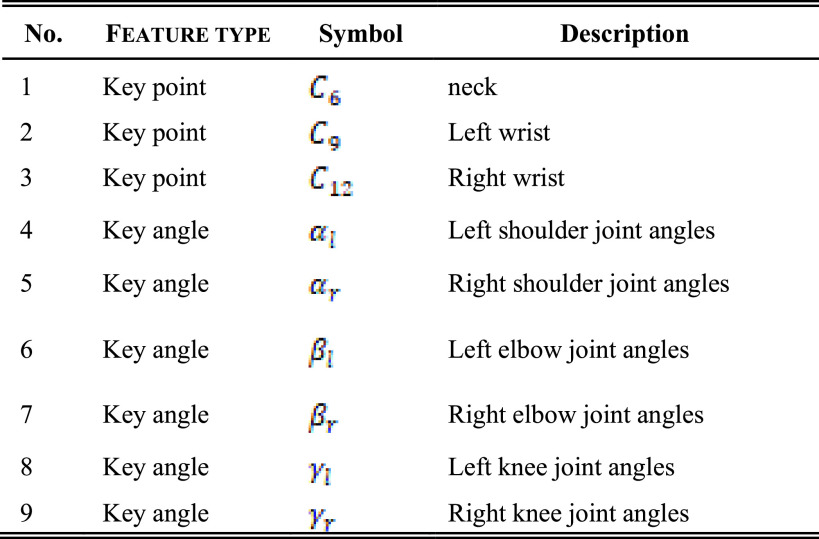


CNN-LSTM Model for Recognizing Video-Recorded Actions Performed in a Traditional Chinese Exercise

Identifying human actions from video data is an important problem in the field of intelligent rehabilitation assessment. Motion feature extraction and pattern recognition are the two key procedures for achieving this goal. Traditional action recognition models are usually based on geometric features manually extracted from video frames; these are difficult to adapt to complex scenarios and cannot achieve highly accurate, robust recognition. We investigate a motion recognition model and apply it to recognize the sequence of complicated actions of a traditional Chinese exercise (i.e., Baduanjin). We first developed a combined convolutional neural network (CNN) and long short-term memory (LSTM) model for recognizing the sequence of actions captured in video frames, and applied it to recognize the actions of Baduanjin. Moreover, this method was compared with a traditional action recognition model based on geometric motion features, in which OpenPose is used to identify the joint positions in the skeletons. Its high recognition accuracy was verified on the testing video dataset, which contains video clips from 18 different practitioners. The CNN-LSTM recognition model achieved 96.43% accuracy on the testing set, whereas the manually extracted features in the traditional action recognition model achieved only 66.07% classification accuracy on the testing video dataset. The abstract image features extracted by the CNN module are more effective at improving the classification accuracy of the LSTM model. The proposed CNN-LSTM based method can be a useful tool for recognizing complicated actions.
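The CNN-LSTM pipeline described in the abstract (per-frame image features from a CNN, passed as a sequence to an LSTM that classifies the clip) can be sketched roughly as follows. This is only an illustrative sketch in PyTorch, not the authors' architecture: the abstract does not specify the CNN backbone, layer sizes, frame count, or number of action classes, so the class name `CnnLstmClassifier`, the two small convolution layers, `feat_dim`, `hidden_dim`, and the default of 8 classes are all assumptions made for the example.

```python
import torch
from torch import nn


class CnnLstmClassifier(nn.Module):
    """Hypothetical sketch: per-frame CNN features -> LSTM -> clip-level class logits."""

    def __init__(self, num_classes: int = 8, feat_dim: int = 64, hidden_dim: int = 128):
        super().__init__()
        # Small illustrative CNN; the paper's actual backbone is not given in the abstract.
        self.cnn = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.Conv2d(16, feat_dim, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # global average pool -> (N, feat_dim, 1, 1)
        )
        # The LSTM consumes one feature vector per frame.
        self.lstm = nn.LSTM(feat_dim, hidden_dim, batch_first=True)
        self.head = nn.Linear(hidden_dim, num_classes)

    def forward(self, clips: torch.Tensor) -> torch.Tensor:
        # clips: (batch, time, channels, height, width)
        b, t, c, h, w = clips.shape
        frames = clips.reshape(b * t, c, h, w)   # fold time into the batch axis
        feats = self.cnn(frames).flatten(1)      # (b*t, feat_dim)
        feats = feats.reshape(b, t, -1)          # (b, t, feat_dim)
        _, (h_n, _) = self.lstm(feats)           # h_n: (num_layers, b, hidden_dim)
        return self.head(h_n[-1])                # (b, num_classes)


if __name__ == "__main__":
    model = CnnLstmClassifier()
    dummy_clips = torch.randn(2, 16, 3, 64, 64)  # 2 clips of 16 RGB frames (assumed sizes)
    logits = model(dummy_clips)
    print(logits.shape)
```

Classifying from the last hidden state is one common design choice; pooling over all time steps would be an equally plausible alternative, and the abstract does not say which the authors used.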


Source journal

IEEE Journal of Translational Engineering in Health and Medicine-Jtehm

Engineering-Biomedical Engineering

CiteScore

7.40

Self-citation rate

2.90%

Articles published

65

Review time

27 weeks

About the journal:

The IEEE Journal of Translational Engineering in Health and Medicine is an open access product that bridges the engineering and clinical worlds, focusing on detailed descriptions of advanced technical solutions to a clinical need along with clinical results and healthcare relevance. The journal provides a platform for state-of-the-art technology directions in the interdisciplinary field of biomedical engineering, embracing engineering, life sciences and medicine. A unique aspect of the journal is its ability to foster collaboration between physicians and engineers in presenting broad and compelling real-world technological and engineering solutions that can be implemented in the interest of improving quality of patient care and treatment outcomes, thereby reducing costs and improving efficiency. The journal provides an active forum for clinical research and relevant state-of-the-art technology for members of all the IEEE societies that have an interest in biomedical engineering, and reaches out directly to physicians and the medical community through the American Medical Association (AMA) and other clinical societies. The scope of the journal includes, but is not limited to, topics on: medical devices, healthcare delivery systems, global healthcare initiatives, and ICT-based services; technological relevance to healthcare cost reduction; technology affecting healthcare management, decision-making, and policy; advanced technical work that is applied to solving specific clinical needs.
