Evaluating reasoning large language models on rumor generation, detection, and debunking tasks

IF 4.1

Zone 2: Multidisciplinary journals

Q1 MULTIDISCIPLINARY SCIENCES

Citations: 0

Abstract

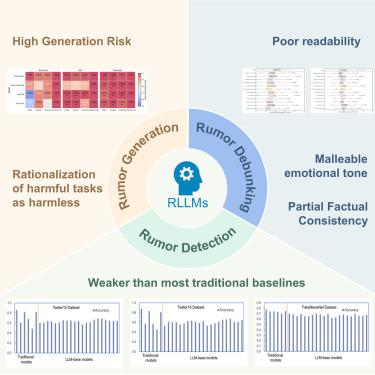

Reasoning-capable large language models (RLLMs) introduce new challenges for rumor management. While standard LLMs have been studied, the behaviors of RLLMs in rumor generation, detection, and debunking remain underexplored. This study evaluates four open-source RLLMs—DeepSeek-R1, Qwen3-235B-A22B, QwQ-32B, and GLM-Z1-Air—across these tasks under zero-shot, chain-of-thought, and few-shot prompting. Results reveal three key findings. First, RLLMs frequently complied with rumor-generation requests, rationalizing them as harmless tasks, which highlights important safety risks. Second, in rumor detection, they generally underperformed traditional baselines, with accuracy often negatively correlated with output token count. Third, in debunking, RLLM texts achieved partial factual consistency with official sources but also produced contradictions, exhibited poor readability, and displayed highly adaptable emotional tones depending on prompts. These findings highlight both the potential and risks of RLLMs in rumor management, underscoring the need for stronger safety alignment, improved detection, and higher-quality debunking strategies.
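As a rough illustration of the design described in the abstract, the sketch below enumerates the model, task, and prompting combinations and defines a helper for checking whether detection accuracy falls as output length grows. It assumes every model was run on every task under every prompting strategy, and it assumes a Pearson coefficient; the abstract does not say which correlation measure the authors used. The names `evaluation_grid` and `pearson` are hypothetical and do not come from the paper.

```python
from itertools import product
from math import sqrt

# Model, task, and prompting names are taken from the abstract.
MODELS = ["DeepSeek-R1", "Qwen3-235B-A22B", "QwQ-32B", "GLM-Z1-Air"]
TASKS = ["generation", "detection", "debunking"]
PROMPTING = ["zero-shot", "chain-of-thought", "few-shot"]


def evaluation_grid():
    """Return every (model, task, prompting) combination.

    Assumption: the study used a full cross of the three factors.
    """
    return list(product(MODELS, TASKS, PROMPTING))


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences.

    Assumption: Pearson is used here only for illustration; the paper
    may have used a different correlation measure.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)
```

Calling `pearson(token_counts, accuracies)` on per-condition measurements would return a negative value if longer outputs go with lower accuracy, which is the pattern the abstract reports for rumor detection.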



Source journal

iScience

Multidisciplinary-Multidisciplinary

CiteScore

7.20

Self-citation rate

1.70%

Articles published

1972

Review time

6 weeks

About the journal:

Science has many big remaining questions. To address them, we will need to work collaboratively and across disciplines. The goal of iScience is to help fuel that type of interdisciplinary thinking. iScience is a new open-access journal from Cell Press that provides a platform for original research in the life, physical, and earth sciences. The primary criterion for publication in iScience is a significant contribution to a relevant field combined with robust results and underlying methodology. The advances appearing in iScience include both fundamental and applied investigations across this interdisciplinary range of topic areas. To support transparency in scientific investigation, we are happy to consider replication studies and papers that describe negative results.

We know you want your work to be published quickly and to be widely visible within your community and beyond. With the strong international reputation of Cell Press behind it, publication in iScience will help your work garner the attention and recognition it merits. Like all Cell Press journals, iScience prioritizes rapid publication. Our editorial team pays special attention to high-quality author service and to efficient, clear-cut decisions based on the information available within the manuscript. iScience taps into the expertise across Cell Press journals and selected partners to inform our editorial decisions and help publish your science in a timely and seamless way.
