User-centric eXplainable AI criteria for implementing AI-based denoising in PET/CT

IF 2.8

Q2 RADIOLOGY, NUCLEAR MEDICINE & MEDICAL IMAGING

Citations: 0

Abstract

Introduction

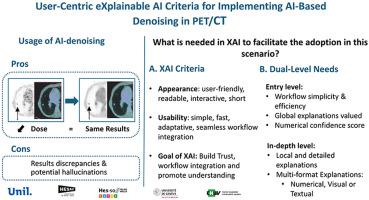

The clinical adoption of AI-based denoising in PET/CT relies on the development of transparent and trustworthy tools that align with radiographers' needs and support integration into routine practice. This study aims to determine the key characteristics of an eXplainable Artificial Intelligence (XAI) tool that meets radiographers' needs, in order to facilitate the clinical adoption of AI-based denoising algorithms in PET/CT.

Methods

Two focus groups were organised, involving ten voluntary participants recruited from nuclear medicine departments in Western Switzerland, forming a convenience sample of radiographers. Two different scenarios, in which the output either matched or mismatched the ground truth, were used to identify their needs and the questions they would like to ask in order to understand the AI-denoising algorithm. Additionally, the characteristics that an XAI tool should possess to best meet their needs were investigated. Content analysis was performed following the three steps outlined by Wanlin. Ethical clearance was obtained for the study.

Results

Ten radiographers (aged 31-60 years) identified two levels of explanation: (1) simple, global explanations with numerical confidence levels for rapid understanding in routine settings; and (2) detailed, case-specific explanations, using mixed formats where necessary and adapted to the clinical situation and the user, to build confidence and support decision-making. Key questions concerned the functions of the algorithm (‘what’), the clinical context (‘when’) and the dependency of the results (‘how’). An effective XAI tool should be easy, adaptable, user-friendly and not disruptive to workflows.

Conclusion

Radiographers need two levels of explanation from XAI tools: global summaries that preserve workflow efficiency and detailed, case-specific insights when needed. Meeting these needs is key to fostering trust, understanding, and integration of AI-based denoising in PET/CT.

Implications for practice

Implementing adaptive XAI tools tailored to radiographers’ needs can support clinical workflows and accelerate the adoption of AI in PET/CT imaging.



Source journal

Radiography

RADIOLOGY, NUCLEAR MEDICINE & MEDICAL IMAGING

CiteScore

4.70

Self-citation rate

34.60%

Articles published

169

Review time

63 days

About the journal:

Radiography is an International, English language, peer-reviewed journal of diagnostic imaging and radiation therapy. Radiography is the official professional journal of the College of Radiographers and is published quarterly. Radiography aims to publish the highest quality material, both clinical and scientific, on all aspects of diagnostic imaging and radiation therapy and oncology.
