High-resolution time-lapse imaging of droplet-cell dynamics via optimal transport and contrastive learning

IF 5.4

Zone 2, Engineering and Technology

Q1 BIOCHEMICAL RESEARCH METHODS

Citations: 0

Abstract

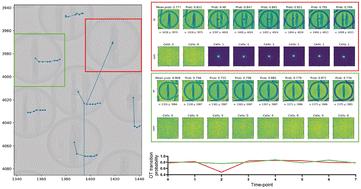

Single-cell analysis is essential for uncovering heterogeneous biological functions that arise from intricate cellular responses. Microfluidic droplet arrays enable high-throughput data collection by encapsulating cells in picoliter volumes, and time-lapse imaging of these arrays further reveals functional dynamics and changes. However, accurately tracking cell identities across frames separated by long intervals remains challenging when droplets move significantly. Specifically, existing machine learning methods often depend on labeled data or require neighboring cells as a reference; without them, these methods struggle to track droplets and cells over long distances in images with complex movement patterns. To address these limitations, we developed a pipeline that combines visual object detection, feature extraction via contrastive learning, and optimal transport-based object matching, minimizing the reliance on labeled training data. We validated our approach across various experimental and simulated conditions and were able to track thousands of water-in-oil microfluidic droplets over large distances and long intervals between frames (>30 min). We achieved high precision in previously untraceable scenarios, tracking 50 pL droplets in images with small, medium and large movements (corresponding to ∼126, ∼800 and ∼10 000 μm, respectively). The fraction of correctly tracked droplets was >90% for average movements of 2–12 droplet diameters and >60% for average movements of >100 droplet diameters. This workflow lays the foundation for tracking droplets over time in these arrays when large and complex movement patterns are present and when the uniqueness of the sample makes repeated experiments infeasible.

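Source journal

Lab on a Chip

Engineering and Technology - Chemistry, Multidisciplinary

CiteScore

11.10

Self-citation rate

8.20%

Articles published

434

Review time

2.6 months

About the journal: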

Lab on a Chip is the premier journal that publishes cutting-edge research in the field of miniaturization. By their very nature, microfluidic/nanofluidic/miniaturized systems are at the intersection of disciplines, spanning fundamental research to high-end application, which is reflected by the broad readership of the journal. Lab on a Chip publishes two types of papers on original research: full-length research papers and communications. Papers should demonstrate innovations, which can come from technical advancements or applications addressing pressing needs in globally important areas. The journal also publishes Comments, Reviews, and Perspectives.
