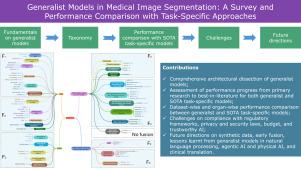

Generalist models in medical image segmentation: A survey and performance comparison with task-specific approaches

Impact factor: 15.5

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

Following the successful paradigm shift of large language models, which leverages pre-training on a massive corpus of data and fine-tuning on various downstream tasks, generalist models have made their foray into computer vision. The introduction of the Segment Anything Model (SAM) marked a milestone in the segmentation of natural images, inspiring the design of numerous architectures for medical image segmentation. In this survey, we offer a comprehensive and in-depth investigation of generalist models for medical image segmentation. We begin with an introduction to the fundamental concepts that underpin their development. Then, we provide a taxonomy based on feature fusion that covers the different variants of SAM (zero-shot, few-shot, fine-tuning, and adapters), SAM2, other innovative models trained on images alone, and models trained on both text and images. We thoroughly analyze their performance at the level of both primary research and best-in-literature results, followed by a rigorous comparison with state-of-the-art task-specific models. We emphasize the need to address challenges related to compliance with regulatory frameworks, privacy and security laws, budget, and trustworthy artificial intelligence (AI). Finally, we share our perspective on future directions concerning synthetic data, early fusion, lessons learnt from generalist models in natural language processing, agentic AI, physical AI, and clinical translation. We publicly release a database-backed interactive app with all survey data (https://hal9000-lab.github.io/GMMIS-Survey/).

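Source journal: Information Fusion

Category: Engineering Technology - Computer Science: Theory and Methods

CiteScore: 33.20

Self-citation rate: 4.30%

Articles published: 161

Review time: 7.9 months

Journal description: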

Information Fusion serves as a central platform for showcasing advancements in multi-sensor, multi-source, multi-process information fusion, fostering collaboration among diverse disciplines driving its progress. It is the leading outlet for sharing research and development in this field, focusing on architectures, algorithms, and applications. Papers dealing with fundamental theoretical analyses as well as those demonstrating their application to real-world problems will be welcome.
