CMF: Prediction refinement via complementary manifold-based multi-model fusion

IF 15.5

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

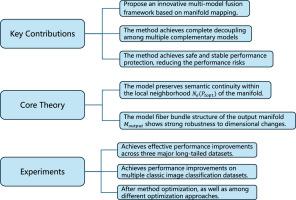

Current research on multi-model fusion focuses predominantly on the design of fusion algorithms and often overlooks filtering or selecting the outputs of individual base models before fusion. Moreover, most existing fusion methods are tightly coupled, which limits their flexibility and adaptability across application scenarios: once fusion is complete, the model architecture tends to become fixed, making it difficult to integrate new models or replace outdated components. To address these limitations and achieve state-of-the-art (SOTA) results on diverse single-label image classification tasks, such as fine-grained recognition and long-tailed distributions, without being constrained by model architecture, this paper proposes a highly generalizable multi-model complementary method. The approach is applicable to single-label multi-class classification tasks in any deep learning domain and has achieved global SOTA performance on multiple image classification benchmarks. It imposes no restrictions on the architecture, parameter settings, or training strategies of the base models, so existing SOTA models can be integrated directly. Furthermore, the fusion process is fully decoupled: each base model is trained independently and unaffected by fusion, preserving the advantages of its original training paradigm.


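Source journal: Information Fusion

Subject category: Engineering and Technology - Computer Science: Theory and Methods

CiteScore: 33.20

Self-citation rate: 4.30%

Articles published: 161

Review time: 7.9 months

Journal description: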

Information Fusion serves as a central platform for showcasing advancements in multi-sensor, multi-source, multi-process information fusion, fostering collaboration among diverse disciplines driving its progress. It is the leading outlet for sharing research and development in this field, focusing on architectures, algorithms, and applications. Papers dealing with fundamental theoretical analyses as well as those demonstrating their application to real-world problems will be welcome.
