Affinity-aware uncertainty quantification for learning with noisy labels

IF 7.6

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

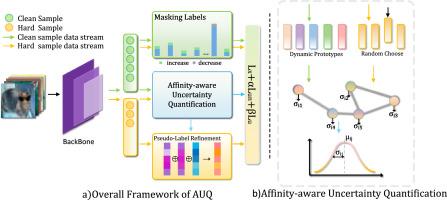

Training deep neural networks (DNNs) with noisy labels is challenging because label noise significantly degrades model performance. Most existing methods mitigate this problem by identifying and eliminating noisy samples, or by correcting their labels according to statistical properties such as confidence values. However, these methods often overlook the impact of inherent noise, such as poor sample quality, which can mislead DNNs into focusing on incorrect regions, contaminate the softmax classifier, and produce low-quality pseudo-labels. In this paper, we propose a novel Affinity-aware Uncertainty Quantification (AUQ) framework that explores perception ambiguity and rectifies salient bias by quantifying uncertainty. Concretely, we construct dynamic prototypes to represent intra-class semantic spaces and estimate uncertainty from sample-prototype pairs: the observed affinities between each sample and the prototypes are converted into probabilistic representations, which serve as the estimated uncertainty. Samples with higher uncertainty are likely to be hard samples, so we design an uncertainty-aware loss that emphasizes learning from them, helping DNNs gradually concentrate on the critical regions. In addition, we use sample-prototype affinities to adaptively refine pseudo-labels, improving the quality of the supervisory signals for noisy samples. Extensive experiments on the CIFAR-10, CIFAR-100 and Clothing1M datasets demonstrate the effectiveness of AUQ. Notably, we achieve an average performance gain of 0.4% on CIFAR-10 and a substantial average improvement of 2.3% over the second-best method on the more challenging CIFAR-100 dataset. Moreover, there is a 0.6% improvement over the second-best method on Clothing1M. These results validate AUQ’s capability to enhance DNN robustness against noisy labels.
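
The abstract describes the pipeline only in words, so the following is a minimal illustrative sketch rather than the authors' implementation. Every concrete choice here is an assumption made for illustration: the exponential-moving-average prototype update, cosine similarity as the affinity, a temperature softmax to turn affinities into probabilities, normalized entropy as the uncertainty score, the `(1 + u)` loss weight, and the convex mixing used to refine pseudo-labels. All function and parameter names are hypothetical.

```python
import math

def softmax(xs, temperature=1.0):
    # Numerically stable softmax over a list of scores.
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cosine(u, v):
    # Cosine similarity, used here as the sample-prototype affinity (assumption).
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)

def update_prototype(prototype, feature, momentum=0.9):
    # "Dynamic" prototype modeled as an exponential moving average (assumption).
    return [momentum * p + (1.0 - momentum) * f
            for p, f in zip(prototype, feature)]

def affinity_distribution(feature, prototypes, temperature=0.1):
    # Convert affinities to every class prototype into a probability vector.
    return softmax([cosine(feature, p) for p in prototypes], temperature)

def uncertainty(probs):
    # Normalized entropy in [0, 1]; 1 means maximally ambiguous (assumption).
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy / math.log(len(probs))

def uncertainty_weighted_ce(pred_probs, label, u):
    # Cross-entropy scaled up for uncertain (likely hard) samples (assumption).
    return (1.0 + u) * -math.log(pred_probs[label] + 1e-12)

def refine_pseudo_label(pred_probs, aff_probs, alpha=0.5):
    # Blend the classifier prediction with the affinity distribution (assumption).
    return [alpha * p + (1.0 - alpha) * a
            for p, a in zip(pred_probs, aff_probs)]

# Toy usage: two class prototypes in a 2-D feature space.
prototypes = [[1.0, 0.0], [0.0, 1.0]]
clear_feature = [0.9, 0.1]      # close to the class-0 prototype
ambiguous_feature = [0.5, 0.5]  # equally close to both prototypes

u_clear = uncertainty(affinity_distribution(clear_feature, prototypes))
u_ambiguous = uncertainty(affinity_distribution(ambiguous_feature, prototypes))

pred = [0.7, 0.3]  # hypothetical classifier output shared by both samples
loss_clear = uncertainty_weighted_ce(pred, 0, u_clear)
loss_ambiguous = uncertainty_weighted_ce(pred, 0, u_ambiguous)
refined = refine_pseudo_label(
    pred, affinity_distribution(clear_feature, prototypes))
```

In this toy setup the ambiguous sample receives a higher uncertainty score and therefore a larger loss weight than the clear sample, which mirrors the abstract's idea of emphasizing hard samples; the actual functional forms used by AUQ are given in the paper, not here.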

Source journal

Pattern Recognition

Engineering & Technology - Engineering: Electrical & Electronic

CiteScore

14.40

Self-citation rate

16.20%

Articles published

683

Review time

5.6 months

About the journal:

The field of Pattern Recognition is both mature and rapidly evolving, playing a crucial role in various related fields such as computer vision, image processing, text analysis, and neural networks. It closely intersects with machine learning and is being applied in emerging areas like biometrics, bioinformatics, multimedia data analysis, and data science. The journal Pattern Recognition, established half a century ago during the early days of computer science, has since grown significantly in scope and influence.
