HR-2DGS: Hybrid regularization for sparse-view 3D reconstruction with 2D Gaussian splatting

IF 2.8

Zone 4 (CAS journal ranking): Computer Science

Q2 COMPUTER SCIENCE, SOFTWARE ENGINEERING

Citations: 0

Abstract

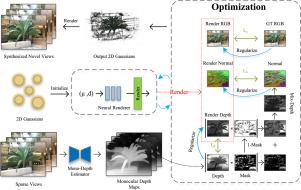

Sparse-view 3D reconstruction has attracted wide attention because it demands high-quality reconstruction from sparsely sampled input views. Existing NeRF-based methods rely on dense views and substantial computational resources, while 3D Gaussian Splatting (3DGS) suffers from multi-view inconsistency and insufficient recovery of geometric detail, so neither achieves ideal results in sparse-view scenarios. This paper introduces HR-2DGS, a novel hybrid regularization framework based on 2D Gaussian Splatting (2DGS). It significantly enhances multi-view consistency and geometric recovery by dynamically fusing monocular depth estimates with rendered depth maps and by incorporating hybrid normal regularization. To further refine local details, we introduce a per-pixel depth normalization that uses each pixel's neighborhood statistics to emphasize fine-scale geometric variations. Experimental results on the LLFF and DTU datasets show that HR-2DGS outperforms existing methods in PSNR, SSIM, and LPIPS while requiring only 2.5 GB of memory and a few minutes of training, and it supports real-time rendering.
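
The abstract does not give the exact form of the per-pixel depth normalization, so the following is only a minimal sketch of one plausible reading: each pixel is standardized by the mean and standard deviation of its square neighborhood, and the rendered and monocular depth maps are compared after that normalization. The function names `local_normalize_depth` and `local_depth_loss`, the window size, the edge padding, and the L1 comparison are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_normalize_depth(depth, window=7, eps=1e-6):
    """Standardize each pixel by the statistics of its window x window neighborhood.

    Hypothetical helper: the window size and edge padding are illustrative choices.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd so that it is centered on a pixel")
    depth = np.asarray(depth, dtype=np.float64)
    pad = window // 2
    # Replicate border values so that every pixel has a full neighborhood.
    padded = np.pad(depth, pad, mode="edge")
    # View of shape (H, W, window, window): one patch per output pixel.
    patches = sliding_window_view(padded, (window, window))
    mean = patches.mean(axis=(-2, -1))
    std = patches.std(axis=(-2, -1))
    return (depth - mean) / (std + eps)


def local_depth_loss(rendered_depth, mono_depth, window=7):
    """Mean absolute difference between locally normalized depth maps (assumed L1 form)."""
    r = local_normalize_depth(rendered_depth, window)
    m = local_normalize_depth(mono_depth, window)
    return float(np.mean(np.abs(r - m)))
```

Under these assumptions, the normalized map is approximately unchanged when the input depth is rescaled and shifted, which matters because monocular depth estimators typically predict depth only up to an unknown scale and offset. Dividing by the local rather than the global standard deviation also gives small depth variations in flat regions the same weight as large ones elsewhere, which is one way to read "emphasize fine-scale geometric variations".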

Source journal

Computers & Graphics-Uk

Engineering & Technology - Computer Science: Software Engineering

CiteScore

5.30

Self-citation rate

12.00%

Articles published

173

Review time

38 days

Journal description:

Computers & Graphics is dedicated to disseminating information on research and applications of computer graphics (CG) techniques. The journal encourages articles on:

1. Research and applications of interactive computer graphics. We are particularly interested in novel interaction techniques and applications of CG to problem domains.

2. State-of-the-art papers on late-breaking, cutting-edge research on CG.

3. Information on innovative uses of graphics principles and technologies.

4. Tutorial papers on both teaching CG principles and innovative uses of CG in education.
