Comprehensively evaluating the perception systems of autonomous vehicles against hazards

IF 15.5

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

Perception systems are vital to the safety of autonomous driving. In complex driving scenarios, autonomous vehicles must cope with various natural hazards, such as heavy rain or raindrops on the camera lens. It is therefore essential to test the perception systems of autonomous vehicles comprehensively against these hazards, just as regulatory agencies in many countries require of human drivers. Because there are many hazard scenarios, each with multiple configurable parameters, the challenges are (1) how to systematically and adequately test an autonomous vehicle against these hazard scenarios with a measurable outcome, and (2) how to efficiently explore the huge search space to identify scenarios that induce failure.
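Challenge (1) asks for a measurable notion of test adequacy. One common way to make this concrete is to split each hazard's parameter ranges into bins and report the fraction of (hazard, bin-combination) cells that at least one test has exercised. This approach is an assumption, not HazGT's documented metric. The catalogue `HAZARDS` and the helpers `bin_index`, `all_cells`, and `coverage` are hypothetical names used only for this sketch.

```python
from itertools import product

# Hypothetical hazard catalogue: hazard name -> {parameter: (low, high)}.
# These names and ranges are illustrative assumptions, not HazGT's 70 hazards.
HAZARDS = {
    "heavy_rain": {"intensity": (0.0, 1.0), "wind": (0.0, 20.0)},
    "lens_raindrops": {"drop_count": (0.0, 200.0)},
}


def bin_index(value, low, high, bins):
    """Map a parameter value to one of `bins` equal-width bins over [low, high]."""
    if not low <= value <= high:
        raise ValueError(f"{value} outside [{low}, {high}]")
    idx = int((value - low) / (high - low) * bins)
    return min(idx, bins - 1)  # the upper bound falls into the last bin


def all_cells(hazards, bins):
    """Enumerate every (hazard, bin-combination) cell of the discretized space."""
    cells = set()
    for name, params in hazards.items():
        for combo in product(range(bins), repeat=len(params)):
            cells.add((name, combo))
    return cells


def coverage(tests, hazards, bins=4):
    """Fraction of cells exercised by at least one test case.

    Each test is (hazard_name, {parameter: value}); parameters are read in the
    catalogue's declaration order so the bin tuple matches all_cells().
    """
    covered = set()
    for name, values in tests:
        params = hazards[name]
        combo = tuple(
            bin_index(values[p], lo, hi, bins) for p, (lo, hi) in params.items()
        )
        covered.add((name, combo))
    total = all_cells(hazards, bins)
    return len(covered & total) / len(total)
```

With 4 bins, this catalogue has 16 + 4 = 20 cells. The two tests `("heavy_rain", {"intensity": 0.9, "wind": 5.0})` and `("lens_raindrops", {"drop_count": 150.0})` each hit one cell, which gives a coverage of 0.1.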

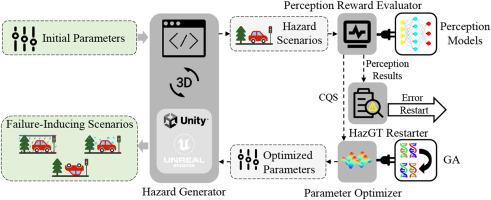

In this work, we propose a Hazards Generation and Testing framework (HazGT) that generates a customizable and comprehensive repository of hazard scenarios for evaluating the perception systems of autonomous vehicles. HazGT not only lets us measure how comprehensively an autonomous vehicle (AV) has been tested against different hazards but also supports identifying important hazards through optimization. Based on industry regulations, HazGT supports 70 kinds of hazards relevant to the visual perception of AVs, and it automatically optimizes their parameter values to achieve different testing objectives efficiently. We have implemented HazGT on two popular 3D engines, Unity and Unreal Engine. Through extensive experiments, we evaluated two mainstream perception models (YOLO and Faster RCNN) against each hazard; the results show that both leave much room for improvement. Our experiments also found that ChatGPT4 performs slightly worse than YOLO. Our optimization-based testing system is effective at finding perceptual errors in the perception models, and the hazard images generated by HazGT are instrumental in improving perception models.
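The abstract does not say which optimizer HazGT uses. The sketch below is a minimal, hedged illustration of optimization-based testing. It swaps in plain random search over a hypothetical two-parameter hazard space. A toy `mock_confidence` function stands in for rendering a scene in a 3D engine and running a detector. `SPACE`, `mock_confidence`, and `random_search` are all hypothetical names, and a configuration counts as a "failure" when the stand-in confidence drops below an assumed threshold.

```python
import random

# Hypothetical parameter space for a single hazard; an assumption for illustration.
SPACE = {"intensity": (0.0, 1.0), "fog_density": (0.0, 1.0)}


def mock_confidence(params):
    """Toy stand-in for a detector's confidence in [0, 1].

    A real setup would render the hazard and run a model such as YOLO;
    this formula exists only so the sketch is runnable.
    """
    penalty = 0.6 * params["intensity"] + 0.4 * params["fog_density"] ** 2
    return max(0.0, 1.0 - penalty)


def random_search(objective, space, budget, threshold, seed=0):
    """Sample `budget` configurations uniformly at random.

    Returns the lowest-scoring configuration, its score, and every sampled
    configuration whose score falls below `threshold` (treated as a failure).
    """
    rng = random.Random(seed)  # seeded for reproducible test campaigns
    best, best_score, failures = None, float("inf"), []
    for _ in range(budget):
        params = {k: rng.uniform(lo, hi) for k, (lo, hi) in space.items()}
        score = objective(params)
        if score < best_score:
            best, best_score = params, score
        if score < threshold:
            failures.append((params, score))
    return best, best_score, failures
```

A call such as `random_search(mock_confidence, SPACE, budget=200, threshold=0.3)` returns the worst configuration found and the list of failure-inducing ones. Random search is only a baseline. A guided optimizer would spend the same rendering budget more efficiently, which matters when each evaluation requires a full 3D render.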



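Source journal: Information Fusion
Subject category: Engineering Technology - Computer Science: Theory & Methods
CiteScore: 33.20
Self-citation rate: 4.30%
Articles published: 161
Review time: 7.9 months

About the journal: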

Information Fusion serves as a central platform for showcasing advances in multi-sensor, multi-source, multi-process information fusion, fostering collaboration among the diverse disciplines that drive its progress. It is the leading outlet for research and development in this field, focusing on architectures, algorithms, and applications. Papers presenting fundamental theoretical analyses, as well as those demonstrating applications to real-world problems, are welcome.
