DeTinyLLM: Efficient detection of machine-generated text via compact paraphrase transformation

IF 15.5

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

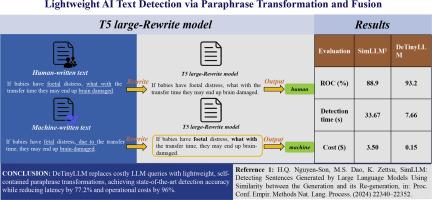

The growing fusion of human-written and machine-generated text poses significant challenges in distinguishing their origins, as advanced large language models (LLMs) increasingly mimic human linguistic patterns. Existing detection methods, such as SimLLM, rely on querying proprietary LLMs for proofreading to measure similarity, which incurs high computational costs and instability due to dependency on fluctuating model updates. To address these limitations, we propose DeTinyLLM, a novel framework that leverages fusion-driven compact paraphrase models for efficient and stable detection. First, we train a lightweight transformation model (e.g., fine-tuned T5-large) to rewrite machine-generated text into human-like text, effectively “de-AI-ifying” it through iterative fusion of syntactic and semantic features. For detection, the input text and its rewritten version are fused and classified via a hybrid neural network, capitalizing on divergence patterns between human and machine text. Experiments across diverse datasets demonstrate that DeTinyLLM achieves state-of-the-art accuracy (surpassing SimLLM by 4.3 % in ROC-AUC) while reducing inference latency by 77.2 %. By eliminating reliance on proprietary LLMs and integrating multi-level fusion of linguistic signals, this work advances scalable, cost-effective solutions for real-world deployment in AI-generated text detection systems.
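The rewrite-then-compare idea in the abstract can be illustrated with a small sketch. It is not the authors' implementation: the fine-tuned T5 rewriter is replaced by a hypothetical phrase-substitution function (`toy_rewrite`), and the hybrid neural classifier is replaced by a fixed threshold on token-level similarity. The sketch also assumes that machine-generated input is altered more by the rewriter than human-written input; the abstract does not state the direction of the divergence. All function names and the threshold value are illustrative.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict


def toy_rewrite(text: str) -> str:
    """Hypothetical stand-in for the fine-tuned rewriter (NOT a T5 model)."""
    replacements = {"in order to": "to", "utilize": "use", "furthermore": "also"}
    out = text
    for old, new in replacements.items():
        out = out.replace(old, new)
    return out


def divergence_features(text: str, rewritten: str) -> Dict[str, float]:
    """Pair the input with its rewrite and measure how much the rewrite changed it."""
    a, b = text.split(), rewritten.split()
    # ratio() is 1.0 when both token sequences are identical
    similarity = SequenceMatcher(None, a, b).ratio()
    length_ratio = len(b) / len(a) if a else 1.0
    return {"similarity": similarity, "length_ratio": length_ratio}


def detect(text: str, rewrite: Callable[[str], str], threshold: float = 0.8) -> bool:
    """Flag text as machine-generated when the rewrite diverges strongly from it.

    Assumption: a low similarity between input and rewrite signals machine text.
    The threshold is an arbitrary illustrative value, not taken from the paper.
    """
    feats = divergence_features(text, rewrite(text))
    return feats["similarity"] < threshold


if __name__ == "__main__":
    human = "we use a small model to check the text"
    machine = "furthermore we utilize a model in order to utilize data"
    print(detect(human, toy_rewrite))
    print(detect(machine, toy_rewrite))
```

In a real system the threshold rule would give way to a trained classifier that consumes both the input and its rewrite, as the abstract describes.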

Source journal

Information Fusion

Engineering & Technology - Computer Science: Theory & Methods

CiteScore

33.20

Self-citation rate

4.30%

Articles published

161

Review time

7.9 months

About the journal:

Information Fusion serves as a central platform for showcasing advancements in multi-sensor, multi-source, multi-process information fusion, fostering collaboration among diverse disciplines driving its progress. It is the leading outlet for sharing research and development in this field, focusing on architectures, algorithms, and applications. Papers dealing with fundamental theoretical analyses as well as those demonstrating their application to real-world problems will be welcome.
