Do you need help? Identifying and responding to pilots’ troubleshooting through eye-tracking and Large Language Model

IF 5.1

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, CYBERNETICS

International Journal of Human-Computer Studies

Pub Date : 2025-09-09

DOI:10.1016/j.ijhcs.2025.103617

Citations: 0

Abstract

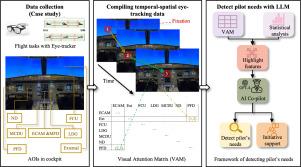

In-time automation support is crucial for enhancing pilots’ performance and flight safety. While extensive research has been conducted on providing automation support to mitigate risks associated with the Out-of-the-Loop (OOTL) phenomenon, limited attention has been given to supporting pilots who are actively engaged, known as In-the-Loop (ITL) status. Despite their active engagement, ITL pilots face challenges in managing multiple tasks simultaneously without additional support. For instance, providing critical information through in-time automation support can significantly improve efficiency and flight safety when pilots need to visually troubleshoot unexpected incidents while monitoring the aircraft’s flying status. This study addresses the gap in ITL support by introducing a method that utilizes eye-tracking data tokenized into Visual Attention Matrices (VAMs), integrated with a Large Language Model (LLM) to identify and respond to troubleshooting activities of ITL pilots. We address two primary challenges: capturing the complex troubleshooting status of pilots, which blends with normal monitoring behaviors, and effectively processing non-semantic eye-tracking data using LLM. The proposed VAM approach provides a structured representation of visual attention that supports LLM reasoning, while empirical VAMs enhance the model’s ability to efficiently identify critical features. A case study involving 19 licensed pilots validates the efficacy of the proposed approach in identifying and responding to pilots’ troubleshooting activities. This research contributes significantly to adaptive Human–Computer Interaction (HCI) in aviation by improving support for ITL pilots, thereby laying a foundation for future advancements in human–AI collaboration within automated aviation systems.
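The abstract does not specify how a Visual Attention Matrix is built or how it is passed to the LLM. As a rough illustration only, the sketch below assumes one plausible reading: a VAM is a count of gaze transitions between areas of interest (AOIs), serialized as plain text for a prompt. The AOI names, the functions `build_vam` and `vam_to_text`, and the text layout are all hypothetical and are not taken from the paper.

```python
# Hypothetical areas of interest (AOIs); the names are illustrative assumptions.
AOIS = ["PFD", "ND", "ENGINE", "OUTSIDE"]


def build_vam(fixations, aois=AOIS):
    """Count gaze transitions between consecutive fixations.

    Entry [i][j] is the number of times gaze moved from aois[i] to aois[j].
    Diagonal entries count consecutive fixations on the same AOI.
    This transition-count definition is an assumption, not the paper's.
    """
    index = {name: k for k, name in enumerate(aois)}
    matrix = [[0] * len(aois) for _ in aois]
    for src, dst in zip(fixations, fixations[1:]):
        matrix[index[src]][index[dst]] += 1
    return matrix


def vam_to_text(matrix, aois=AOIS):
    """Serialize the matrix as plain-text rows that could be placed in an LLM prompt."""
    header = "from/to " + " ".join(aois)
    rows = [
        name + " " + " ".join(str(value) for value in row)
        for name, row in zip(aois, matrix)
    ]
    return "\n".join([header] + rows)


# A made-up fixation sequence, one AOI label per fixation.
fixations = ["PFD", "ND", "PFD", "ENGINE", "ENGINE", "PFD"]
vam = build_vam(fixations)
print(vam_to_text(vam))
```

A text form like this gives a language model a fixed, labeled structure to reason over instead of raw gaze coordinates, which is the general motivation the abstract gives for tokenizing eye-tracking data.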


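Source journal

International Journal of Human-Computer Studies

Category: Engineering and Technology, Computer Science: Cybernetics

CiteScore: 11.50

Self-citation rate: 5.60%

Articles published: 108

Review time: 3 months

About the journal: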

The International Journal of Human-Computer Studies publishes original research over the whole spectrum of work relevant to the theory and practice of innovative interactive systems. The journal is inherently interdisciplinary, covering research in computing, artificial intelligence, psychology, linguistics, communication, design, engineering, and social organization, which is relevant to the design, analysis, evaluation and application of innovative interactive systems. Papers at the boundaries of these disciplines are especially welcome, as it is our view that interdisciplinary approaches are needed for producing theoretical insights in this complex area and for effective deployment of innovative technologies in concrete user communities.

Research areas relevant to the journal include, but are not limited to:

• Innovative interaction techniques

• Multimodal interaction

• Speech interaction

• Graphic interaction

• Natural language interaction

• Interaction in mobile and embedded systems

• Interface design and evaluation methodologies

• Design and evaluation of innovative interactive systems

• User interface prototyping and management systems

• Ubiquitous computing

• Wearable computers

• Pervasive computing

• Affective computing

• Empirical studies of user behaviour

• Empirical studies of programming and software engineering

• Computer supported cooperative work

• Computer mediated communication

• Virtual reality

• Mixed and augmented reality

• Intelligent user interfaces

• Presence

...
