D3PD: Dual distillation and dynamic fusion for camera-radar 3D perception

IF 7.6

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

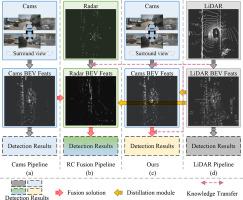

The demands of autonomous driving perception are fueling rapid advances in Bird's-Eye-View (BEV) techniques. Combining surround-view cameras with radar is regarded as a cost-effective way to improve the understanding of driving scenes. However, current radar-camera feature fusion methods lack effective guidance from environmental perception and the ability to adjust the fusion dynamically, which limits their performance in real-world scenarios. In this paper, we introduce the D3PD framework, which combines feature fusion with knowledge distillation to address this lack of dynamic guidance in existing radar-camera fusion methods. Our method has two key components: Radar-Camera Feature Enhancement (RCFE) and Dual Distillation Knowledge Transfer. RCFE enhances regions of interest in the BEV space, compensating for the weak object perception of single-modal features. Dual Distillation Knowledge Transfer consists of four modules: Camera Radar Sparse Distillation (CRSD), which transfers knowledge from sparse features and aligns teacher and student network features; Position-guided Sampling Distillation (SamD), which refines the knowledge transfer of fused features through dynamic sampling; Detection Constraint Result Distillation (DcRD), which strengthens the positional correlation between teacher and student outputs in the forward pass to achieve more precise detection; and Self-learning Mask Focused Distillation (SMFD), which uses self-learning to focus knowledge transfer on the detection results, reinforcing local key regions. On the nuScenes benchmark, D3PD outperforms existing methods, achieving 49.6% mAP and 59.2% NDS. On the occupancy prediction task, D3PD-Occ achieves 37.94% mIoU. These results offer insights for the design and training of camera-radar 3D object detection and occupancy prediction methods.
The code will be available at https://github.com/no-Name128/D3PD.
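The abstract does not describe how RCFE or the dynamic fusion weights the two modalities internally. For reference only, one common form of dynamic radar-camera fusion is a per-cell gate on the BEV grid that decides how much each modality contributes. The sketch below assumes that form; `gated_bev_fusion`, its weights `w_cam`, `w_rad`, `bias`, and the `[H][W][C]` nested-list layout are hypothetical and are not D3PD's design.

```python
import math
from typing import List

# Hypothetical BEV feature layout: [H][W][C] nested lists.
Grid = List[List[List[float]]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gated_bev_fusion(cam: Grid, radar: Grid,
                     w_cam: List[float], w_rad: List[float],
                     bias: float) -> Grid:
    """Hypothetical gated fusion, NOT the RCFE module itself.

    For every BEV cell a scalar gate g = sigmoid(w_cam . cam + w_rad . radar + bias)
    is computed, and the fused feature is g * cam + (1 - g) * radar.
    """
    fused: Grid = []
    for cam_row, rad_row in zip(cam, radar):
        out_row = []
        for c_vec, r_vec in zip(cam_row, rad_row):
            logit = (sum(w * v for w, v in zip(w_cam, c_vec))
                     + sum(w * v for w, v in zip(w_rad, r_vec))
                     + bias)
            g = sigmoid(logit)  # per-cell trust in the camera feature
            out_row.append([g * c + (1.0 - g) * r for c, r in zip(c_vec, r_vec)])
        fused.append(out_row)
    return fused


# Zero weights and bias give g = 0.5, i.e. a plain average of the two maps.
print(gated_bev_fusion([[[1.0, 2.0]]], [[[3.0, 4.0]]], [0.0, 0.0], [0.0, 0.0], 0.0))
# → [[[2.0, 3.0]]]
```

In a trained network the gate would usually come from a convolution over the concatenated camera and radar BEV maps; the dot product here only keeps the example dependency-free.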
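CRSD and SMFD are described only at a high level. A widely used pattern for focusing feature distillation on important regions is to weight a teacher-student feature imitation loss by a foreground mask, for example Gaussian peaks at object centers on the BEV grid: L = Σ M·‖F_T − F_S‖² / Σ M. The sketch below assumes that pattern; `gaussian_center_mask`, `masked_feature_distill_loss`, `sigma`, and normalizing by the mask sum are illustrative choices, not D3PD's formulation (the abstract describes SMFD's mask as self-learned rather than hand-built).

```python
import math
from typing import List, Tuple

Grid = List[List[List[float]]]  # hypothetical [H][W][C] BEV feature layout


def gaussian_center_mask(h: int, w: int, centers: List[Tuple[float, float]],
                         sigma: float) -> List[List[float]]:
    """Hypothetical foreground mask: per cell, the max of isotropic Gaussians at (x, y) centers."""
    mask = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for cx, cy in centers:
                d2 = (x - cx) ** 2 + (y - cy) ** 2
                mask[y][x] = max(mask[y][x], math.exp(-d2 / (2.0 * sigma ** 2)))
    return mask


def masked_feature_distill_loss(teacher: Grid, student: Grid,
                                mask: List[List[float]], eps: float = 1e-6) -> float:
    """Mask-weighted squared error between teacher and student features,
    normalized by the total mask weight (an assumed normalization)."""
    num, den = 0.0, 0.0
    for t_row, s_row, m_row in zip(teacher, student, mask):
        for t_vec, s_vec, m in zip(t_row, s_row, m_row):
            num += m * sum((t - s) ** 2 for t, s in zip(t_vec, s_vec))
            den += m
    return num / max(den, eps)
```

With `sigma = 0.5` on a 3×3 grid centered at (1, 1), a corner cell has weight exp(−4) ≈ 0.018, so an error there counts about 55 times less than the same error at the object center. That down-weighting is what concentrates the imitation on object regions.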
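SamD is said to refine the transfer of fused features through position-guided dynamic sampling, but its sampling rule is not given. One generic way to realize position-guided feature distillation is to bilinearly sample the teacher and student BEV maps at continuous object positions and penalize their difference only at those points. In this sketch the positions are assumed to be object centers already converted to BEV grid coordinates `(x, y)`. `bilinear_sample` and `sampled_distill_loss` are hypothetical helpers, not the SamD module.

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[List[float]]]  # hypothetical [H][W][C] layout, indexed feat[y][x][c]


def bilinear_sample(feat: Grid, x: float, y: float) -> List[float]:
    """Bilinearly interpolate a C-dim feature at continuous grid position (x, y).
    Positions outside the grid are clamped to the border."""
    h, w = len(feat), len(feat[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    return [
        feat[y0][x0][c] * (1 - dx) * (1 - dy)
        + feat[y0][x1][c] * dx * (1 - dy)
        + feat[y1][x0][c] * (1 - dx) * dy
        + feat[y1][x1][c] * dx * dy
        for c in range(len(feat[0][0]))
    ]


def sampled_distill_loss(teacher: Grid, student: Grid,
                         points: Sequence[Tuple[float, float]]) -> float:
    """Hypothetical position-guided loss: mean squared teacher-student
    difference evaluated only at the sampled (x, y) points."""
    if not points:
        return 0.0
    total = 0.0
    for x, y in points:
        t = bilinear_sample(teacher, x, y)
        s = bilinear_sample(student, x, y)
        total += sum((a - b) ** 2 for a, b in zip(t, s)) / len(t)
    return total / len(points)
```

Sampling at sub-cell positions keeps the loss sensitive to where an object actually sits rather than to the nearest grid cell. The points could also be made learnable offsets, which is one plausible reading of "dynamic" sampling, though the abstract does not confirm it.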
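DcRD is described as tightening the positional agreement between teacher and student outputs. A common generic form of result-level distillation is an L1 penalty between the student's and the teacher's box regression outputs, weighted by the teacher's confidence so that background predictions contribute little. The sketch assumes the predictions are already matched one-to-one (for example, per BEV cell of a dense detection head); `confidence_weighted_box_distill` and the box parameterization are hypothetical.

```python
from typing import Sequence


def confidence_weighted_box_distill(teacher_scores: Sequence[float],
                                    teacher_boxes: Sequence[Sequence[float]],
                                    student_boxes: Sequence[Sequence[float]],
                                    eps: float = 1e-6) -> float:
    """Hypothetical result distillation, NOT the DcRD module itself.

    loss = sum_i s_i * |b_i^S - b_i^T|_1 / sum_i s_i, where s_i is the teacher's
    confidence and each box b_i is any fixed-length regression vector
    (e.g. center offset, size, yaw terms).
    """
    if not (len(teacher_scores) == len(teacher_boxes) == len(student_boxes)):
        raise ValueError("predictions must be matched one-to-one")
    num, den = 0.0, 0.0
    for s, bt, bs in zip(teacher_scores, teacher_boxes, student_boxes):
        num += s * sum(abs(a - b) for a, b in zip(bs, bt))
        den += s
    return num / max(den, eps)


# The second prediction has teacher score 0, so its large error is ignored.
print(confidence_weighted_box_distill([1.0, 0.0], [[0.0, 0.0], [5.0, 5.0]],
                                      [[1.0, 1.0], [0.0, 0.0]]))
# → 2.0
```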
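The mAP and NDS figures follow the nuScenes detection metrics. NDS combines mAP with five true-positive error metrics (mATE, mASE, mAOE, mAVE, mAAE for translation, scale, orientation, velocity, and attribute error). Each is clipped at 1: NDS = (1/10)·[5·mAP + Σ (1 − min(1, mTP))]. The helper names below are illustrative, and values are fractions rather than percentages.

```python
from typing import Dict

TP_METRICS = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")


def nuscenes_detection_score(map_score: float, tp_errors: Dict[str, float]) -> float:
    """NDS = (5 * mAP + sum over the five TP metrics of (1 - min(1, error))) / 10."""
    missing = set(TP_METRICS) - set(tp_errors)
    if missing:
        raise ValueError(f"missing TP metrics: {sorted(missing)}")
    tp_term = sum(1.0 - min(1.0, tp_errors[name]) for name in TP_METRICS)
    return (5.0 * map_score + tp_term) / 10.0


def implied_tp_term(map_score: float, nds: float) -> float:
    """Invert the formula: the summed, clipped TP term implied by an mAP/NDS pair."""
    return 10.0 * nds - 5.0 * map_score


print(nuscenes_detection_score(0.5, {k: 0.0 for k in TP_METRICS}))  # → 0.75
```

Applied to the reported numbers, and assuming both come from the same evaluation split, 49.6% mAP and 59.2% NDS imply that the five clipped TP terms sum to 10 × 0.592 − 5 × 0.496 = 3.44, an average of about 0.69 per term.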
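The occupancy result is reported as mIoU over semantic classes: per class, IoU = TP / (TP + FP + FN) counted over voxels, and mIoU averages the per-class IoUs. The sketch below assumes flat lists of predicted and ground-truth voxel labels and an optional ignore label. Benchmark-specific details such as visibility masks or exactly which classes are averaged are not stated in the abstract and are left out. `mean_iou` is a hypothetical helper.

```python
from typing import Optional, Sequence


def mean_iou(pred: Sequence[int], gt: Sequence[int], num_classes: int,
             ignore_index: Optional[int] = None) -> float:
    """Mean of per-class IoU = TP / (TP + FP + FN) over voxels.

    Voxels whose ground-truth label equals ignore_index are skipped; classes with
    zero union (absent from both pred and gt) are left out of the mean.
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, g in zip(pred, gt):
        if ignore_index is not None and g == ignore_index:
            continue
        if p == g:
            inter[g] += 1   # true positive for class g
            union[g] += 1
        else:
            union[p] += 1   # false positive for class p
            union[g] += 1   # false negative for class g
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0
```

For `pred = [0, 0, 1, 1]` and `gt = [0, 1, 1, 1]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, so mIoU is 7/12 ≈ 0.583.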



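Source journal: Pattern Recognition

Category: Engineering & Technology - Engineering: Electrical & Electronic

CiteScore: 14.40

Self-citation rate: 16.20%

Articles published: 683

Review time: 5.6 months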

About the journal:

The field of Pattern Recognition is both mature and rapidly evolving, playing a crucial role in various related fields such as computer vision, image processing, text analysis, and neural networks. It closely intersects with machine learning and is being applied in emerging areas like biometrics, bioinformatics, multimedia data analysis, and data science. The journal Pattern Recognition, established half a century ago during the early days of computer science, has since grown significantly in scope and influence.
