Learning global-view correlation for salient object detection in 3D point clouds

IF 6.3

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

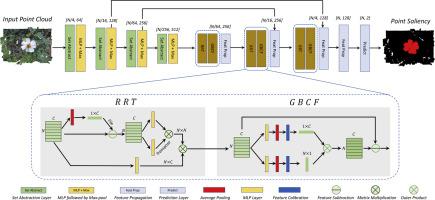

Salient object detection (SOD) in point clouds is an emerging research topic that aims to extract the most visually attractive objects from 3D point cloud representations. The inherent irregularity and unordered nature of 3D point clouds complicate salient object detection, because regular salient patterns are harder to learn than in 2D images. Meanwhile, existing methods typically focus on per-point context aggregation and overlook the scene-level global-view correlation that is crucial for saliency prediction. In this paper, we explore SOD in point clouds and introduce a novel approach that capitalizes on a comprehensive understanding of global-view 3D scenes. Our proposed method, the Saliency Filtration Network (SFN), refines saliency representations by isolating them from the common, scene-dependent global-view correlations. SFN follows a two-stage strategy: it first aggregates long-range context information and then purifies saliency from correlations that are common across the whole scene. For the first stage, we introduce the Residual Relation-aware Transformer (RRT) module, which takes human visual perception into account to exploit global-view context dependencies. For the second stage, we propose the Global Bilinear Correlation based Filtration (GBCF) module, which performs saliency purification from global-view correlations. GBCF establishes dense correlations between global space descriptors and global channel descriptors, which are then leveraged to purify the saliency representations. Experimental evaluations on the PCSOD benchmark demonstrate that our proposed method achieves state-of-the-art accuracy and significantly outperforms the other compared methods.
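The abstract does not give the GBCF equations, so the following is only a toy sketch of the general idea of correlating a per-point ("space") descriptor with a per-channel descriptor and using the result to suppress scene-common responses. Everything in it is an assumption made for illustration: the function name `filter_by_global_correlation`, the use of mean pooling for both descriptors, the outer product as the bilinear form, and the sigmoid gate. It is not the authors' implementation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def filter_by_global_correlation(features):
    """Toy filtration step (hypothetical, not the paper's GBCF).

    features: list of N points, each a list of C channel values.
    Returns a list of the same shape with scene-common responses damped.
    """
    n, c = len(features), len(features[0])
    # Assumed global space descriptor: one value per point (mean over channels).
    space = [sum(row) / c for row in features]
    # Assumed global channel descriptor: one value per channel (mean over points).
    channel = [sum(row[j] for row in features) / n for j in range(c)]
    # Dense N x C correlation map: outer product of the two descriptors.
    corr = [[space[i] * channel[j] for j in range(c)] for i in range(n)]
    # Assumption: a high correlation marks a scene-common response, so it is
    # gated down; the remainder is kept as the "purified" feature.
    return [
        [features[i][j] * (1.0 - sigmoid(corr[i][j])) for j in range(c)]
        for i in range(n)
    ]
```

Calling `filter_by_global_correlation([[1.0, 2.0], [3.0, 4.0]])` returns a 2 x 2 list in which every value is scaled down by its gate; a learned module would replace the fixed pooling and gating with trainable projections.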

Source journal

Neural Networks

Engineering & Technology - Computer Science: Artificial Intelligence

CiteScore

13.90

Self-citation rate

7.70%

Articles published

425

Review time

67 days

Journal description:

Neural Networks is a platform that aims to foster an international community of scholars and practitioners interested in neural networks, deep learning, and other approaches to artificial intelligence and machine learning. Our journal invites submissions covering various aspects of neural networks research, from computational neuroscience and cognitive modeling to mathematical analyses and engineering applications. By providing a forum for interdisciplinary discussions between biology and technology, we aim to encourage the development of biologically-inspired artificial intelligence.
