Dual interaction network with cross-image attention for medical image segmentation

IF 3.3

CAS Zone 3, Computer Science

Q2 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

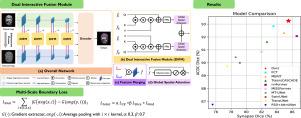

Medical image segmentation is a crucial method for assisting professionals in diagnosing various diseases through medical imaging. However, various factors such as noise, blurriness, and low contrast often hinder the accurate diagnosis of diseases. While numerous image enhancement techniques can mitigate these issues, they may also alter crucial information needed for accurate diagnosis in the original image. Conventional image fusion strategies such as feature concatenation can address this challenge. However, they struggle to fully leverage the advantages of both original and enhanced images while suppressing the side effects of the enhancements. To overcome the problem, we propose a dual interactive fusion module (DIFM) that effectively exploits mutual complementary information from the original and enhanced images. DIFM employs cross-attention bidirectionally to simultaneously attend to corresponding spatial information across different images, subsequently refining the complementary features via global spatial attention. This interaction leverages low- to high-level features implicitly associated with diverse structural attributes like edges, blobs, and object shapes, resulting in enhanced features that embody important spatial characteristics. In addition, we introduce a multi-scale boundary loss based on gradient extraction to improve segmentation accuracy at object boundaries. Experimental results on the ACDC and Synapse datasets demonstrate the superiority of the proposed method quantitatively and qualitatively.
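The two ideas named in the abstract, bidirectional cross-attention between original and enhanced features and a gradient-based multi-scale boundary loss, can be illustrated with a minimal NumPy sketch. This is not the authors' DIFM. The function names, the absence of learned projections, the residual-plus-sum fusion, the finite-difference gradient, the average-pool downsampling, and the L1 comparison are all assumptions made for illustration, and the global spatial attention refinement step is omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(query_tokens, context_tokens):
    # Scaled dot-product attention: each query token gathers information
    # from all context tokens. Shapes: (N, C) and (N, C) -> (N, C).
    # Assumption: no learned Q/K/V projections, to keep the sketch small.
    d = query_tokens.shape[-1]
    scores = query_tokens @ context_tokens.T / np.sqrt(d)
    return softmax(scores, axis=-1) @ context_tokens

def bidirectional_fusion(orig_feat, enh_feat):
    # orig_feat, enh_feat: (C, H, W) feature maps from the original and
    # enhanced images. Flatten spatial positions into tokens, attend in
    # both directions, then combine (hypothetical choice: residual + sum).
    c, h, w = orig_feat.shape
    o = orig_feat.reshape(c, h * w).T
    e = enh_feat.reshape(c, h * w).T
    o_from_e = o + cross_attend(o, e)  # original queries the enhanced
    e_from_o = e + cross_attend(e, o)  # enhanced queries the original
    fused = o_from_e + e_from_o
    return fused.T.reshape(c, h, w)

def gradient_magnitude(x):
    # Finite-difference gradient magnitude of a 2D map (assumed operator).
    gy, gx = np.gradient(x.astype(float))
    return np.sqrt(gx ** 2 + gy ** 2)

def downsample(x, factor):
    # Average pooling by an integer factor, cropping any remainder.
    h, w = x.shape
    h2, w2 = h // factor * factor, w // factor * factor
    blocks = x[:h2, :w2].reshape(h2 // factor, factor, w2 // factor, factor)
    return blocks.mean(axis=(1, 3))

def multiscale_boundary_loss(pred, target, factors=(1, 2, 4)):
    # Mean L1 distance between gradient maps of prediction and target,
    # averaged over several scales (the scales are hypothetical).
    losses = []
    for f in factors:
        gp = gradient_magnitude(downsample(pred, f))
        gt = gradient_magnitude(downsample(target, f))
        losses.append(np.mean(np.abs(gp - gt)))
    return float(np.mean(losses))
```

In this sketch the fusion is symmetric in its two inputs, and the boundary term is zero when prediction and target share the same edges at every scale. A trainable version would add learned projections and a differentiable framework.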



Source journal

Pattern Recognition Letters

Engineering & Technology - Computer Science: Artificial Intelligence

CiteScore

12.40

Self-citation rate

5.90%

Articles published

287

Review time

9.1 months

About the journal:

Pattern Recognition Letters aims at rapid publication of concise articles of a broad interest in pattern recognition.

Subject areas include all the current fields of interest represented by the Technical Committees of the International Association of Pattern Recognition, and other developing themes involving learning and recognition.
