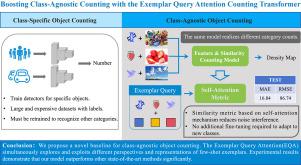

SEACount: Semantic-driven Exemplar query Attention framework for image boosting class-agnostic Counting in Internet of Things

IF 7.6

Zone 3, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

Citations: 0

Abstract

In the Internet of Things (IoT) environment, large amounts of visual data are continuously collected, providing a rich resource for intelligent surveillance and management. For the task of class-agnostic counting in images, this paper proposes the Semantic-driven Exemplar query Attention Counting (SEACount) framework, which aims to quickly adapt to and count objects of unseen classes using only a few exemplars. This is critical for real-time monitoring and analysis of visual semantic information in IoT. Specifically, we introduce two new components to extend the Detection Transformer (DETR): the Exemplar Query Attention (EQA) and the Dynamic Reshaping Module (DRM). EQA injects exemplar queries with rich semantic information into the decoder, facilitating the global image response to exemplar targets and enhancing the exemplar-to-image similarity metrics. Rather than relying on decoder features alone, the DRM fuses them with image features to enhance local details, reduce noise interference, and reshape the feature maps required for predicting density maps. This approach efficiently captures exemplar-relevant targets in images and quickly adapts to new categories without fine-tuning. Experimental results demonstrate that the proposed SEACount framework significantly outperforms other state-of-the-art methods on the FSC-147 dataset. We release the code at https://github.com/lxinhui1109/SEACount.git.
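The data flow described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the direction of attention (image tokens attending over exemplar queries), the residual-add fusion, and the channel-mean stand-in for a learned density head are all assumptions made for illustration. The only facts taken from the abstract are that exemplar queries interact with image features through attention, that decoder features are fused with image features and reshaped into feature maps, and that a density map is predicted. The count is read off as the sum of the density map, as is standard in density-based counting.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def exemplar_cross_attention(image_tokens, exemplar_queries):
    # Hypothetical stand-in for EQA: each image token attends over the
    # exemplar queries with scaled dot-product attention.
    d = image_tokens.shape[-1]
    scores = image_tokens @ exemplar_queries.T / np.sqrt(d)  # (H*W, K)
    weights = softmax(scores, axis=-1)                       # rows sum to 1
    return weights @ exemplar_queries                        # (H*W, d)

def fuse_and_reshape(decoded_tokens, image_tokens, height, width):
    # Hypothetical stand-in for DRM: fuse decoder output with image
    # features (a plain residual add here) and reshape to (d, H, W).
    fused = decoded_tokens + image_tokens
    return fused.T.reshape(-1, height, width)

def count_from_density(density_map):
    # In density-based counting, the predicted count is the map's sum.
    return float(density_map.sum())

# Toy dimensions (assumed): a 4x5 feature grid, 8 channels, 3 exemplars.
rng = np.random.default_rng(0)
H, W, D, K = 4, 5, 8, 3
image_tokens = rng.normal(size=(H * W, D))
exemplar_queries = rng.normal(size=(K, D))

decoded = exemplar_cross_attention(image_tokens, exemplar_queries)
feature_map = fuse_and_reshape(decoded, image_tokens, H, W)

# Placeholder for a learned density head: non-negative channel mean.
density = np.maximum(feature_map.mean(axis=0), 0.0)
print("toy count:", count_from_density(density))
```

With random inputs the printed count is meaningless; the sketch only shows how tensor shapes move from tokens to a spatial map to a scalar count. The released repository linked in the abstract is the reference for the actual architecture.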

Source journal

Internet of Things

Multiple-

CiteScore

3.60

Self-citation rate

5.10%

Articles published

115

Review time

37 days

About the journal:

Internet of Things; Engineering Cyber Physical Human Systems is a comprehensive journal encouraging cross collaboration between researchers, engineers and practitioners in the field of IoT & Cyber Physical Human Systems. The journal offers a unique platform to exchange scientific information on the entire breadth of technology, science, and societal applications of the IoT.

The journal places a high priority on timely publication and provides a home for high-quality work.

Furthermore, IOT is interested in publishing topical Special Issues on any aspect of IOT.
